{

"cells": [

{

"cell_type": "markdown",

"id": "efbc675e",

"metadata": {},

"source": [

"# Llama\n",

"\n",

"\n",

"\n",

"\n",

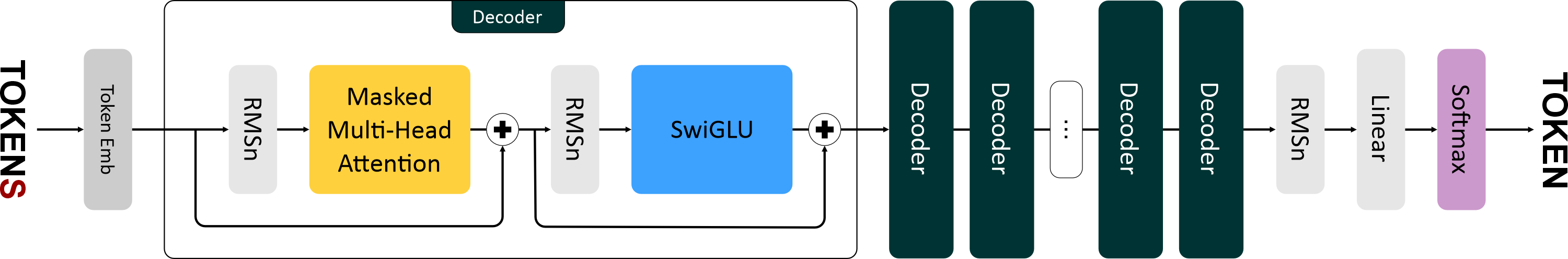

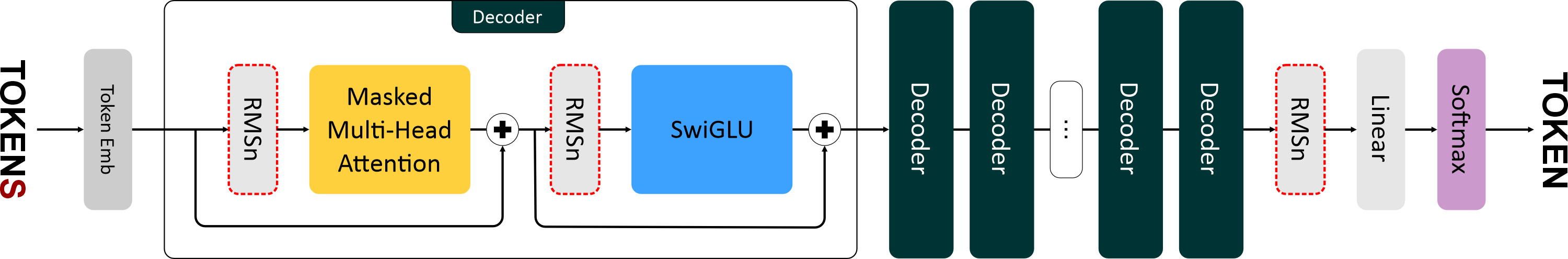

"Llama 1 вышла в феврале 2023 года. Это уже подальше, чем GPT-2. И в ее архитектуре появилось уже больше серьезных изменений:\n",

"\n",

"- Нормализация RMSNorm (вместе с pre-norm).\n",

"- Функция активации SwiGLU.\n",

"- Новый способ кодирования позиций — Rotary Positional Embeddings."

]

},

{

"cell_type": "markdown",

"id": "2cedc663",

"metadata": {},

"source": [

"# RMSNorm\n",

"\n",

"\n",

"\n",

"В Llama используется более быстрая и эффективная нормализация — **RMSNorm (Root Mean Square Normalization)**.\n",

"И, также как в GPT-2, используется *pre-norm* нормализация, то есть слои нормализации располагаются **перед блоками внимания и FNN**.\n",

"\n",

"RMSNorm отличается от обычной нормализации только одним: в нём исключен этап центрирования (вычитание среднего) и используется только масштабирование по RMS.\n",

"Это сокращает вычислительные затраты (на 7–64%) без существенной потери качества.\n",

"На картинке показана разница в распределении после применения RMSNorm и LayerNorm к исходным данным — RMSNorm не разбросан вокруг нуля.\n",

"\n",

"

\n",

" \n",

"

\n",

"\n",

"## Этапы вычисления RMSNorm\n",

"\n",

"1. **Вычисление среднеквадратичного значения:**\n",

"\n",

" $$\\text{RMS}(\\mathbf{x}) = \\sqrt{\\frac{1}{d} \\sum_{j=1}^{d} x_j^2}$$\n",

"\n",

"2. **Нормализация входящего вектора:**\n",

"\n",

" $$\\hat{x}_i = \\frac{x_i}{\\text{RMS}(\\mathbf{x})}$$\n",

"\n",

"3. **Применение масштабирования:**\n",

"\n",

" $$y_i = w_i \\cdot \\hat{x}_i$$\n",

"\n",

"---\n",

"\n",

"**Где:**\n",

"\n",

"* $x_i$ — *i*-й элемент входящего вектора.\n",

"* $w_i$ — *i*-й элемент обучаемого вектора весов.\n",

" Использование весов позволяет модели адаптивно регулировать амплитуду признаков.\n",

" Без них нормализация была бы слишком «жёсткой» и могла бы ограничить качество модели.\n",

"* $d$ — размерность входящего вектора.\n",

"* $\\varepsilon$ — малая константа (например, 1e-6), предотвращает деление на ноль.\n",

"\n",

"---\n",

"\n",

"Так как на вход подаётся тензор, то в векторной форме RMSNorm вычисляется так:\n",

"\n",

"$$\n",

"RMSNorm(x) = w ⊙ \\frac{x}{\\sqrt{mean(x^2) + ϵ}}\n",

"$$\n",

"\n",

"**Где:**\n",

"\n",

"* $x$ — входящий тензор размера `batch_size × ...`\n",

"\n"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "873704be",

"metadata": {},

"outputs": [],

"source": [

"import torch\n",

"from torch import nn\n",

"\n",

"class RMSNorm(nn.Module):\n",

" def __init__(self, dim: int, eps: float = 1e-6):\n",

" super().__init__()\n",

" self._eps = eps\n",

" self._w = nn.Parameter(torch.ones(dim))\n",

" \n",

" def forward(self, x: torch.Tensor): # [batch_size × seq_len × emb_size]\n",

" rms = (x.pow(2).mean(-1, keepdim=True) + self._eps) ** 0.5\n",

" norm_x = x / rms\n",

" return self._w * norm_x"

]

},

{

"cell_type": "markdown",

"id": "09dd9625",

"metadata": {},

"source": [

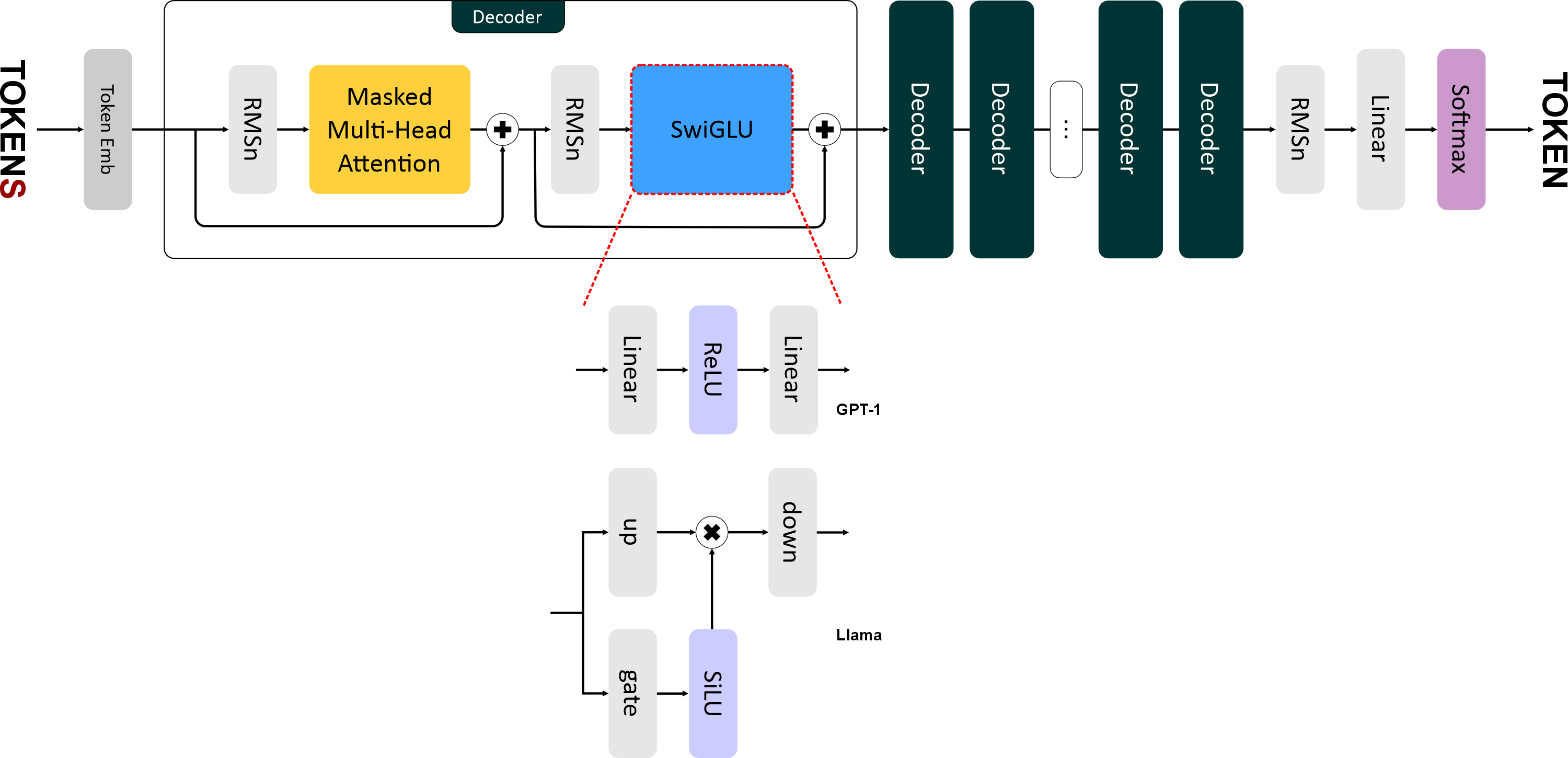

"# SwiGLU\n",

"\n",

"\n",

"\n",

"В **Llama** ввели новую функцию активации — **SwiGLU (Swish-Gated Linear Unit)** — это гибридная функция активации, которая представляет собой комбинацию трёх линейных преобразований и функции активации **SiLU (Sigmoid Linear Unit)**, она же *Swish* в терминологии Google.\n",

"\n",

"Формула SwiGLU выглядит так:\n",

"\n",

"$$\n",

"\\text{SwiGLU}(x) = \\text{down}(\\text{SiLU}(\\text{gate}(x)) \\otimes \\text{up}(x))\n",

"$$\n",

"\n",

"где:\n",

"\n",

"* $x$ — входящий тензор.\n",

"* $\\text{gate}(x)$ — линейный слой для гейтового механизма. Преобразует вход `x` размерностью `emb_size` в промежуточное представление размерности `4 * emb_size`.\n",

"* $\\text{up}(x)$ — линейный слой для увеличения размерности. Также преобразует `x` в размерность `4 * emb_size`.\n",

"* $\\text{SiLU}(x) = x \\cdot \\sigma(x)$ — функция активации, где $\\sigma$ — сигмоида.\n",

"* $\\otimes$ — поэлементное умножение.\n",

"* $\\text{down}(x)$ — линейный слой для уменьшения промежуточного представления до исходного размера (`emb_size`).\n",

"\n",

"> **Гейтинг** (от слова *gate* — «врата») — это механизм, который позволяет сети динамически фильтровать, какая информация должна проходить дальше.\n",

"> При гейтинге создаются как бы два независимых потока:\n",

">\n",

"> * один предназначен для прямой передачи информации (*up-down*),\n",

"> * другой — для контроля передаваемой информации (*gate*).\n",

">\n",

"> Это позволяет сети учить более сложные паттерны.\n",

"> Например, гейт может научиться:\n",

"> «если признак A активен, то пропусти признак B»,\n",

"> что невозможно с простой функцией активации между линейными слоями.\n",

">\n",

"> Также гейтинг помогает с затуханием градиентов: вместо простого обнуления (как в ReLU), гейт может тонко модулировать силу сигнала.\n",

"\n",

"SwiGLU более сложная (дорогая), чем ReLU/GELU, так как требует больше вычислений (три линейных преобразования вместо двух).\n",

"Но при этом показывает лучшее качество по сравнению с ReLU и GELU.\n",

"\n",

"График **SiLU** похож на **GELU**, но более гладкий:\n",

"\n",

"