mirror of

https://github.com/pese-git/llm-arch-research.git

synced 2026-01-23 13:00:54 +00:00

- implement Mistral model in llm/models/mistral/mistral.py with GroupedQueryAttention, SwiGLU, RoPE, sliding window attention - add __init__.py for module export - add config files for mistral training and generation - update universal experiment runner to support Mistral model - add notebook for Mistral experiments

3268 lines

150 KiB

Plaintext

3268 lines

150 KiB

Plaintext

{

|

||

"cells": [

|

||

{

|

||

"cell_type": "markdown",

|

||

"id": "0939798d",

|

||

"metadata": {},

|

||

"source": [

|

||

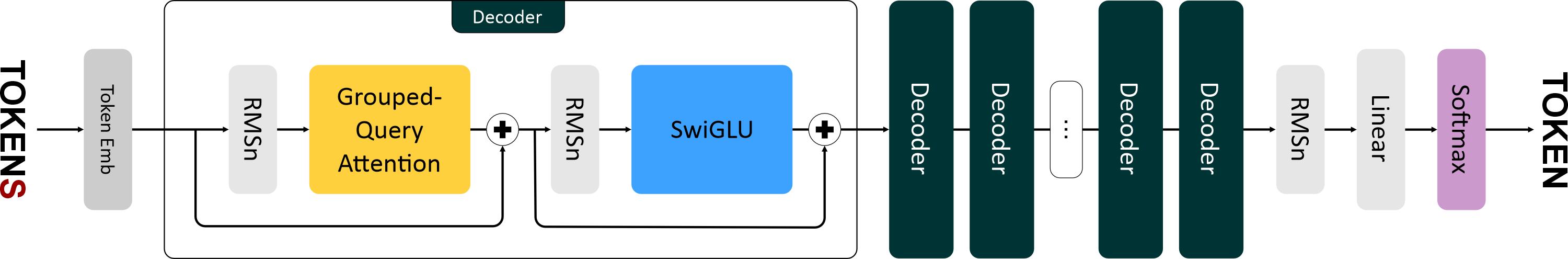

"# Mistral\n",

|

||

"\n",

|

||

"\n",

|

||

"\n",

|

||

"\n",

|

||

"\n",

|

||

"1-е поколение Mistral вышло в сентябре 2023 года.\n",

|

||

"\n",

|

||

"Mistral получил те же улучшения, что и у Llama: RMSNorm, SwiGLU и RoPE. Но мистраль пошел дальше и добавил еще две оптимизационные фишки:\n",

|

||

"\n",

|

||

"\n",

|

||

"- Grouped-Query Attention (GQA)\n",

|

||

"- Sliding Window Attention (SWA)\n",

|

||

"\n",

|

||

"\n",

|

||

"Обе модифицируют механизм внимания. И обе предназначены для экономии памяти и ускорения вычислений."

|

||

]

|

||

},

|

||

{

|

||

"cell_type": "markdown",

|

||

"id": "ec28fd32",

|

||

"metadata": {},

|

||

"source": [

|

||

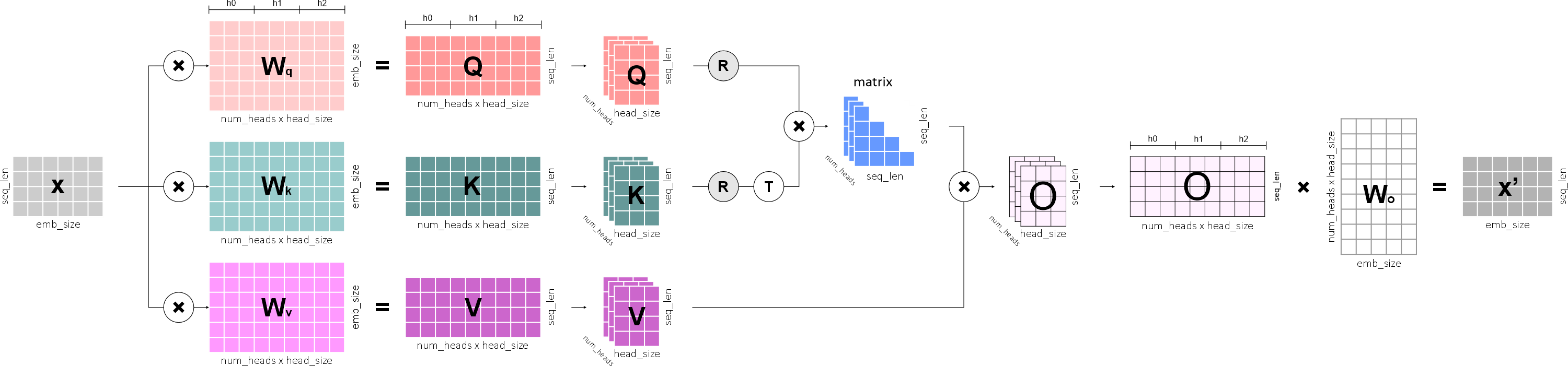

"# Masked Multi-Head Attention, ver. 2.0\n",

|

||

"\n",

|

||

"В текущей реализации **Multi-Head Attention** у нас каждая голова живет своей жизнью и обрабатывается по отдельности:\n",

|

||

"\n",

|

||

"<p align=\"center\">\n",

|

||

" <img src=\"https://ucarecdn.com/e4d8fadc-9817-4147-9b91-520855ba0d19/\" alt=\"multi_head_1\" width=\"1000\" height=\"331\">\n",

|

||

"</p>\n",

|

||

"\n",

|

||

"* Класс `MultiHeadAttention` получает на вход тензор размером `batch_size × seq_len × emb_size` и передает его в каждую голову.\n",

|

||

"* В голове тензор перемножается с тремя матрицами весов: `W_k`, `W_q`, `W_v`, каждая размером `emb_size × head_size`.\n",

|

||

"* В результате получаются три матрицы: запроса (query), ключа (key) и значения (value). Каждая из них имеет размер `batch_size × seq_len × head_size`.\n",

|

||

"* Матрицы запроса (query) и ключа (key) мы поворачиваем с помощью техники RoPE.\n",

|

||

"* Матрицу ключа (key) транспонируем и перемножаем с матрицей запроса (query). В результате получается матрица внимания.\n",

|

||

"* Далее перемножаем матрицу внимания и матрицу значения (value).\n",

|

||

"* На выходе из головы получается тензор размера `batch_size × seq_len × head_size`.\n",

|

||

"* Выходы из всех голов конкатенируются и умножаются на выходные веса, что уменьшает их размер.\n",

|

||

"* На выходе из `MultiHeadAttention` у нас получается тензор такого же размера, какой поступил на вход: `batch_size × seq_len × emb_size`.\n",

|

||

"\n",

|

||

"Теперь нам нужно оптимизировать вычисления и сделать так, чтобы все головы вычислялись одновременно в классе `MultiHeadAttention`. Для этого изменим алгоритм следующим образом:\n",

|

||

"\n",

|

||

"\n",

|

||

"\n",

|

||

"* Класс `MultiHeadAttention` получает на вход тензор размером `batch_size × seq_len × emb_size`.\n",

|

||

"* Тензор перемножается с тремя матрицами весов: `W_q`, `W_k`, `W_v`. Но на этот раз они имеют размер `emb_size × (num_heads * head_size)`.\n",

|

||

" То есть, мы как бы расширили каждую матрицу весов по горизонтали на число голов.\n",

|

||

"* После перемножения получаются три матрицы: запроса (query), ключа (key) и значения (value). Каждая из них также стала шире на количество голов: `batch_size × seq_len × (num_heads * head_size)`.\n",

|

||

"* Переводим матрицы запроса (query), ключа (key) и значения (value) в форму четырехмерного тензора:\n",

|

||

" `batch_size × num_heads × seq_len × head_size`. Это необходимо для дальнейших матричных операций.\n",

|

||

"* Матрицы запроса (query) и ключа (key) мы поворачиваем с помощью техники RoPE.\n",

|

||

"* Транспонируем тензор ключа и перемножаем его с тензором запроса. Получится матрица внимания, которая будет иметь размер\n",

|

||

" `batch_size × num_heads × seq_len × seq_len`.\n",

|

||

"* Далее перемножаем матрицу внимания и тензор значения (value). Получается тензор размером\n",

|

||

" `batch_size × num_heads × seq_len × head_size`. Переводим тензор в «плоский» вид:\n",

|

||

" `batch_size × seq_len × (num_heads * head_size)`.\n",

|

||

"* Пропускаем тензор через выходную проекцию (`batch_size × (num_heads * head_size) × emb_size`), чтобы уменьшить его размер.\n",

|

||

"* На выходе из класса получается тензор точно такого же размера, какой поступил на вход:\n",

|

||

" `batch_size × seq_len × emb_size`.\n",

|

||

"\n",

|

||

"Ну и также версия с кэшем (когда на вход приходит только один токен):\n",

|

||

"\n",

|

||

"\n",

|

||

"\n",

|

||

"Единственное изменение: после выполнения поворота мы объединяем текущий тензор с тензором кэшей (для векторов ключа и значения)."

|

||

]

|

||

},

|

||

{

|

||

"cell_type": "markdown",

|

||

"id": "d53f1cfc",

|

||

"metadata": {},

|

||

"source": [

|

||

"# RoPE, ver. 2.0 (разработка)\n",

|

||

"\n",

|

||

"Первым делом нам нужно подредактировать класс `RoPE`. Сейчас он используется внутри класса `HeadAttention`, а будет использоваться внутри `MultiHeadAttention`.\n",

|

||

"\n",

|

||

"Единственное явное отличие старой версии от новой — что подается на вход (в метод `forward`):\n",

|

||

"\n",

|

||

"* Сейчас в него приходит тензор размера `batch_size × seq_len × head_size`.\n",

|

||

"* А будет приходить тензор размера `batch_size × num_heads × seq_len × head_size`.\n",

|

||

"\n",

|

||

"<p align=\"center\">\n",

|

||

" <img src=\"https://ucarecdn.com/3aefbeed-a4e8-49a2-a950-db7d4f413d3d/\" alt=\"rope\" width=\"250\" height=\"328\">\n",

|

||

"</p>\n"

|

||

]

|

||

},

|

||

{

|

||

"cell_type": "code",

|

||

"execution_count": 1,

|

||

"id": "4c10a0b2",

|

||

"metadata": {},

|

||

"outputs": [],

|

||

"source": [

|

||

"import torch\n",

|

||

"from torch import nn\n",

|

||

"from typing import Optional\n",

|

||

"\n",

|

||

"\n",

|

||

"class RoPE(nn.Module):\n",

|

||

"\n",

|

||

" def __init__(self, head_size: int, max_seq_len: int, base: int = 10_000):\n",

|

||

" super().__init__()\n",

|

||

" assert head_size % 2 == 0, \"head_size должен быть четным\"\n",

|

||

"\n",

|

||

" # Вычисление частот: θ_i = base^(-2i/d) для i ∈ [0, d/2-1]\n",

|

||

" freqs = 1.0 / (base ** (2 * torch.arange(head_size // 2).float() / head_size))\n",

|

||

"\n",

|

||

" # Позиции от 0 до max_seq_len-1\n",

|

||

" positions = torch.arange(max_seq_len).float()\n",

|

||

"\n",

|

||

" # Внешнее произведение: m * θ_i для всех позиций и частот\n",

|

||

" freq_matrix = positions.unsqueeze(1) * freqs.unsqueeze(0)\n",

|

||

"\n",

|

||

" # Предвычисление матриц косинусов и синусов\n",

|

||

" self.register_buffer(\"cos_matrix\", torch.cos(freq_matrix))\n",

|

||

" self.register_buffer(\"sin_matrix\", torch.sin(freq_matrix))\n",

|

||

"\n",

|

||

" def forward(self, x: torch.Tensor, start_pos: int = 0) -> torch.Tensor: # [batch_size × seq_len × head_size] [batch_size × num_heads × seq_len × head_size]\n",

|

||

" batch_size, num_heads, seq_len, head_size = x.shape\n",

|

||

"\n",

|

||

" # Берем нужную часть матриц и приводим к типу x\n",

|

||

" cos = self.cos_matrix[start_pos:start_pos+seq_len].to(x.dtype) # [seq_len, head_size//2]\n",

|

||

" sin = self.sin_matrix[start_pos:start_pos+seq_len].to(x.dtype) # [seq_len, head_size//2]\n",

|

||

"\n",

|

||

" # Явное изменение формы для broadcasting\n",

|

||

" cos = cos.reshape(1, 1, seq_len, head_size // 2)\n",

|

||

" sin = sin.reshape(1, 1, seq_len, head_size // 2)\n",

|

||

"\n",

|

||

" # Разделяем на четные и нечетные компоненты по ПОСЛЕДНЕМУ измерению\n",

|

||

" x_even = x[..., 0::2] # [batch_size, num_heads, seq_len, head_size//2]\n",

|

||

" x_odd = x[..., 1::2] # [batch_size, num_heads, seq_len, head_size//2]\n",

|

||

"\n",

|

||

" # Применяем поворот: q' = q * cos(mθ) + rotate(q) * sin(mθ)\n",

|

||

" x_rotated_even = x_even * cos - x_odd * sin\n",

|

||

" x_rotated_odd = x_even * sin + x_odd * cos\n",

|

||

"\n",

|

||

" # Объединяем обратно в исходную размерность\n",

|

||

" x_rotated = torch.stack([x_rotated_even, x_rotated_odd], dim=-1)\n",

|

||

" x_rotated = x_rotated.flatten(-2) # [batch_size, seq_len, head_size]\n",

|

||

"\n",

|

||

" return x_rotated\n"

|

||

]

|

||

},

|

||

{

|

||

"cell_type": "code",

|

||

"execution_count": 2,

|

||

"id": "e90c94a9",

|

||

"metadata": {},

|

||

"outputs": [

|

||

{

|

||

"name": "stdout",

|

||

"output_type": "stream",

|

||

"text": [

|

||

"✓ Форма корректна\n"

|

||

]

|

||

}

|

||

],

|

||

"source": [

|

||

"rope = RoPE(head_size=64, max_seq_len=512)\n",

|

||

"x = torch.randn(2, 8, 128, 64) # batch=2, heads=8, seq=128, dim=64\n",

|

||

"output = rope(x)\n",

|

||

"assert output.shape == x.shape\n",

|

||

"print(\"✓ Форма корректна\")"

|

||

]

|

||

},

|

||

{

|

||

"cell_type": "markdown",

|

||

"id": "ca50ac9c",

|

||

"metadata": {},

|

||

"source": [

|

||

"## MultiHeadAttention v2"

|

||

]

|

||

},

|

||

{

|

||

"cell_type": "code",

|

||

"execution_count": 3,

|

||

"id": "883383d2",

|

||

"metadata": {},

|

||

"outputs": [],

|

||

"source": [

|

||

"import torch\n",

|

||

"from torch import nn\n",

|

||

"import torch.nn.functional as F\n",

|

||

"from typing import Optional, Tuple\n",

|

||

"\n",

|

||

"\n",

|

||

"class MultiHeadAttentionV2(nn.Module):\n",

|

||

"\n",

|

||

" def __init__(\n",

|

||

" self,\n",

|

||

" num_heads: int,\n",

|

||

" emb_size: int,\n",

|

||

" head_size: int,\n",

|

||

" max_seq_len: int,\n",

|

||

" rope: RoPE = None,\n",

|

||

" dropout: float = 0.1,\n",

|

||

" ):\n",

|

||

" super().__init__()\n",

|

||

" self._num_heads = num_heads\n",

|

||

" self._head_size = head_size\n",

|

||

" self._max_seq_len = max_seq_len\n",

|

||

" self._rope = rope\n",

|

||

"\n",

|

||

" self._q = nn.Linear(emb_size, num_heads * head_size)\n",

|

||

" self._k = nn.Linear(emb_size, num_heads * head_size)\n",

|

||

" self._v = nn.Linear(emb_size, num_heads * head_size)\n",

|

||

"\n",

|

||

" # Создание causal маски\n",

|

||

" mask = torch.tril(torch.ones(max_seq_len, max_seq_len))\n",

|

||

" self.register_buffer(\n",

|

||

" \"_tril_mask\", mask.bool() if hasattr(torch, \"bool\") else mask.byte()\n",

|

||

" )\n",

|

||

" \n",

|

||

" self._layer = nn.Linear(head_size * num_heads, emb_size)\n",

|

||

" self._dropout = nn.Dropout(dropout)\n",

|

||

"\n",

|

||

" def forward(\n",

|

||

" self,\n",

|

||

" x: torch.Tensor,\n",

|

||

" mask: torch.Tensor = None,\n",

|

||

" use_cache: bool = True,\n",

|

||

" cache: list = None,\n",

|

||

" ):\n",

|

||

" batch_size, seq_len, emb_size = x.shape\n",

|

||

"\n",

|

||

" if seq_len > self._max_seq_len:\n",

|

||

" raise ValueError(\n",

|

||

" f\"Длина последовательности {seq_len} превышает максимум {self._max_seq_len}\"\n",

|

||

" )\n",

|

||

"\n",

|

||

" # Пропустите тензор x через матрицы Wq, Wk , Wv, чтобы получить матрицы запроса, ключа и значения.\n",

|

||

" k = self._k(x) # [B, T, hs]\n",

|

||

" q = self._q(x) # [B, T, hs]\n",

|

||

" v = self._v(x) # [B, T, hs]\n",

|

||

"\n",

|

||

" # Шаг 2: Изменение формы для multi-head\n",

|

||

" # [batch_size, seq_len, num_heads * head_size] \n",

|

||

" # -> [batch_size, seq_len, num_heads, head_size]\n",

|

||

" q = q.reshape(batch_size, seq_len, self._num_heads, self._head_size)\n",

|

||

" k = k.reshape(batch_size, seq_len, self._num_heads, self._head_size)\n",

|

||

" v = v.reshape(batch_size, seq_len, self._num_heads, self._head_size)\n",

|

||

" \n",

|

||

"\n",

|

||

" # 3. Transpose: [B, T, H, hs] -> [B, H, T, hs]\n",

|

||

" q = q.transpose(1, 2)\n",

|

||

" k = k.transpose(1, 2)\n",

|

||

" v = v.transpose(1, 2)\n",

|

||

"\n",

|

||

" start_pos = 0\n",

|

||

" if cache is not None:\n",

|

||

" k_cache, v_cache = cache\n",

|

||

" cache_len = k_cache.shape[2]\n",

|

||

" start_pos = cache_len\n",

|

||

" \n",

|

||

" # Пропустите матрицы запроса и ключа через экземпляр rope, чтобы выполнить поворот.\n",

|

||

" if self._rope is not None:\n",

|

||

" # ✅ Применяем RoPE к Q и K (НЕ к V!)\n",

|

||

" q = self._rope(q, start_pos=start_pos) # [B, T, hs]\n",

|

||

" k = self._rope(k, start_pos=start_pos) # [B, T, hs]\n",

|

||

"\n",

|

||

" # Если cache пришел, то объединяем кэш и одну строку из ключа и значения. Это будут новые key и value для последующих вычислений.\n",

|

||

" # 5. Кэширование (для autoregressive generation)\n",

|

||

" if cache is not None:\n",

|

||

" k_cache, v_cache = cache\n",

|

||

" k = torch.cat([k_cache, k], dim=2) # Concat по seq_len (dim=2)\n",

|

||

" v = torch.cat([v_cache, v], dim=2)\n",

|

||

"\n",

|

||

" # Перемножим матрицы запроса и ключа (транспонированную), чтобы вычислить матрицу внимания.\n",

|

||

" # И разделить все значения в матрице внимания на корень из head_size.\n",

|

||

" scores = q @ k.transpose(-2, -1) / (self._head_size ** 0.5)\n",

|

||

"\n",

|

||

" # Если cache пришел, то маску не накладываем. Иначе наложите на матрицу внимания треугольную маску, созданную при инициализации. Все скрытые значения должны быть приведены к минус бесконечности: float('-inf').\n",

|

||

" if cache is None:\n",

|

||

" scores = scores.masked_fill(\n",

|

||

" ~self._tril_mask[:seq_len, :seq_len], float(\"-inf\")\n",

|

||

" )\n",

|

||

"\n",

|

||

" # Применить к матрице внимания (построчно) функцию Softmax.\n",

|

||

" weights = F.softmax(scores, dim=-1)\n",

|

||

"\n",

|

||

" # Перемножим матрицу внимания и матрицу значения.\n",

|

||

" x_out = weights @ v # [B, T, hs]\n",

|

||

"\n",

|

||

" # Измените форму тензора на batch_size × seq_len × num_heads*head_size.\n",

|

||

" # Transpose обратно и concatenate heads\n",

|

||

" x_out = x_out.transpose(1, 2) # [B, T_q, H, hs]\n",

|

||

" x_out = x_out.contiguous() # Важно для reshape!\n",

|

||

" concatenated_attention = x_out.reshape(batch_size, seq_len, self._num_heads * self._head_size)\n",

|

||

"\n",

|

||

" #concatenated_attention = x_out.reshape(batch_size, seq_len, self._num_heads * self._head_size)\n",

|

||

"\n",

|

||

" # Пропустите получившийся тензор через последний линейный слой.\n",

|

||

" # 3. Проецируем в пространство эмбеддингов\n",

|

||

" projected_output = self._layer(concatenated_attention)\n",

|

||

"\n",

|

||

" # 4. Применяем dropout для регуляризации\n",

|

||

" final_output = self._dropout(projected_output)\n",

|

||

"\n",

|

||

" if use_cache is True:\n",

|

||

" return (final_output, (k, v))\n",

|

||

" else:\n",

|

||

" return (final_output, None)"

|

||

]

|

||

},

|

||

{

|

||

"cell_type": "code",

|

||

"execution_count": 4,

|

||

"id": "9ab78666",

|

||

"metadata": {},

|

||

"outputs": [

|

||

{

|

||

"name": "stdout",

|

||

"output_type": "stream",

|

||

"text": [

|

||

"✅ Test 1 - Output shape: torch.Size([2, 10, 512])\n",

|

||

"✅ Test 2 - First output shape: torch.Size([2, 5, 512])\n",

|

||

"✅ Test 2 - Second output shape: torch.Size([2, 1, 512])\n",

|

||

"\n",

|

||

"✅ Все тесты пройдены!\n"

|

||

]

|

||

}

|

||

],

|

||

"source": [

|

||

"\n",

|

||

"# Параметры\n",

|

||

"batch_size = 2\n",

|

||

"seq_len = 10\n",

|

||

"emb_size = 512\n",

|

||

"num_heads = 8\n",

|

||

"head_size = 64\n",

|

||

"max_seq_len = 512\n",

|

||

"\n",

|

||

" # Создание модели\n",

|

||

"rope = RoPE(head_size=head_size, max_seq_len=max_seq_len)\n",

|

||

"mha = MultiHeadAttentionV2(\n",

|

||

" num_heads=num_heads,\n",

|

||

" emb_size=emb_size,\n",

|

||

" head_size=head_size,\n",

|

||

" max_seq_len=max_seq_len,\n",

|

||

" rope=rope,\n",

|

||

" dropout=0.1,\n",

|

||

")\n",

|

||

"\n",

|

||

" # Тест 1: Обычный forward pass\n",

|

||

"x = torch.randn(batch_size, seq_len, emb_size)\n",

|

||

"output, cache = mha(x, use_cache=False)\n",

|

||

"print(f\"✅ Test 1 - Output shape: {output.shape}\") # [2, 10, 512]\n",

|

||

"assert output.shape == (batch_size, seq_len, emb_size)\n",

|

||

"\n",

|

||

" # Тест 2: С кэшированием\n",

|

||

"x1 = torch.randn(batch_size, 5, emb_size)\n",

|

||

"output1, cache1 = mha(x1, use_cache=True)\n",

|

||

"print(f\"✅ Test 2 - First output shape: {output1.shape}\") # [2, 5, 512]\n",

|

||

"\n",

|

||

"x2 = torch.randn(batch_size, 1, emb_size)\n",

|

||

"output2, cache2 = mha(x2, use_cache=True, cache=cache1)\n",

|

||

"print(f\"✅ Test 2 - Second output shape: {output2.shape}\") # [2, 1, 512]\n",

|

||

"\n",

|

||

"print(\"\\n✅ Все тесты пройдены!\")"

|

||

]

|

||

},

|

||

{

|

||

"cell_type": "markdown",

|

||

"id": "0a03c4d2",

|

||

"metadata": {},

|

||

"source": [

|

||

"### Промежуточный вариант Mistral"

|

||

]

|

||

},

|

||

{

|

||

"cell_type": "code",

|

||

"execution_count": 5,

|

||

"id": "645a3cf9",

|

||

"metadata": {},

|

||

"outputs": [],

|

||

"source": [

|

||

"import torch\n",

|

||

"from torch import nn\n",

|

||

"from torch import Tensor\n",

|

||

"import torch.nn.functional as F\n",

|

||

"from math import sqrt\n",

|

||

"\n",

|

||

"\n",

|

||

"\n",

|

||

"class SiLU(nn.Module):\n",

|

||

" def forward(self, x: torch.Tensor): # [batch_size × seq_len × emb_size]\n",

|

||

" return torch.sigmoid(x) * x\n",

|

||

" \n",

|

||

"class RMSNorm(nn.Module):\n",

|

||

" def __init__(self, dim: int, eps: float = 1e-6):\n",

|

||

" super().__init__()\n",

|

||

" self._eps = eps\n",

|

||

" self._w = nn.Parameter(torch.ones(dim))\n",

|

||

" \n",

|

||

" def forward(self, x: torch.Tensor): # [batch_size × seq_len × emb_size]\n",

|

||

" rms = (x.pow(2).mean(-1, keepdim=True) + self._eps) ** 0.5\n",

|

||

" norm_x = x / rms\n",

|

||

" return self._w * norm_x\n",

|

||

"\n",

|

||

"class SwiGLU(nn.Module):\n",

|

||

" def __init__(self, emb_size: int, dropout: float = 0.1):\n",

|

||

" super().__init__()\n",

|

||

"\n",

|

||

" self._gate = nn.Linear(emb_size, 4 * emb_size)\n",

|

||

" self._up = nn.Linear(emb_size, 4 * emb_size)\n",

|

||

" self._down = nn.Linear(4 * emb_size, emb_size)\n",

|

||

" self._activation = SiLU()\n",

|

||

" self._dropout = nn.Dropout(dropout)\n",

|

||

"\n",

|

||

" def forward(self, x: torch.Tensor): # [batch_size × seq_len × emb_size].\n",

|

||

" gate_out = self._gate(x) # [batch, seq, 4*emb]\n",

|

||

" activation_out = self._activation(gate_out) # [batch, seq, 4*emb]\n",

|

||

" up_out = self._up(x) # [batch, seq, 4*emb]\n",

|

||

" out = up_out * activation_out # поэлементное!\n",

|

||

" out = self._down(out) # [batch, seq, emb]\n",

|

||

" return self._dropout(out)\n",

|

||

"\n",

|

||

"\n",

|

||

"class TokenEmbeddings(nn.Module):\n",

|

||

" def __init__(self, vocab_size: int, emb_size: int):\n",

|

||

" super().__init__()\n",

|

||

" self._embedding = nn.Embedding(\n",

|

||

" num_embeddings=vocab_size,\n",

|

||

" embedding_dim=emb_size\n",

|

||

" )\n",

|

||

"\n",

|

||

" def forward(self, x: Tensor) -> Tensor:\n",

|

||

" return self._embedding(x)\n",

|

||

"\n",

|

||

" @property\n",

|

||

" def num_embeddings(self) -> int:\n",

|

||

" return self._embedding.num_embeddings\n",

|

||

"\n",

|

||

" @property\n",

|

||

" def embedding_dim(self) -> int:\n",

|

||

" return self._embedding.embedding_dim\n",

|

||

"\n",

|

||

"\n",

|

||

"class GELU(nn.Module):\n",

|

||

" def __init__(self):\n",

|

||

" super().__init__()\n",

|

||

" self.sqrt_2_over_pi = torch.sqrt(torch.tensor(2.0) / math.pi)\n",

|

||

" \n",

|

||

" def forward(self, x: torch.Tensor) -> torch.Tensor:\n",

|

||

" return 0.5 * x * (1 + torch.tanh(\n",

|

||

" self.sqrt_2_over_pi * (x + 0.044715 * torch.pow(x, 3))\n",

|

||

" ))\n",

|

||

" \n",

|

||

"class Decoder(nn.Module):\n",

|

||

" def __init__(self, \n",

|

||

" num_heads: int,\n",

|

||

" emb_size: int,\n",

|

||

" head_size: int,\n",

|

||

" max_seq_len: int,\n",

|

||

" rope: RoPE,\n",

|

||

" dropout: float = 0.1\n",

|

||

" ):\n",

|

||

" super().__init__()\n",

|

||

" self._heads = MultiHeadAttentionV2(\n",

|

||

" num_heads=num_heads, \n",

|

||

" emb_size=emb_size, \n",

|

||

" head_size=head_size, \n",

|

||

" max_seq_len=max_seq_len,\n",

|

||

" rope=rope,\n",

|

||

" dropout=dropout\n",

|

||

" )\n",

|

||

" self._ff = SwiGLU(emb_size=emb_size, dropout=dropout)\n",

|

||

" self._norm1 = RMSNorm(emb_size)\n",

|

||

" self._norm2 = RMSNorm(emb_size)\n",

|

||

"\n",

|

||

" def forward(self, x: torch.Tensor, mask: torch.Tensor = None, use_cache: bool = True, cache: list = None) -> torch.Tensor:\n",

|

||

" norm1_out = self._norm1(x)\n",

|

||

" attention, kv_caches = self._heads(norm1_out, mask, use_cache=use_cache, cache=cache)\n",

|

||

" out = attention + x\n",

|

||

" \n",

|

||

" norm2_out = self._norm2(out)\n",

|

||

" ffn_out = self._ff(norm2_out)\n",

|

||

"\n",

|

||

" if use_cache is True:\n",

|

||

" return (ffn_out + out, kv_caches)\n",

|

||

" else:\n",

|

||

" return (ffn_out + out, None)\n",

|

||

"\n",

|

||

"\n",

|

||

"\n",

|

||

"from torch import nn\n",

|

||

"import torch\n",

|

||

"import torch.nn.functional as F\n",

|

||

"\n",

|

||

"class Mistral(nn.Module):\n",

|

||

" def __init__(self,\n",

|

||

" vocab_size: int,\n",

|

||

" max_seq_len: int,\n",

|

||

" emb_size: int,\n",

|

||

" num_heads: int,\n",

|

||

" head_size: int,\n",

|

||

" num_layers: int,\n",

|

||

" dropout: float = 0.1,\n",

|

||

" device: str = 'cpu'\n",

|

||

" ):\n",

|

||

" super().__init__()\n",

|

||

" self._vocab_size = vocab_size\n",

|

||

" self._max_seq_len = max_seq_len\n",

|

||

" self._emb_size = emb_size\n",

|

||

" self._num_heads = num_heads\n",

|

||

" self._head_size = head_size\n",

|

||

" self._num_layers = num_layers\n",

|

||

" self._dropout = dropout\n",

|

||

" self._device = device\n",

|

||

" \n",

|

||

" self.validation_loss = None\n",

|

||

"\n",

|

||

" # Инициализация слоев\n",

|

||

" self._token_embeddings = TokenEmbeddings(\n",

|

||

" vocab_size=vocab_size, \n",

|

||

" emb_size=emb_size\n",

|

||

" )\n",

|

||

" self._position_embeddings = RoPE(\n",

|

||

" head_size=head_size,\n",

|

||

" max_seq_len=max_seq_len\n",

|

||

" )\n",

|

||

" #self._position_embeddings = PositionalEmbeddings(\n",

|

||

" # max_seq_len=max_seq_len, \n",

|

||

" # emb_size=emb_size\n",

|

||

" #)\n",

|

||

" self._dropout = nn.Dropout(dropout)\n",

|

||

" self._decoders = nn.ModuleList([Decoder(\n",

|

||

" num_heads=num_heads,\n",

|

||

" emb_size=emb_size,\n",

|

||

" head_size=head_size,\n",

|

||

" max_seq_len=max_seq_len,\n",

|

||

" rope=self._position_embeddings,\n",

|

||

" dropout=dropout \n",

|

||

" ) for _ in range(num_layers)])\n",

|

||

" self._norm = RMSNorm(emb_size)\n",

|

||

" self._linear = nn.Linear(emb_size, vocab_size)\n",

|

||

"\n",

|

||

" def forward(self, x: torch.Tensor, use_cache: bool = True, cache: list = None) -> tuple:\n",

|

||

" # Проверка длины последовательности (только при отсутствии кэша)\n",

|

||

" if cache is None and x.size(1) > self._max_seq_len:\n",

|

||

" raise ValueError(f\"Длина последовательности {x.size(1)} превышает максимальную {self.max_seq_len}\")\n",

|

||

" \n",

|

||

" # Эмбеддинги токенов и позиций\n",

|

||

" tok_out = self._token_embeddings(x) # [batch, seq_len, emb_size]\n",

|

||

" #pos_out = self._position_embeddings(x) # [batch, seq_len, emb_size]\n",

|

||

" \n",

|

||

" # Комбинирование\n",

|

||

" out = self._dropout(tok_out) # [batch, seq_len, emb_size]\n",

|

||

" \n",

|

||

" # Стек декодеров с передачей кэша\n",

|

||

" new_cache = []\n",

|

||

" for i, decoder in enumerate(self._decoders):\n",

|

||

" decoder_cache = cache[i] if cache is not None else None\n",

|

||

" decoder_result = decoder(out, use_cache=use_cache, cache=decoder_cache)\n",

|

||

"\n",

|

||

" # Извлекаем результат из кортежа\n",

|

||

" if use_cache:\n",

|

||

" out, decoder_new_cache = decoder_result\n",

|

||

" new_cache.append(decoder_new_cache)\n",

|

||

" else:\n",

|

||

" out = decoder_result[0]\n",

|

||

"\n",

|

||

" out = self._norm(out)\n",

|

||

" logits = self._linear(out)\n",

|

||

" \n",

|

||

" # Возвращаем результат с учетом use_cache\n",

|

||

" if use_cache:\n",

|

||

" return (logits, new_cache)\n",

|

||

" else:\n",

|

||

" return (logits, None)\n",

|

||

"\n",

|

||

" def generate(self,\n",

|

||

" x: torch.Tensor, \n",

|

||

" max_new_tokens: int, \n",

|

||

" do_sample: bool,\n",

|

||

" temperature: float = 1.0,\n",

|

||

" top_k: int = None,\n",

|

||

" top_p: float = None,\n",

|

||

" use_cache: bool = True\n",

|

||

" ) -> torch.Tensor:\n",

|

||

" cache = None\n",

|

||

"\n",

|

||

" for _ in range(max_new_tokens):\n",

|

||

" if use_cache and cache is not None:\n",

|

||

" # Используем кэш - передаем только последний токен\n",

|

||

" x_input = x[:, -1:] # [batch_size, 1]\n",

|

||

" else:\n",

|

||

" # Первая итерация или кэш отключен - передаем всю последовательность\n",

|

||

" x_input = x\n",

|

||

" \n",

|

||

" # Прямой проход с кэшем\n",

|

||

" logits, new_cache = self.forward(x_input, use_cache=use_cache, cache=cache)\n",

|

||

" \n",

|

||

" # Обновляем кэш для следующей итерации\n",

|

||

" if use_cache:\n",

|

||

" cache = new_cache\n",

|

||

"\n",

|

||

" last_logits = logits[:, -1, :] # [batch_size, vocab_size]\n",

|

||

"\n",

|

||

" # Масштабируем логиты температурой\n",

|

||

" if temperature > 0:\n",

|

||

" logits_scaled = last_logits / temperature\n",

|

||

" else:\n",

|

||

" logits_scaled = last_logits\n",

|

||

"\n",

|

||

" if do_sample == True and top_k != None:\n",

|

||

" _, topk_indices = torch.topk(logits_scaled, top_k, dim=-1)\n",

|

||

"\n",

|

||

" # # Заменим все НЕ top-k логиты на -inf\n",

|

||

" masked_logits = logits_scaled.clone()\n",

|

||

" vocab_size = logits_scaled.size(-1)\n",

|

||

"\n",

|

||

" # создаём маску: 1, если токен НЕ в topk_indices\n",

|

||

" mask = torch.ones_like(logits_scaled, dtype=torch.uint8)\n",

|

||

" mask.scatter_(1, topk_indices, 0) # 0 там, где top-k индексы\n",

|

||

" masked_logits[mask.byte()] = float('-inf')\n",

|

||

"\n",

|

||

" logits_scaled = masked_logits\n",

|

||

"\n",

|

||

" if do_sample == True and top_p != None:\n",

|

||

" # 1. Применим softmax, чтобы получить вероятности:\n",

|

||

" probs = F.softmax(logits_scaled, dim=-1) # [B, vocab_size]\n",

|

||

" # 2. Отсортируем токены по убыванию вероятностей:\n",

|

||

" sorted_probs, sorted_indices = torch.sort(probs, descending=True, dim=-1)\n",

|

||

" # 3. Посчитаем кумулятивную сумму вероятностей:\n",

|

||

" cum_probs = torch.cumsum(sorted_probs, dim=-1) # [B, vocab_size]\n",

|

||

" # 4. Определим маску: оставить токены, пока сумма < top_p\n",

|

||

" sorted_mask = (cum_probs <= top_p).byte() # [B, vocab_size]\n",

|

||

" # Гарантируем, что хотя бы первый токен останется\n",

|

||

" sorted_mask[:, 0] = 1\n",

|

||

" # 5. Преобразуем маску обратно в оригинальный порядок:\n",

|

||

" # Создаём полную маску из 0\n",

|

||

" mask = torch.zeros_like(probs, dtype=torch.uint8)\n",

|

||

" # Устанавливаем 1 в местах нужных токенов\n",

|

||

" mask.scatter_(dim=1, index=sorted_indices, src=sorted_mask)\n",

|

||

" # 6. Зануляем логиты токенов вне топ-p:\n",

|

||

" logits_scaled[~mask] = float('-inf')\n",

|

||

"\n",

|

||

" # 4. Применяем Softmax\n",

|

||

" probs = F.softmax(logits_scaled, dim=-1) # [batch_size, vocab_size]\n",

|

||

"\n",

|

||

"\n",

|

||

" if do_sample == True:\n",

|

||

" # 5. Если do_sample равен True, то отбираем токен случайно с помощью torch.multinomial\n",

|

||

" next_token = torch.multinomial(probs, num_samples=1) # [batch_size, 1]\n",

|

||

" else:\n",

|

||

" # 5. Если do_sample равен False, то выбираем токен с максимальной вероятностью\n",

|

||

" next_token = torch.argmax(probs, dim=-1, keepdim=True) # [batch_size, 1]\n",

|

||

" \n",

|

||

" # 6. Добавляем его к последовательности\n",

|

||

" x = torch.cat([x, next_token], dim=1) # [batch_size, seq_len+1]\n",

|

||

" return x\n",

|

||

"\n",

|

||

" def save(self, path):\n",

|

||

" torch.save({\n",

|

||

" 'model_state_dict': self.state_dict(),\n",

|

||

" 'vocab_size': self._vocab_size,\n",

|

||

" 'max_seq_len': self._max_seq_len,\n",

|

||

" 'emb_size': self._emb_size,\n",

|

||

" 'num_heads': self._num_heads,\n",

|

||

" 'head_size': self._head_size,\n",

|

||

" 'num_layers': self._num_layers\n",

|

||

" }, path)\n",

|

||

"\n",

|

||

" @classmethod\n",

|

||

" def load(cls, path, device):\n",

|

||

" checkpoint = torch.load(path, map_location=device)\n",

|

||

" model = cls(\n",

|

||

" vocab_size=checkpoint['vocab_size'],\n",

|

||

" max_seq_len=checkpoint['max_seq_len'],\n",

|

||

" emb_size=checkpoint['emb_size'],\n",

|

||

" num_heads=checkpoint['num_heads'],\n",

|

||

" head_size=checkpoint['head_size'],\n",

|

||

" num_layers=checkpoint['num_layers']\n",

|

||

" )\n",

|

||

" model.load_state_dict(checkpoint['model_state_dict'])\n",

|

||

" model.to(device)\n",

|

||

" return model\n",

|

||

"\n",

|

||

" @property\n",

|

||

" def max_seq_len(self) -> int:\n",

|

||

" return self._max_seq_len\n",

|

||

"\n",

|

||

"\n",

|

||

"\n",

|

||

"\n"

|

||

]

|

||

},

|

||

{

|

||

"cell_type": "markdown",

|

||

"id": "c6145261",

|

||

"metadata": {},

|

||

"source": [

|

||

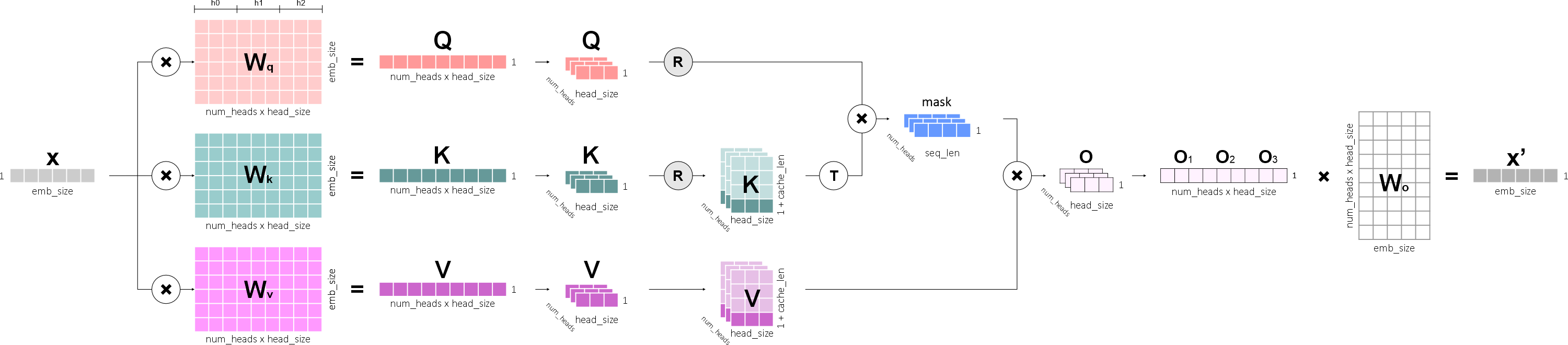

"# Grouped-Query Attention\n",

|

||

"\n",

|

||

"**Grouped-Query Attention (GQA)** — это оптимизированный механизм внимания.\n",

|

||

"\n",

|

||

"В чем суть: в классическом **Multi-Head Attention (MHA)** на каждую голову приходится по три вектора: запроса, ключа и значения. Эти вектора существуют только внутри голов, где они взаимодействуют между собой.\n",

|

||

"\n",

|

||

"<p align=\"center\">\n",

|

||

" <img src=\"https://ucarecdn.com/12f3e161-dbc8-4bf5-acb2-4f78cebfb3ee/\" alt=\"gqa_1\" width=\"399\" height=\"237\">\n",

|

||

"</p>\n",

|

||

"\n",

|

||

"А в **GQA** предложили сэкономить на матрицах: разделить головы на группы и на каждую группу назначить по одному вектору ключа и значения.\n",

|

||

"При этом на каждую голову по-прежнему приходится один вектор запроса.\n",

|

||

"\n",

|

||

"<p align=\"center\">\n",

|

||

" <img src=\"https://ucarecdn.com/3e3dce50-29ee-4705-9e2d-84478d581c34/\" alt=\"gqa_2\" width=\"399\" height=\"237\">\n",

|

||

"</p>\n",

|

||

"\n",

|

||

"Что мы в результате получаем:\n",

|

||

"\n",

|

||

"* **Скорость:** генерация текста происходит на 30–40% быстрее, чем в MHA.\n",

|

||

"* **Память:** экономия места в Q/G раз (где Q — количество векторов запроса, G — количество групп). Также снижается трафик памяти до 8–10 раз по сравнению с MHA.\n",

|

||

"* **Качество:** близко к MHA.\n",

|

||

"\n",

|

||

"> В первом Mistral было 32 Query Heads и 8 K/V Heads.\n",

|

||

"\n",

|

||

"---\n",

|

||

"\n",

|

||

"### Как это работает технически?\n",

|

||

"\n",

|

||

"На первых шагах этого урока мы переделали механизм внимания.\n",

|

||

"Избавились от отдельных голов и сделали единое пространство для вычислений всех голов одновременно.\n",

|

||

"Каждая голова теперь представлена отдельными измерениями в одном длинном тензоре.\n",

|

||

"Вот как это выглядит (здесь представлена часть механизма внимания):\n",

|

||

"\n",

|

||

"<p align=\"center\">\n",

|

||

" <img src=\"https://ucarecdn.com/ff92d0ec-987e-48e3-b108-dad75e55866e/\" alt=\"gqa_3\" width=\"800\" height=\"278\">\n",

|

||

"</p>\n",

|

||

"\n",

|

||

"* На вход мы получаем тензор размером `batch_size × seq_len × emb_size`.\n",

|

||

"* Тензор перемножается с тремя матрицами весов: $W_q$, $W_k$, $W_v$, каждая размером `emb_size × num_heads * head_size`.\n",

|

||

"* После перемножения получаются три матрицы: запроса (query), ключа (key) и значения (value), каждая размером `batch_size × seq_len × num_heads * head_size`.\n",

|

||

"* Переводим матрицы запроса (query), ключа (key) и значения (value) в форму четырехмерного тензора:\n",

|

||

" `batch_size × num_heads × seq_len × head_size`.\n",

|

||

"* Выполняем поворот тензоров запроса (query) и ключа (key).\n",

|

||

"* Дальше ничего не меняется...\n",

|

||

"\n",

|

||

"---\n",

|

||

"\n",

|

||

"И вот как нам надо это переделать:\n",

|

||

"\n",

|

||

"<p align=\"center\">\n",

|

||

" <img src=\"https://ucarecdn.com/4b5bd1e6-3aaa-4fd9-8ceb-6f521f5a23a6/\" alt=\"gqa_4\" width=\"800\" height=\"279\">\n",

|

||

"</p>\n",

|

||

"\n",

|

||

"* На вход мы получаем тензор размером `batch_size × seq_len × emb_size`.\n",

|

||

"* Тензор перемножается с тремя матрицами весов: $W_q$, $W_k$, $W_v$:\n",

|

||

"\n",

|

||

" * $W_q$ — такого же размера, как и раньше: `emb_size × num_q_heads * head_size`.\n",

|

||

" * А вот $W_k$ и $W_v$ уменьшились на количество K/V голов: `emb_size × num_kv_heads * head_size`.\n",

|

||

"* После перемножения получаются три матрицы:\n",

|

||

"\n",

|

||

" * **Запрос (query)** — `batch_size × seq_len × num_q_heads * head_size`.\n",

|

||

" * **Ключ (key)** и **значение (value)** — `batch_size × seq_len × num_kv_heads * head_size`.\n",

|

||

"* Переводим их в форму четырехмерного тензора:\n",

|

||

"\n",

|

||

" * **Query:** `batch_size × num_q_heads × seq_len × head_size`.\n",

|

||

" * **Key, Value:** `batch_size × num_kv_heads × seq_len × head_size`.\n",

|

||

"* Выполняем поворот тензоров запроса (query) и ключа (key).\n",

|

||

"* Затем проводим **уникальную операцию — размножение**.\n",

|

||

" Нам нужно произвести матричные операции с тензорами, но у них разный размер, что делает перемножение невозможным.\n",

|

||

" Чтобы исправить это, нужно продублировать головы в тензорах **query** и **key**, чтобы их размер стал одинаковым:\n",

|

||

" `batch_size × num_q_heads × seq_len × head_size`.\n",

|

||

" Копии располагаются последовательно — после каждой головы идут её дубликаты.\n",

|

||

"* Дальнейшие операции остаются без изменений.\n",

|

||

"\n",

|

||

"> Может показаться, что с точки зрения использования памяти мы пришли к тому, с чего начали.\n",

|

||

"> У нас тензор K и V получился такого же размера, как и тензор Q.\n",

|

||

"> Но это только по внешнему виду. Расширение происходит **виртуально** — в памяти место не дублируется.\n",

|

||

"\n",

|

||

"---\n",

|

||

"\n",

|

||

"Ну и версия для кэша:\n",

|

||

"\n",

|

||

"<p align=\"center\">\n",

|

||

" <img src=\"https://ucarecdn.com/1f66d02b-ff97-4a33-ae76-297eb002533d/\" alt=\"gqa_5\" width=\"800\" height=\"281\">\n",

|

||

"</p>\n",

|

||

"\n",

|

||

"Единственное отличие: после операции поворота и до размножения голов мы склеиваем текущий токен с кэшем.\n",

|

||

"\n",

|

||

"---\n",

|

||

"\n",

|

||

"### Почему именно K и V?\n",

|

||

"\n",

|

||

"Любопытный читатель спросит: а почему мы сократили количество именно **K** и **V**?\n",

|

||

"Почему не **Q и V**, или не **Q и K**?\n",

|

||

"\n",

|

||

"Дело в роли, которую играют вектора. Уже знакомая нам аналогия с библиотекой:\n",

|

||

"\n",

|

||

"* **Query** — это читатели с разными запросами (один ищет научную книгу, другой — художественную).\n",

|

||

"* **Key** — это каталог карточек (индексы книг).\n",

|

||

"* **Value** — это сами книги на полках.\n",

|

||

"\n",

|

||

"У каждого читателя свой уникальный запрос (**Q**), очевидно, их нельзя копировать на других читателей.\n",

|

||

"Одни и те же каталог (**K**) и книги (**V**) разделены на секции (группы).\n",

|

||

"Несколько читателей могут использовать одну секцию каталога/книг, но их запросы остаются уникальными.\n",

|

||

"\n"

|

||

]

|

||

},

|

||

{

|

||

"cell_type": "markdown",

|

||

"id": "aefe1ef9",

|

||

"metadata": {},

|

||

"source": [

|

||

"### Grouped-Query Attention (разработка)"

|

||

]

|

||

},

|

||

{

|

||

"cell_type": "code",

|

||

"execution_count": 6,

|

||

"id": "84a3a599",

|

||

"metadata": {},

|

||

"outputs": [],

|

||

"source": [

|

||

"import torch\n",

|

||

"from torch import nn\n",

|

||

"import torch.nn.functional as F\n",

|

||

"from typing import Optional, Tuple\n",

|

||

"\n",

|

||

"\n",

|

||

"class GroupedQueryAttention(nn.Module):\n",

|

||

"\n",

|

||

" def __init__(\n",

|

||

" self,\n",

|

||

" num_heads: int,\n",

|

||

" num_kv_heads: int,\n",

|

||

" emb_size: int,\n",

|

||

" head_size: int,\n",

|

||

" max_seq_len: int,\n",

|

||

" rope: RoPE = None,\n",

|

||

" dropout: float = 0.1,\n",

|

||

" ):\n",

|

||

" super().__init__()\n",

|

||

" self._num_heads = num_heads\n",

|

||

" self._num_kv_heads = num_kv_heads\n",

|

||

" self._head_size = head_size\n",

|

||

" self._max_seq_len = max_seq_len\n",

|

||

" self._rope = rope\n",

|

||

"\n",

|

||

" self._q = nn.Linear(emb_size, num_heads * head_size)\n",

|

||

" self._k = nn.Linear(emb_size, num_kv_heads * head_size)\n",

|

||

" self._v = nn.Linear(emb_size, num_kv_heads * head_size)\n",

|

||

"\n",

|

||

" # Создание causal маски\n",

|

||

" mask = torch.tril(torch.ones(max_seq_len, max_seq_len))\n",

|

||

" self.register_buffer(\n",

|

||

" \"_tril_mask\", mask.bool() if hasattr(torch, \"bool\") else mask.byte()\n",

|

||

" )\n",

|

||

" \n",

|

||

" self._layer = nn.Linear(head_size * num_heads, emb_size)\n",

|

||

" self._dropout = nn.Dropout(dropout)\n",

|

||

"\n",

|

||

" def forward(\n",

|

||

" self,\n",

|

||

" x: torch.Tensor,\n",

|

||

" mask: torch.Tensor = None,\n",

|

||

" use_cache: bool = True,\n",

|

||

" cache: list = None,\n",

|

||

" ):\n",

|

||

" batch_size, seq_len, emb_size = x.shape\n",

|

||

"\n",

|

||

" if seq_len > self._max_seq_len:\n",

|

||

" raise ValueError(\n",

|

||

" f\"Длина последовательности {seq_len} превышает максимум {self._max_seq_len}\"\n",

|

||

" )\n",

|

||

"\n",

|

||

" # Пропустите тензор x через матрицы Wq, Wk , Wv, чтобы получить матрицы запроса, ключа и значения.\n",

|

||

" k = self._k(x) # [B, T, hs]\n",

|

||

" q = self._q(x) # [B, T, hs]\n",

|

||

" v = self._v(x) # [B, T, hs]\n",

|

||

"\n",

|

||

" # Шаг 2: Изменение формы для multi-head\n",

|

||

" # [batch_size, seq_len, num_heads * head_size] \n",

|

||

" # -> [batch_size, seq_len, num_heads, head_size]\n",

|

||

" # Измените форму запроса (query) на batch_size × num_q_heads × seq_len × head_size.\n",

|

||

" q = q.reshape(batch_size, seq_len, self._num_heads, self._head_size)\n",

|

||

"\n",

|

||

" # Измените форму ключа (key) и значения (value) на batch_size × num_kv_heads × seq_len × head_size.\n",

|

||

" k = k.reshape(batch_size, seq_len, self._num_kv_heads, self._head_size)\n",

|

||

" v = v.reshape(batch_size, seq_len, self._num_kv_heads, self._head_size)\n",

|

||

" \n",

|

||

"\n",

|

||

" # 3. Transpose: [B, T, H, hs] -> [B, H, T, hs]\n",

|

||

" q = q.transpose(1, 2)\n",

|

||

" k = k.transpose(1, 2)\n",

|

||

" v = v.transpose(1, 2)\n",

|

||

"\n",

|

||

" start_pos = 0\n",

|

||

" if cache is not None:\n",

|

||

" k_cache, v_cache = cache\n",

|

||

" cache_len = k_cache.shape[2]\n",

|

||

" start_pos = cache_len\n",

|

||

" \n",

|

||

" # Пропустите матрицы запроса и ключа через экземпляр rope, чтобы выполнить поворот.\n",

|

||

" if self._rope is not None:\n",

|

||

" # ✅ Применяем RoPE к Q и K (НЕ к V!)\n",

|

||

" q = self._rope(q, start_pos=start_pos) # [B, T, hs]\n",

|

||

" k = self._rope(k, start_pos=start_pos) # [B, T, hs]\n",

|

||

"\n",

|

||

" # Если cache пришел, то объединяем кэш и одну строку из ключа и значения. Это будут новые key и value для последующих вычислений.\n",

|

||

" # 5. Кэширование (для autoregressive generation)\n",

|

||

" if cache is not None:\n",

|

||

" k_cache, v_cache = cache\n",

|

||

" k = torch.cat([k_cache, k], dim=2) # Concat по seq_len (dim=2)\n",

|

||

" v = torch.cat([v_cache, v], dim=2)\n",

|

||

"\n",

|

||

" # Если use_cache == True, то сохраните матрицы ключа и значения для кэша (это нужно сделать до дублирования голов).\n",

|

||

" if use_cache == True:\n",

|

||

" kv_cache = (k, v)\n",

|

||

"\n",

|

||

" # Продублируйте головы в тензорах ключа (key) и значения (value), чтобы получился тензор размера на batch_size × num_q_heads × seq_len × head_size.\n",

|

||

" k = self._repeat_kv_heads(k, self._num_heads, self._num_kv_heads)\n",

|

||

" v = self._repeat_kv_heads(v, self._num_heads, self._num_kv_heads)\n",

|

||

"\n",

|

||

" # Перемножим матрицы запроса и ключа (транспонированную), чтобы вычислить матрицу внимания.\n",

|

||

" # И разделить все значения в матрице внимания на корень из head_size.\n",

|

||

" scores = q @ k.transpose(-2, -1) / (self._head_size ** 0.5)\n",

|

||

"\n",

|

||

" # Если cache пришел, то маску не накладываем. Иначе наложите на матрицу внимания треугольную маску, созданную при инициализации. Все скрытые значения должны быть приведены к минус бесконечности: float('-inf').\n",

|

||

" if cache is None:\n",

|

||

" scores = scores.masked_fill(\n",

|

||

" ~self._tril_mask[:seq_len, :seq_len], float(\"-inf\")\n",

|

||

" )\n",

|

||

"\n",

|

||

" # Применить к матрице внимания (построчно) функцию Softmax.\n",

|

||

" weights = F.softmax(scores, dim=-1)\n",

|

||

"\n",

|

||

" # Перемножим матрицу внимания и матрицу значения.\n",

|

||

" x_out = weights @ v # [B, T, hs]\n",

|

||

"\n",

|

||

" # Измените форму тензора на batch_size × seq_len × num_heads*head_size.\n",

|

||

" # Transpose обратно и concatenate heads\n",

|

||

" x_out = x_out.transpose(1, 2) # [B, T_q, H, hs]\n",

|

||

" x_out = x_out.contiguous() # Важно для reshape!\n",

|

||

" concatenated_attention = x_out.reshape(batch_size, seq_len, self._num_heads * self._head_size)\n",

|

||

"\n",

|

||

" #concatenated_attention = x_out.reshape(batch_size, seq_len, self._num_heads * self._head_size)\n",

|

||

"\n",

|

||

" # Пропустите получившийся тензор через последний линейный слой.\n",

|

||

" # 3. Проецируем в пространство эмбеддингов\n",

|

||

" projected_output = self._layer(concatenated_attention)\n",

|

||

"\n",

|

||

" # 4. Применяем dropout для регуляризации\n",

|

||

" final_output = self._dropout(projected_output)\n",

|

||

"\n",

|

||

" if use_cache is True:\n",

|

||

" return (final_output, kv_cache)\n",

|

||

" else:\n",

|

||

" return (final_output, None)\n",

|

||

"\n",

|

||

" def _repeat_kv_heads(\n",

|

||

" self,\n",

|

||

" kv: torch.Tensor,\n",

|

||

" num_q_heads: int,\n",

|

||

" num_kv_heads: int\n",

|

||

" ) -> torch.Tensor:\n",

|

||

" \"\"\"\n",

|

||

" Дублирует головы K/V для соответствия количеству голов Q.\n",

|

||

"\n",

|

||

" Args:\n",

|

||

" kv: [batch_size, num_kv_heads, seq_len, head_size]\n",

|

||

" num_q_heads: Количество голов Query (например, 8)\n",

|

||

" num_kv_heads: Количество голов Key/Value (например, 2)\n",

|

||

"\n",

|

||

" Returns:\n",

|

||

" [batch_size, num_q_heads, seq_len, head_size]\n",

|

||

"\n",

|

||

" Example:\n",

|

||

" num_q_heads=8, num_kv_heads=2\n",

|

||

" Каждая голова KV дублируется 4 раза:\n",

|

||

" [KV0, KV1] -> [KV0, KV0, KV0, KV0, KV1, KV1, KV1, KV1]\n",

|

||

" \"\"\"\n",

|

||

" batch_size, num_kv_heads, seq_len, head_size = kv.shape\n",

|

||

"\n",

|

||

" if num_q_heads == num_kv_heads:\n",

|

||

" # Нет необходимости дублировать\n",

|

||

" return kv\n",

|

||

"\n",

|

||

" # Вычисляем сколько раз нужно повторить каждую голову\n",

|

||

" num_repeats = num_q_heads // num_kv_heads\n",

|

||

"\n",

|

||

" # repeat_interleave дублирует каждую голову num_repeats раз\n",

|

||

" # [B, num_kv_heads, S, hs] -> [B, num_q_heads, S, hs]\n",

|

||

" # [B, num_kv_heads, S, hs] -> [B, num_kv_heads, 1, S, hs]\n",

|

||

" kv = kv.unsqueeze(2)\n",

|

||

" \n",

|

||

" # [B, num_kv_heads, 1, S, hs] -> [B, num_kv_heads, num_repeats, S, hs]\n",

|

||

" kv = kv.repeat(1, 1, num_repeats, 1, 1)\n",

|

||

" \n",

|

||

" # [B, num_kv_heads, num_repeats, S, hs] -> [B, num_q_heads, S, hs]\n",

|

||

" kv = kv.reshape(batch_size, num_q_heads, seq_len, head_size)\n",

|

||

" \n",

|

||

"\n",

|

||

" return kv"

|

||

]

|

||

},

|

||

{

|

||

"cell_type": "markdown",

|

||

"id": "e56522e7",

|

||

"metadata": {},

|

||

"source": [

|

||

"### Промежуточный вариант Mistral"

|

||

]

|

||

},

|

||

{

|

||

"cell_type": "code",

|

||

"execution_count": 7,

|

||

"id": "c35a8b6f",

|

||

"metadata": {},

|

||

"outputs": [],

|

||

"source": [

|

||

"import torch\n",

|

||

"from torch import nn\n",

|

||

"from torch import Tensor\n",

|

||

"import torch.nn.functional as F\n",

|

||

"from math import sqrt\n",

|

||

"\n",

|

||

"\n",

|

||

"\n",

|

||

"class SiLU(nn.Module):\n",

|

||

" def forward(self, x: torch.Tensor): # [batch_size × seq_len × emb_size]\n",

|

||

" return torch.sigmoid(x) * x\n",

|

||

" \n",

|

||

"class RMSNorm(nn.Module):\n",

|

||

" def __init__(self, dim: int, eps: float = 1e-6):\n",

|

||

" super().__init__()\n",

|

||

" self._eps = eps\n",

|

||

" self._w = nn.Parameter(torch.ones(dim))\n",

|

||

" \n",

|

||

" def forward(self, x: torch.Tensor): # [batch_size × seq_len × emb_size]\n",

|

||

" rms = (x.pow(2).mean(-1, keepdim=True) + self._eps) ** 0.5\n",

|

||

" norm_x = x / rms\n",

|

||

" return self._w * norm_x\n",

|

||

"\n",

|

||

"class SwiGLU(nn.Module):\n",

|

||

" def __init__(self, emb_size: int, dropout: float = 0.1):\n",

|

||

" super().__init__()\n",

|

||

"\n",

|

||

" self._gate = nn.Linear(emb_size, 4 * emb_size)\n",

|

||

" self._up = nn.Linear(emb_size, 4 * emb_size)\n",

|

||

" self._down = nn.Linear(4 * emb_size, emb_size)\n",

|

||

" self._activation = SiLU()\n",

|

||

" self._dropout = nn.Dropout(dropout)\n",

|

||

"\n",

|

||

" def forward(self, x: torch.Tensor): # [batch_size × seq_len × emb_size].\n",

|

||

" gate_out = self._gate(x) # [batch, seq, 4*emb]\n",

|

||

" activation_out = self._activation(gate_out) # [batch, seq, 4*emb]\n",

|

||

" up_out = self._up(x) # [batch, seq, 4*emb]\n",

|

||

" out = up_out * activation_out # поэлементное!\n",

|

||

" out = self._down(out) # [batch, seq, emb]\n",

|

||

" return self._dropout(out)\n",

|

||

"\n",

|

||

"\n",

|

||

"class TokenEmbeddings(nn.Module):\n",

|

||

" def __init__(self, vocab_size: int, emb_size: int):\n",

|

||

" super().__init__()\n",

|

||

" self._embedding = nn.Embedding(\n",

|

||

" num_embeddings=vocab_size,\n",

|

||

" embedding_dim=emb_size\n",

|

||

" )\n",

|

||

"\n",

|

||

" def forward(self, x: Tensor) -> Tensor:\n",

|

||

" return self._embedding(x)\n",

|

||

"\n",

|

||

" @property\n",

|

||

" def num_embeddings(self) -> int:\n",

|

||

" return self._embedding.num_embeddings\n",

|

||

"\n",

|

||

" @property\n",

|

||

" def embedding_dim(self) -> int:\n",

|

||

" return self._embedding.embedding_dim\n",

|

||

"\n",

|

||

"\n",

|

||

"class GELU(nn.Module):\n",

|

||

" def __init__(self):\n",

|

||

" super().__init__()\n",

|

||

" self.sqrt_2_over_pi = torch.sqrt(torch.tensor(2.0) / math.pi)\n",

|

||

" \n",

|

||

" def forward(self, x: torch.Tensor) -> torch.Tensor:\n",

|

||

" return 0.5 * x * (1 + torch.tanh(\n",

|

||

" self.sqrt_2_over_pi * (x + 0.044715 * torch.pow(x, 3))\n",

|

||

" ))\n",

|

||

"\n",

|

||

"\n",

|

||

"class Decoder(nn.Module):\n",

|

||

" def __init__(self, \n",

|

||

" num_q_heads: int,\n",

|

||

" num_kv_heads: int,\n",

|

||

" emb_size: int,\n",

|

||

" head_size: int,\n",

|

||

" max_seq_len: int,\n",

|

||

" rope: RoPE,\n",

|

||

" dropout: float = 0.1\n",

|

||

" ):\n",

|

||

" super().__init__()\n",

|

||

" self._heads = GroupedQueryAttention(\n",

|

||

" num_heads=num_q_heads, \n",

|

||

" num_kv_heads=num_kv_heads,\n",

|

||

" emb_size=emb_size, \n",

|

||

" head_size=head_size, \n",

|

||

" max_seq_len=max_seq_len,\n",

|

||

" rope=rope,\n",

|

||

" dropout=dropout\n",

|

||

" )\n",

|

||

" self._ff = SwiGLU(emb_size=emb_size, dropout=dropout)\n",

|

||

" self._norm1 = RMSNorm(emb_size)\n",

|

||

" self._norm2 = RMSNorm(emb_size)\n",

|

||

"\n",

|

||

" def forward(self, x: torch.Tensor, mask: torch.Tensor = None, use_cache: bool = True, cache: list = None) -> torch.Tensor:\n",

|

||

" norm1_out = self._norm1(x)\n",

|

||

" attention, kv_caches = self._heads(norm1_out, mask, use_cache=use_cache, cache=cache)\n",

|

||

" out = attention + x\n",

|

||

" \n",

|

||

" norm2_out = self._norm2(out)\n",

|

||

" ffn_out = self._ff(norm2_out)\n",

|

||

"\n",

|

||

" if use_cache is True:\n",

|

||

" return (ffn_out + out, kv_caches)\n",

|

||

" else:\n",

|

||

" return (ffn_out + out, None)\n",

|

||

"\n",

|

||

"\n",

|

||

"\n",

|

||

"from torch import nn\n",

|

||

"import torch\n",

|

||

"import torch.nn.functional as F\n",

|

||

"\n",

|

||

"class Mistral(nn.Module):\n",

|

||

" def __init__(self,\n",

|

||

" vocab_size: int,\n",

|

||

" max_seq_len: int,\n",

|

||

" emb_size: int,\n",

|

||

" num_q_heads: int,\n",

|

||

" num_kv_heads: int,\n",

|

||

" head_size: int,\n",

|

||

" num_layers: int,\n",

|

||

" dropout: float = 0.1,\n",

|

||

" device: str = 'cpu'\n",

|

||

" ):\n",

|

||

" super().__init__()\n",

|

||

" self._vocab_size = vocab_size\n",

|

||

" self._max_seq_len = max_seq_len\n",

|

||

" self._emb_size = emb_size\n",

|

||

" self._num_q_heads = num_q_heads\n",

|

||

" self._num_kv_heads = num_kv_heads\n",

|

||

" self._head_size = head_size\n",

|

||

" self._num_layers = num_layers\n",

|

||

" self._dropout = dropout\n",

|

||

" self._device = device\n",

|

||

" \n",

|

||

" self.validation_loss = None\n",

|

||

"\n",

|

||

" # Инициализация слоев\n",

|

||

" self._token_embeddings = TokenEmbeddings(\n",

|

||

" vocab_size=vocab_size, \n",

|

||

" emb_size=emb_size\n",

|

||

" )\n",

|

||

" self._position_embeddings = RoPE(\n",

|

||

" head_size=head_size,\n",

|

||

" max_seq_len=max_seq_len\n",

|

||

" )\n",

|

||

" #self._position_embeddings = PositionalEmbeddings(\n",

|

||

" # max_seq_len=max_seq_len, \n",

|

||

" # emb_size=emb_size\n",

|

||

" #)\n",

|

||

" self._dropout = nn.Dropout(dropout)\n",

|

||

" self._decoders = nn.ModuleList([Decoder(\n",

|

||

" num_q_heads=num_q_heads,\n",

|

||

" num_kv_heads=num_kv_heads,\n",

|

||

" emb_size=emb_size,\n",

|

||

" head_size=head_size,\n",

|

||

" max_seq_len=max_seq_len,\n",

|

||

" rope=self._position_embeddings,\n",

|

||

" dropout=dropout \n",

|

||

" ) for _ in range(num_layers)])\n",

|

||

" self._norm = RMSNorm(emb_size)\n",

|

||

" self._linear = nn.Linear(emb_size, vocab_size)\n",

|

||

"\n",

|

||

" def forward(self, x: torch.Tensor, use_cache: bool = True, cache: list = None) -> tuple:\n",

|

||

" # Проверка длины последовательности (только при отсутствии кэша)\n",

|

||

" if cache is None and x.size(1) > self._max_seq_len:\n",

|

||

" raise ValueError(f\"Длина последовательности {x.size(1)} превышает максимальную {self.max_seq_len}\")\n",

|

||

" \n",

|

||

" # Эмбеддинги токенов и позиций\n",

|

||

" tok_out = self._token_embeddings(x) # [batch, seq_len, emb_size]\n",

|

||

" #pos_out = self._position_embeddings(x) # [batch, seq_len, emb_size]\n",

|

||

" \n",

|

||

" # Комбинирование\n",

|

||

" out = self._dropout(tok_out) # [batch, seq_len, emb_size]\n",

|

||

" \n",

|

||

" # Стек декодеров с передачей кэша\n",

|

||

" new_cache = []\n",

|

||

" for i, decoder in enumerate(self._decoders):\n",

|

||

" decoder_cache = cache[i] if cache is not None else None\n",

|

||

" decoder_result = decoder(out, use_cache=use_cache, cache=decoder_cache)\n",

|

||

"\n",

|

||

" # Извлекаем результат из кортежа\n",

|

||

" if use_cache:\n",

|

||

" out, decoder_new_cache = decoder_result\n",

|

||

" new_cache.append(decoder_new_cache)\n",

|

||

" else:\n",

|

||

" out = decoder_result[0]\n",

|

||

"\n",

|

||

" out = self._norm(out)\n",

|

||

" logits = self._linear(out)\n",

|

||

" \n",

|

||

" # Возвращаем результат с учетом use_cache\n",

|

||

" if use_cache:\n",

|

||

" return (logits, new_cache)\n",

|

||

" else:\n",

|

||

" return (logits, None)\n",

|

||

"\n",

|

||

" def generate(self,\n",

|

||

" x: torch.Tensor, \n",

|

||

" max_new_tokens: int, \n",

|

||

" do_sample: bool,\n",

|

||

" temperature: float = 1.0,\n",

|

||

" top_k: int = None,\n",

|

||

" top_p: float = None,\n",

|

||

" use_cache: bool = True\n",

|

||

" ) -> torch.Tensor:\n",

|

||

" cache = None\n",

|

||

"\n",

|

||

" for _ in range(max_new_tokens):\n",

|

||

" if use_cache and cache is not None:\n",

|

||

" # Используем кэш - передаем только последний токен\n",

|

||

" x_input = x[:, -1:] # [batch_size, 1]\n",

|

||

" else:\n",

|

||

" # Первая итерация или кэш отключен - передаем всю последовательность\n",

|

||

" x_input = x\n",

|

||

" \n",

|

||

" # Прямой проход с кэшем\n",

|

||

" logits, new_cache = self.forward(x_input, use_cache=use_cache, cache=cache)\n",

|

||

" \n",

|

||

" # Обновляем кэш для следующей итерации\n",

|

||

" if use_cache:\n",

|

||

" cache = new_cache\n",

|

||

"\n",

|

||

" last_logits = logits[:, -1, :] # [batch_size, vocab_size]\n",

|

||

"\n",

|

||

" # Масштабируем логиты температурой\n",

|

||

" if temperature > 0:\n",

|

||

" logits_scaled = last_logits / temperature\n",

|

||

" else:\n",

|

||

" logits_scaled = last_logits\n",

|

||

"\n",

|

||

" if do_sample == True and top_k != None:\n",

|

||

" _, topk_indices = torch.topk(logits_scaled, top_k, dim=-1)\n",

|

||

"\n",

|

||

" # # Заменим все НЕ top-k логиты на -inf\n",

|

||

" masked_logits = logits_scaled.clone()\n",

|

||

" vocab_size = logits_scaled.size(-1)\n",

|

||

"\n",

|

||

" # создаём маску: 1, если токен НЕ в topk_indices\n",

|

||

" mask = torch.ones_like(logits_scaled, dtype=torch.uint8)\n",

|

||

" mask.scatter_(1, topk_indices, 0) # 0 там, где top-k индексы\n",

|

||

" masked_logits[mask.byte()] = float('-inf')\n",

|

||

"\n",

|

||

" logits_scaled = masked_logits\n",

|

||

"\n",

|

||

" if do_sample == True and top_p != None:\n",

|

||

" # 1. Применим softmax, чтобы получить вероятности:\n",

|

||

" probs = F.softmax(logits_scaled, dim=-1) # [B, vocab_size]\n",

|

||

" # 2. Отсортируем токены по убыванию вероятностей:\n",

|

||

" sorted_probs, sorted_indices = torch.sort(probs, descending=True, dim=-1)\n",

|

||

" # 3. Посчитаем кумулятивную сумму вероятностей:\n",

|

||

" cum_probs = torch.cumsum(sorted_probs, dim=-1) # [B, vocab_size]\n",

|

||

" # 4. Определим маску: оставить токены, пока сумма < top_p\n",

|

||

" sorted_mask = (cum_probs <= top_p).byte() # [B, vocab_size]\n",

|

||

" # Гарантируем, что хотя бы первый токен останется\n",

|

||

" sorted_mask[:, 0] = 1\n",

|

||

" # 5. Преобразуем маску обратно в оригинальный порядок:\n",

|

||

" # Создаём полную маску из 0\n",

|

||

" mask = torch.zeros_like(probs, dtype=torch.uint8)\n",

|

||

" # Устанавливаем 1 в местах нужных токенов\n",

|

||

" mask.scatter_(dim=1, index=sorted_indices, src=sorted_mask)\n",

|

||

" # 6. Зануляем логиты токенов вне топ-p:\n",

|

||

" logits_scaled[~mask] = float('-inf')\n",

|

||

"\n",

|

||

" # 4. Применяем Softmax\n",

|

||

" probs = F.softmax(logits_scaled, dim=-1) # [batch_size, vocab_size]\n",

|

||

"\n",

|

||

"\n",

|

||

" if do_sample == True:\n",

|

||

" # 5. Если do_sample равен True, то отбираем токен случайно с помощью torch.multinomial\n",

|

||

" next_token = torch.multinomial(probs, num_samples=1) # [batch_size, 1]\n",

|

||

" else:\n",

|

||

" # 5. Если do_sample равен False, то выбираем токен с максимальной вероятностью\n",

|

||

" next_token = torch.argmax(probs, dim=-1, keepdim=True) # [batch_size, 1]\n",

|

||

" \n",

|

||

" # 6. Добавляем его к последовательности\n",

|

||

" x = torch.cat([x, next_token], dim=1) # [batch_size, seq_len+1]\n",

|

||

" return x\n",

|

||

"\n",

|

||

" def save(self, path):\n",

|

||

" torch.save({\n",

|

||

" 'model_state_dict': self.state_dict(),\n",

|

||

" 'vocab_size': self._vocab_size,\n",

|

||