mirror of

https://github.com/pese-git/llm-arch-research.git

synced 2026-01-23 13:00:54 +00:00

- Implement Rotary Positional Embeddings (RoPE) with separate cosine/sine components - Add vectorized computation of inverse frequencies for RoPE - Include tensor slicing utilities for even/odd column separation - Update dependencies in pyproject.toml and uv.lock

1630 lines

111 KiB

Plaintext

{

|

||

"cells": [

|

||

{

|

||

"cell_type": "markdown",

|

||

"id": "efbc675e",

|

||

"metadata": {},

|

||

"source": [

|

||

"# Llama\n",

|

||

"\n",

|

||

"\n",

|

||

"\n",

|

||

"\n",

|

||

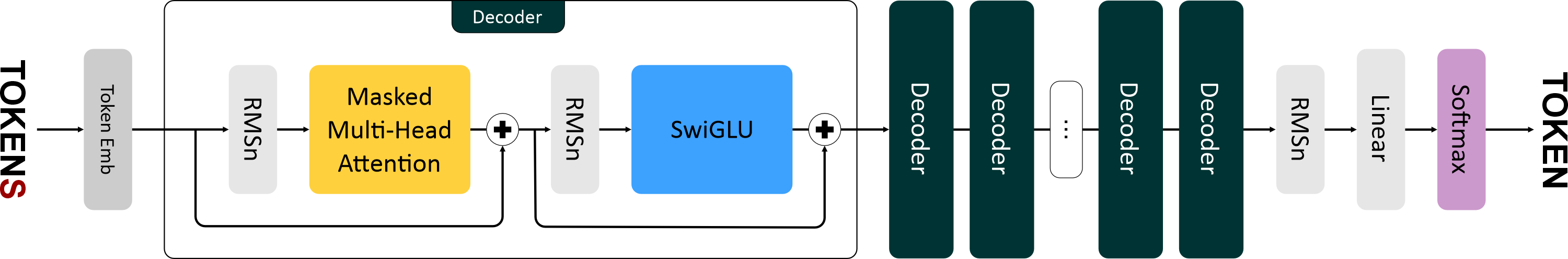

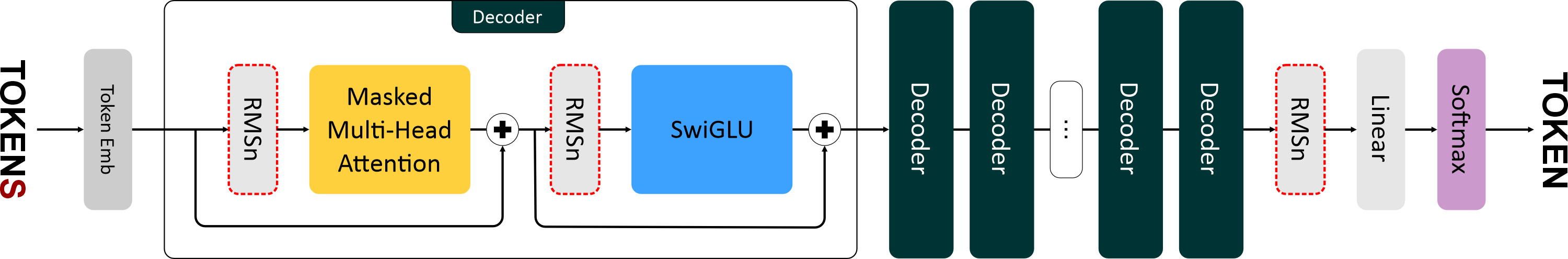

"Llama 1 вышла в феврале 2023 года. Это уже подальше, чем GPT-2. И в ее архитектуре появилось уже больше серьезных изменений:\n",

|

||

"\n",

|

||

"- Нормализация RMSNorm (вместе с pre-norm).\n",

|

||

"- Функция активации SwiGLU.\n",

|

||

"- Новый способ кодирования позиций — Rotary Positional Embeddings."

|

||

]

|

||

},

  {
   "cell_type": "markdown",
   "id": "2cedc663",
   "metadata": {},
   "source": [
    "# RMSNorm\n",
    "\n",
    "Llama uses a faster and cheaper normalization: **RMSNorm (Root Mean Square Normalization)**.\n",
    "As in GPT-2, it is applied in the *pre-norm* arrangement, that is, the normalization layers sit **before the attention and FFN blocks**.\n",
    "\n",
    "RMSNorm differs from LayerNorm in one main respect: it drops the centering step (subtracting the mean) and only rescales by the RMS.\n",
    "This reduces the computational cost (by 7–64%, as reported for different models) without a significant loss in quality.\n",
    "The figure below compares the distributions obtained by applying RMSNorm and LayerNorm to the same input data: the RMSNorm output is not centered around zero.\n",
    "\n",
    "<p align=\"center\">\n",
    "  <img src=\"https://ucarecdn.com/cbfbb78e-e2b0-40e2-ba56-73e5114d54f6/\" width=\"350\" alt=\"RMSNorm vs LayerNorm\">\n",
    "</p>\n",
    "\n",
    "## RMSNorm computation steps\n",
    "\n",
    "1. **Compute the root mean square:**\n",
    "\n",
    "   $$\\text{RMS}(\\mathbf{x}) = \\sqrt{\\frac{1}{d} \\sum_{j=1}^{d} x_j^2 + \\varepsilon}$$\n",
    "\n",
    "2. **Normalize the input vector:**\n",
    "\n",
    "   $$\\hat{x}_i = \\frac{x_i}{\\text{RMS}(\\mathbf{x})}$$\n",
    "\n",
    "3. **Apply the scaling:**\n",
    "\n",
    "   $$y_i = w_i \\cdot \\hat{x}_i$$\n",
    "\n",
    "---\n",
    "\n",
    "**Where:**\n",
    "\n",
    "* $x_i$ is the *i*-th element of the input vector.\n",
    "* $w_i$ is the *i*-th element of the learnable weight vector.\n",
    "  The weights let the model adaptively adjust the amplitude of the features.\n",
    "  Without them, the normalization would be too rigid and could limit the quality of the model.\n",
    "* $d$ is the dimension of the input vector.\n",
    "* $\\varepsilon$ is a small constant (for example, 1e-6) that prevents division by zero.\n",
    "\n",
    "---\n",
    "\n",
    "Since the input is a tensor, RMSNorm is computed in vectorized form as:\n",
    "\n",
    "$$\n",
    "\\text{RMSNorm}(x) = w \\odot \\frac{x}{\\sqrt{\\text{mean}(x^2) + \\varepsilon}}\n",
    "$$\n",
    "\n",
    "**Where:**\n",
    "\n",
    "* $x$ is the input tensor of shape `batch_size × ...`\n",
    "* $\\text{mean}(x^2)$ is taken over the last (embedding) dimension.\n",
    "\n"
   ]
  },
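The three steps above can be traced by hand on a small vector. The following is a minimal pure-Python sketch (it deliberately avoids PyTorch so that it runs anywhere); the helper name `rms_norm` and the sample values are illustrative assumptions, not part of the notebook.

```python
import math

def rms_norm(x, w, eps=1e-6):
    """Apply RMSNorm to a single vector x with per-feature weights w (plain lists)."""
    # Step 1: root mean square of the vector (eps avoids division by zero)
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    # Step 2: normalize every element by the RMS
    x_hat = [v / rms for v in x]
    # Step 3: scale by the (learnable) weights
    return [wi * xi for wi, xi in zip(w, x_hat)]

x = [3.0, 4.0]
y = rms_norm(x, w=[1.0, 1.0])
print(y)
# Mean of squares of the output is close to 1, while its mean is not 0,
# because RMSNorm rescales but does not center.
print(sum(v * v for v in y) / len(y), sum(y) / len(y))
```

With unit weights the output has a mean square of about 1, which is the only property RMSNorm enforces; the mean stays wherever the input put it.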

  {
   "cell_type": "code",
   "execution_count": 1,
   "id": "873704be",
   "metadata": {},
   "outputs": [],
   "source": [
    "import torch\n",
    "from torch import nn\n",
    "\n",
    "class RMSNorm(nn.Module):\n",
    "    def __init__(self, dim: int, eps: float = 1e-6):\n",
    "        super().__init__()\n",
    "        self._eps = eps\n",
    "        self._w = nn.Parameter(torch.ones(dim))  # learnable per-feature scale\n",
    "\n",
    "    def forward(self, x: torch.Tensor):  # [batch_size × seq_len × emb_size]\n",
    "        # RMS over the last (embedding) dimension; eps guards against division by zero\n",
    "        rms = (x.pow(2).mean(-1, keepdim=True) + self._eps) ** 0.5\n",
    "        norm_x = x / rms\n",
    "        return self._w * norm_x"
   ]
  },

|

||

{

|

||

"cell_type": "markdown",

|

||

"id": "09dd9625",

|

||

"metadata": {},

|

||

"source": [

|

||

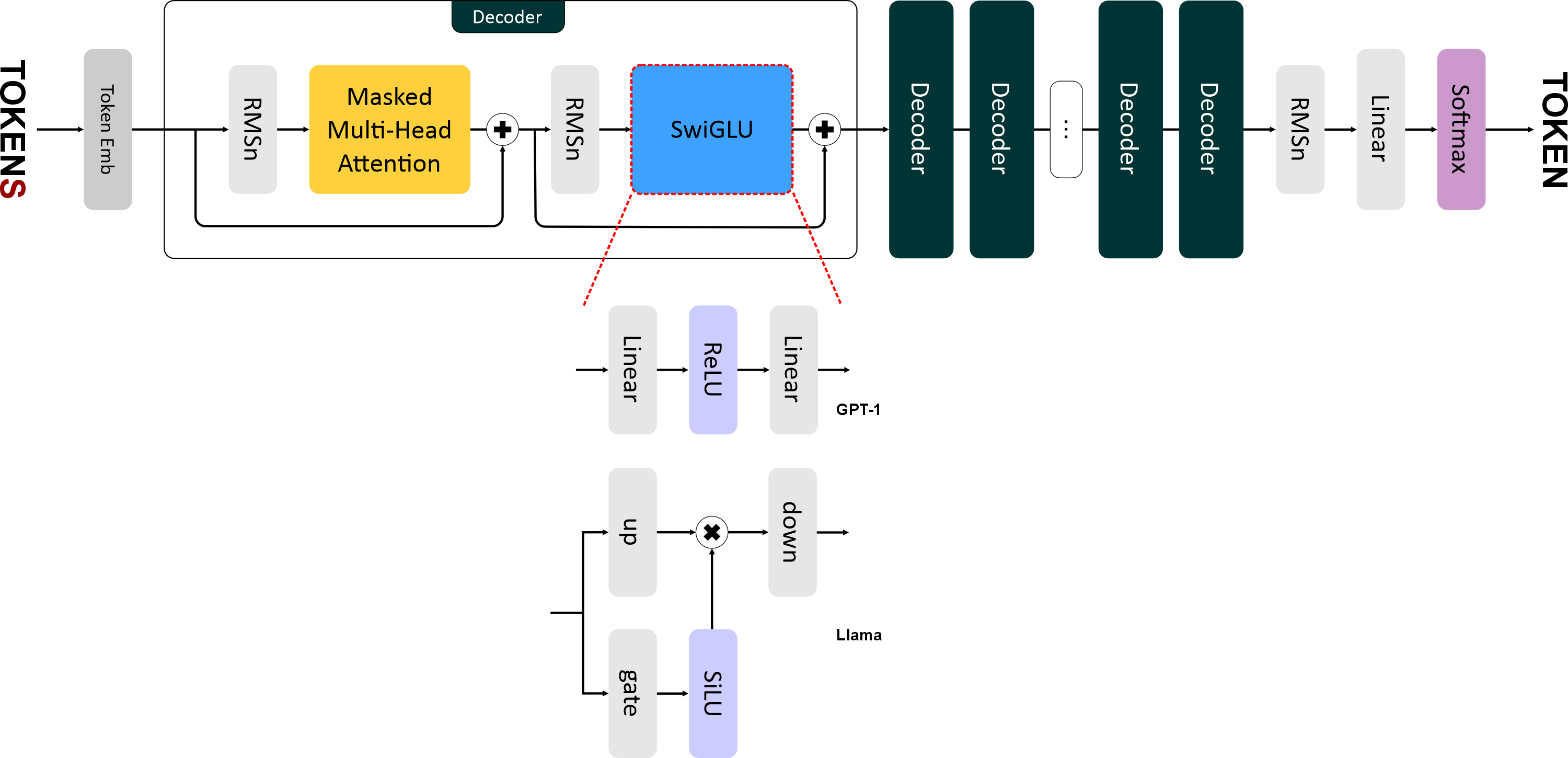

"# SwiGLU\n",

|

||

"\n",

|

||

"\n",

|

||

"\n",

|

||

"В **Llama** ввели новую функцию активации — **SwiGLU (Swish-Gated Linear Unit)** — это гибридная функция активации, которая представляет собой комбинацию трёх линейных преобразований и функции активации **SiLU (Sigmoid Linear Unit)**, она же *Swish* в терминологии Google.\n",

|

||

"\n",

|

||

"Формула SwiGLU выглядит так:\n",

|

||

"\n",

|

||

"$$\n",

|

||

"\\text{SwiGLU}(x) = \\text{down}(\\text{SiLU}(\\text{gate}(x)) \\otimes \\text{up}(x))\n",

|

||

"$$\n",

|

||

"\n",

|

||

"где:\n",

|

||

"\n",

|

||

"* $x$ — входящий тензор.\n",

|

||

"* $\\text{gate}(x)$ — линейный слой для гейтового механизма. Преобразует вход `x` размерностью `emb_size` в промежуточное представление размерности `4 * emb_size`.\n",

|

||

"* $\\text{up}(x)$ — линейный слой для увеличения размерности. Также преобразует `x` в размерность `4 * emb_size`.\n",

|

||

"* $\\text{SiLU}(x) = x \\cdot \\sigma(x)$ — функция активации, где $\\sigma$ — сигмоида.\n",

|

||

"* $\\otimes$ — поэлементное умножение.\n",

|

||

"* $\\text{down}(x)$ — линейный слой для уменьшения промежуточного представления до исходного размера (`emb_size`).\n",

|

||

"\n",

|

||

"> **Гейтинг** (от слова *gate* — «врата») — это механизм, который позволяет сети динамически фильтровать, какая информация должна проходить дальше.\n",

|

||

"> При гейтинге создаются как бы два независимых потока:\n",

|

||

">\n",

|

||

"> * один предназначен для прямой передачи информации (*up-down*),\n",

|

||

"> * другой — для контроля передаваемой информации (*gate*).\n",

|

||

">\n",

|

||

"> Это позволяет сети учить более сложные паттерны.\n",

|

||

"> Например, гейт может научиться:\n",

|

||

"> «если признак A активен, то пропусти признак B»,\n",

|

||

"> что невозможно с простой функцией активации между линейными слоями.\n",

|

||

">\n",

|

||

"> Также гейтинг помогает с затуханием градиентов: вместо простого обнуления (как в ReLU), гейт может тонко модулировать силу сигнала.\n",

|

||

"\n",

|

||

"SwiGLU более сложная (дорогая), чем ReLU/GELU, так как требует больше вычислений (три линейных преобразования вместо двух).\n",

|

||

"Но при этом показывает лучшее качество по сравнению с ReLU и GELU.\n",

|

||

"\n",

|

||

"График **SiLU** похож на **GELU**, но более гладкий:\n",

|

||

"\n",

|

||

"<p align=\"center\">\n",

|

||

" <img src=\"https://ucarecdn.com/6683e0c8-96b7-4389-826a-a73708b4a835/\" width=\"500\" alt=\"SiLU vs GELU\">\n",

|

||

"</p>\n"

|

||

]

|

||

},
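To make the SiLU formula and the gating idea concrete, here is a small pure-Python sketch. The function names `silu` and `gated` and the sample numbers are assumptions made for illustration; the sketch covers only the element-wise part of SwiGLU and leaves out the three linear layers.

```python
import math

def silu(x: float) -> float:
    """SiLU(x) = x * sigmoid(x), written as x / (1 + exp(-x))."""
    return x / (1.0 + math.exp(-x))

def gated(gate_out, up_out):
    """Element-wise gating: SiLU(gate) * up, the inner part of SwiGLU."""
    return [silu(g) * u for g, u in zip(gate_out, up_out)]

for v in (-5.0, -1.0, 0.0, 1.0, 5.0):
    print(f"SiLU({v:+.1f}) = {silu(v):+.4f}")

# A strongly negative gate value nearly zeroes the channel,
# a zero gate blocks it, and a positive gate scales it.
print(gated([-6.0, 0.0, 6.0], [2.0, 2.0, 2.0]))
```

Unlike ReLU, SiLU lets small negative values through slightly (its minimum is roughly -0.28), which is the "fine modulation" the gating note refers to.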

  {
   "cell_type": "code",
   "execution_count": 2,
   "id": "0484cf77",
   "metadata": {},
   "outputs": [],
   "source": [
    "import torch\n",
    "from torch import nn\n",
    "import torch.nn.functional as F\n",
    "\n",
    "class SiLU(nn.Module):\n",
    "    def forward(self, x: torch.Tensor):  # [batch_size × seq_len × emb_size]\n",
    "        return torch.sigmoid(x) * x"
   ]
  },

  {
   "cell_type": "markdown",
   "id": "0b64da5d",
   "metadata": {},
   "source": [
    "## SwiGLU"
   ]
  },

  {
   "cell_type": "code",
   "execution_count": 3,
   "id": "74ca39ba",
   "metadata": {},
   "outputs": [],
   "source": [
    "import torch\n",
    "from torch import nn\n",
    "\n",
    "class SwiGLU(nn.Module):\n",
    "    def __init__(self, emb_size: int, dropout: float = 0.1):\n",
    "        super().__init__()\n",
    "\n",
    "        self._gate = nn.Linear(emb_size, 4 * emb_size)\n",
    "        self._up = nn.Linear(emb_size, 4 * emb_size)\n",
    "        self._down = nn.Linear(4 * emb_size, emb_size)\n",
    "        self._activation = SiLU()\n",
    "        self._dropout = nn.Dropout(dropout)\n",
    "\n",
    "    def forward(self, x: torch.Tensor):  # [batch_size × seq_len × emb_size]\n",
    "        gate_out = self._gate(x)                      # [batch, seq, 4*emb]\n",
    "        activation_out = self._activation(gate_out)   # [batch, seq, 4*emb]\n",
    "        up_out = self._up(x)                          # [batch, seq, 4*emb]\n",
    "        out = up_out * activation_out                 # element-wise product\n",
    "        out = self._down(out)                         # [batch, seq, emb]\n",
    "        return self._dropout(out)"
   ]
  },
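The remark that SwiGLU costs more because it uses three linear layers instead of two can be checked with simple arithmetic. The sketch below counts parameters only; it assumes that the classic feed-forward block uses the same `4 * emb_size` hidden width and that every layer has a bias (the `nn.Linear` default). The helper names are hypothetical.

```python
def linear_params(n_in: int, n_out: int, bias: bool = True) -> int:
    """Number of parameters in a dense layer n_in -> n_out."""
    return n_in * n_out + (n_out if bias else 0)

def ffn_params(emb_size: int) -> int:
    # Classic feed-forward block: up (emb -> 4*emb) and down (4*emb -> emb)
    return linear_params(emb_size, 4 * emb_size) + linear_params(4 * emb_size, emb_size)

def swiglu_params(emb_size: int) -> int:
    # SwiGLU block as defined above: gate and up (emb -> 4*emb), down (4*emb -> emb)
    return 2 * linear_params(emb_size, 4 * emb_size) + linear_params(4 * emb_size, emb_size)

emb_size = 64
print(ffn_params(emb_size), swiglu_params(emb_size))
print(swiglu_params(emb_size) / ffn_params(emb_size))
```

Under these assumptions the SwiGLU block holds about 1.5 times as many parameters as the two-layer block of the same width.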

  {
   "cell_type": "markdown",
   "id": "ecd2bcc0",
   "metadata": {},
   "source": [
    "# RoPE\n",
    "\n",
    "This brings us to the most significant architectural change: in **Llama** the usual positional embeddings are replaced with **Rotary Positional Embeddings (RoPE)**.\n",
    "\n",
    "**GPT-1** obtained position information by adding positional embeddings to the token embeddings.\n",
    "**RoPE** instead encodes token positions by *rotating* the **query** and **key** vectors in two-dimensional space. Each position in the sequence gets its own rotation, and the rotation angle depends on where the token sits in the sequence.\n",
    "\n",
    "In simplified terms, it looks like this:\n",
    "\n",
    "* Word at position **1**: rotated by 1°\n",
    "* Word at position **2**: rotated by 2°\n",
    "* Word at position **100**: rotated by 100°\n",
    "* Word at position **101**: rotated by 101°\n",
    "\n",
    "The angle between words 1 and 101 is fairly large, **100°**, which reflects the large distance between them.\n",
    "The difference between words 100 and 101 is the same as between words 1 and 2, which shows that both pairs are the same distance apart.\n",
    "\n",
    "This relative encoding helps the model capture the distance between tokens.\n",
    "\n",
    "<p align=\"center\">\n",
    "  <img src=\"https://ucarecdn.com/26cd6249-bb93-4a26-9670-8d3847d9db4d/\" width=\"400\" alt=\"RoPE rotation illustration\">\n",
    "</p>\n",
    "\n",
    "Later, the **query** and **key** tensors are multiplied to compute the **attention matrix**.\n",
    "This means that the attention scores now depend directly on the relative positions of the words.\n",
    "\n",
    "---\n",
    "\n",
    "## Why RoPE is better\n",
    "\n",
    "* **Shift robustness:** the model does not overfit to specific positions in the training data, because it works with relative distances.\n",
    "* **Economy:** it is applied to the keys and queries through matrix operations, with no separate positional-embedding layer to create and store.\n",
    "* **Extrapolation:** in principle, the model can handle sequences longer than those seen during training, because it learns how rotations vary with distance.\n"
   ]
  },
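The claim that attention depends only on relative positions can be verified numerically in two dimensions. This is a minimal sketch using the simplified "1° per position" picture from the text; the vectors `q` and `k` are arbitrary, and the helper names are assumptions made for illustration.

```python
import math

def rotate(vec, angle):
    """Rotate a 2D vector counter-clockwise by `angle` radians."""
    x, y = vec
    c, s = math.cos(angle), math.sin(angle)
    return (x * c - y * s, x * s + y * c)

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1]

theta = math.radians(1.0)        # assumed: 1 degree per position
q, k = (1.0, 2.0), (0.5, -1.5)   # arbitrary query / key vectors

def score(m, n):
    """Dot product of a query rotated for position m and a key rotated for position n."""
    return dot(rotate(q, m * theta), rotate(k, n * theta))

print(score(1, 2), score(100, 101))   # same offset, same score
print(score(1, 101))                  # different offset, different score
```

Because rotating both vectors by the same extra angle leaves their dot product unchanged, only the offset `n - m` matters, which is exactly the relative-position behaviour described above.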

  {
   "cell_type": "markdown",
   "id": "8ba07b89",
   "metadata": {},
   "source": [
    "### How it works\n",
    "\n",
    "The core idea: the embedding of each token in the sequence is rotated by an angle that depends on the token's position and on a set of fixed frequencies.\n",
    "\n",
    "The input is either the key tensor or the query tensor, whose last dimension is `head_size`.\n",
    "We denote it by *x*.\n",
    "\n",
    "<p align=\"center\">\n",
    "  <img src=\"https://ucarecdn.com/c6d62324-a062-4be7-943c-36cf16b5a424/\" alt=\"rope_0_1.png\" width=\"250\" height=\"127\" />\n",
    "</p>\n",
    "\n",
    "Each row of the tensor is a vector that corresponds to one token in the sequence.\n",
    "We denote this vector by $x_m$, where $m$ is the position of the token.\n",
    "\n",
    "<p align=\"center\">\n",
    "  <img src=\"https://ucarecdn.com/8eb58921-02ef-4235-856d-e4cabd691f22/\" alt=\"rope_0_2.png\" width=\"400\" height=\"99\" />\n",
    "</p>\n",
    "\n",
    "The rotation itself is two-dimensional, but the vector has many dimensions.\n",
    "To reduce the problem to two-dimensional rotations, the vector is split into pairs of adjacent dimensions:\n",
    "\n",
    "$$\n",
    "x_m = [x_{m,0}, x_{m,1}, x_{m,2}, x_{m,3}, \\dots, x_{m,d-2}, x_{m,d-1}] \\Rightarrow \\text{pairs: } (x_{m,0}, x_{m,1}), (x_{m,2}, x_{m,3}), \\dots, (x_{m,d-2}, x_{m,d-1})\n",
    "$$\n",
    "\n",
    "> Because the vector has to be split into pairs, RoPE only works for an even `head_size`.\n",
    "\n",
    "After that, each pair\n",
    "$(x_{m,2k}, x_{m,2k+1})$\n",
    "can be treated as a vector in two-dimensional space:\n",
    "\n",
    "$$\n",
    "\\begin{bmatrix}\n",
    "x_{m,2k} \\\\\n",
    "x_{m,2k+1}\n",
    "\\end{bmatrix}\n",
    "$$\n",
    "\n",
    "Since this is a vector in two-dimensional space, we can rotate it by the angle\n",
    "$m \\cdot \\theta_k$.\n",
    "\n",
    "\n"
   ]
  },
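The pairing step above amounts to separating the even-indexed and odd-indexed components of the vector. Below is a minimal sketch on a plain Python list; the function name `split_into_pairs` and the toy vector are illustrative assumptions. The same `0::2` / `1::2` slicing can be applied to the last dimension of a tensor.

```python
def split_into_pairs(x):
    """Split a vector of even length into adjacent (even, odd) pairs."""
    if len(x) % 2 != 0:
        raise ValueError("RoPE needs an even head_size")
    even = x[0::2]   # x_{m,0}, x_{m,2}, ...
    odd = x[1::2]    # x_{m,1}, x_{m,3}, ...
    return list(zip(even, odd))

x_m = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6]   # toy vector, head_size = 6
print(split_into_pairs(x_m))
# [(0.1, 0.2), (0.3, 0.4), (0.5, 0.6)]
```

Each resulting tuple is one 2D vector that will be rotated independently of the others.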

  {
   "cell_type": "markdown",
   "id": "c386a55c",
   "metadata": {},
   "source": []
  },

  {
   "cell_type": "markdown",
   "id": "450e03ac",
   "metadata": {},
   "source": [
    "\n",
    "\n",
    "Each pair of dimensions of the vector is rotated by the angle:\n",
    "\n",
    "$$\n",
    "m \\cdot \\theta_k\n",
    "$$\n",
    "\n",
    "<p align=\"center\">\n",
    "  <img src=\"https://ucarecdn.com/f4eca185-7e82-43e8-a796-c88482fee36f/\" alt=\"rope_0_3.png\" width=\"150\" height=\"109\">\n",
    "</p>\n",
    "\n",
    "Here\n",
    "$\\theta_k$ is the rotation frequency, computed as:\n",
    "\n",
    "$$\n",
    "\\theta_k = \\frac{1}{\\text{base}^{2k/d}}\n",
    "$$\n",
    "\n",
    "where:\n",
    "\n",
    "* **base** is a hyperparameter (usually 10 000),\n",
    "* **k** is the index of the pair (from 0 to `d/2 - 1`),\n",
    "* **d** is the dimension (`head_size`) of the query (or key) vector in each head of each attention block.\n",
    "\n",
    "\n",
    "The rotation itself is performed with the matrix:\n",
    "\n",
    "$$\n",
    "R(m, \\theta_k) =\n",
    "\\begin{bmatrix}\n",
    "\\cos(m \\theta_k) & -\\sin(m \\theta_k) \\\\\n",
    "\\sin(m \\theta_k) & \\cos(m \\theta_k)\n",
    "\\end{bmatrix}\n",
    "$$\n",
    "\n",
    "To do this, each pair\n",
    "($x_{m,2k}$, $x_{m,2k+1}$)\n",
    "is multiplied by the rotation matrix:\n",
    "\n",
    "$$\n",
    "\\begin{bmatrix}\n",
    "x_{m,2k}' \\\\\n",
    "x_{m,2k+1}'\n",
    "\\end{bmatrix}\n",
    "=\n",
    "R(m, \\theta_k) \\cdot\n",
    "\\begin{bmatrix}\n",
    "x_{m,2k} \\\\\n",
    "x_{m,2k+1}\n",
    "\\end{bmatrix}\n",
    "$$\n",
    "\n",
    "$$\n",
    "\\begin{bmatrix}\n",
    "x_{m,2k}' \\\\\n",
    "x_{m,2k+1}'\n",
    "\\end{bmatrix}\n",
    "=\n",
    "\\begin{bmatrix}\n",
    "\\cos(m \\theta_k) & -\\sin(m \\theta_k) \\\\\n",
    "\\sin(m \\theta_k) & \\cos(m \\theta_k)\n",
    "\\end{bmatrix}\n",
    "\\cdot\n",
    "\\begin{bmatrix}\n",
    "x_{m,2k} \\\\\n",
    "x_{m,2k+1}\n",
    "\\end{bmatrix}\n",
    "$$\n",
    "\n",
    "$$\n",
    "\\begin{bmatrix}\n",
    "x_{m,2k}' \\\\\n",
    "x_{m,2k+1}'\n",
    "\\end{bmatrix}\n",
    "=\n",
    "\\begin{bmatrix}\n",
    "x_{m,2k} \\cdot \\cos(m \\theta_k) - x_{m,2k+1} \\cdot \\sin(m \\theta_k) \\\\\n",
    "x_{m,2k} \\cdot \\sin(m \\theta_k) + x_{m,2k+1} \\cdot \\cos(m \\theta_k)\n",
    "\\end{bmatrix}\n",
    "$$\n",
    "\n",
    "Where:\n",
    "\n",
    "* $R(m, \\theta_k)$ is the standard 2D rotation matrix from classical linear algebra.\n",
    "* $\\theta_k$ is the rotation frequency for the $k$-th pair of dimensions.\n",
    "* $m$ is the position of the token in the sequence.\n",
    "* $k$ is the index of the pair of dimensions.\n",
    "* $x_{m,2k}$ and $x_{m,2k+1}$ are the even and odd dimensions of the vector.\n",
    "* $x_{m,2k}'$ and $x_{m,2k+1}'$ are the even and odd dimensions of the vector after rotation.\n",
    "\n",
    "This happens to every key and query vector in each head of each attention block.\n",
    "\n",
    "---\n",
    "\n",
    "**Note**\n",
    "\n",
    "Different vectors and their components rotate at different \"speeds\".\n",
    "The rotation angle depends on the token position $m$ and on the pair index $k$:\n",
    "\n",
    "$$\n",
    "m \\cdot \\theta_k = m \\cdot \\frac{1}{\\text{base}^{2k/d}}\n",
    "$$\n",
    "\n",
    "---\n",
    "\n",
    "As an example, here is a summary table of the angle $m \\cdot \\theta_k$ (in radians) for several values of $m$ and $k$, with $\\text{base} = 10\\,000$ and $d = 8$:\n",
    "\n",
    "| Position $m$ | $k=0$  | $k=1$ | $k=2$ | $k=3$ |\n",
    "| ------------ | ------ | ----- | ----- | ----- |\n",
    "| 0            | 0      | 0     | 0     | 0     |\n",
    "| 1            | 1.0    | 0.1   | 0.01  | 0.001 |\n",
    "| 10           | 10.0   | 1.0   | 0.1   | 0.01  |\n",
    "| 100          | 100.0  | 10.0  | 1.0   | 0.1   |\n",
    "| 1000         | 1000.0 | 100.0 | 10.0  | 1.0   |\n",
    "\n",
    "\n",
    "\n",
    "- $m$ scales the angle directly and linearly: the larger $m$, the larger the rotation angle.\n",
    "- $k$ works the other way: the larger $k$, the smaller the angle,\n",
    "and it shrinks exponentially as $k$ grows.\n",
    "\n",
    "This uneven spread of angles creates a unique pattern for each vector,\n",
    "which helps the model tell **relative positions** apart."
   ]
  },
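The numeric values in the table above correspond to `d = 8` and `base = 10000`, with angles measured in radians, since then each frequency is a power of ten. A short pure-Python sketch reproduces them; `rope_angles` is a hypothetical helper name, not something defined in the notebook.

```python
def rope_angles(positions, head_size, base=10000.0):
    """Return {m: [m * theta_k for k in range(head_size // 2)]}, angles in radians."""
    # theta_k = 1 / base^(2k / d)
    thetas = [base ** (-2 * k / head_size) for k in range(head_size // 2)]
    return {m: [m * t for t in thetas] for m in positions}

table = rope_angles([0, 1, 10, 100, 1000], head_size=8)
for m, row in table.items():
    print(m, [round(a, 4) for a in row])
```

Reading a row left to right shows the exponential slowdown with `k`; reading a column top to bottom shows the linear growth with `m`.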

  {
   "cell_type": "code",
   "execution_count": 4,
   "id": "02c300f9",
   "metadata": {},
   "outputs": [
    {
     "name": "stdout",
     "output_type": "stream",
     "text": [
      "cos shape: torch.Size([512, 32])\n",
      "sin shape: torch.Size([512, 32])\n"
     ]
    }
   ],
   "source": [
    "def create_rotary_embeddings(head_size, max_seq_len, base=10000):\n",
    "    \"\"\"\n",
    "    Builds the cosine and sine matrices for RoPE.\n",
    "\n",
    "    Returns:\n",
    "        cos_matrix: [max_seq_len, head_size//2]\n",
    "        sin_matrix: [max_seq_len, head_size//2]\n",
    "    \"\"\"\n",
    "    # Inverse frequencies: theta_k = 1 / base^(2k / head_size)\n",
    "    freqs = 1.0 / (base ** (2 * torch.arange(head_size // 2) / head_size))\n",
    "\n",
    "    # Positions m = 0 .. max_seq_len - 1 (float, to match the dtype of freqs)\n",
    "    positions = torch.arange(max_seq_len, dtype=torch.float32)\n",
    "\n",
    "    # Matrix of angles m * theta_k (outer product)\n",
    "    freq_matrix = torch.outer(positions, freqs)\n",
    "\n",
    "    # Cosine and sine matrices\n",
    "    cos_matrix = torch.cos(freq_matrix)\n",
    "    sin_matrix = torch.sin(freq_matrix)\n",
    "\n",
    "    return cos_matrix, sin_matrix\n",
    "\n",
    "# Usage\n",
    "head_size = 64\n",
    "max_seq_len = 512\n",
    "\n",
    "cos_m, sin_m = create_rotary_embeddings(head_size, max_seq_len)\n",
    "print(f\"cos shape: {cos_m.shape}\")  # torch.Size([512, 32])\n",
    "print(f\"sin shape: {sin_m.shape}\")  # torch.Size([512, 32])"
   ]
  },
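Once the cosines and sines are known, applying RoPE to one vector is the pairwise rotation from the formulas above. The sketch below does this for a single position on a plain Python list and computes the angles inline instead of reading them from precomputed matrices; `apply_rope` is a hypothetical name and the input vector is a toy example.

```python
import math

def apply_rope(x, m, base=10000.0):
    """Rotate each adjacent pair of the even-length vector x for position m."""
    d = len(x)
    out = []
    for k in range(d // 2):
        angle = m * base ** (-2 * k / d)        # m * theta_k
        c, s = math.cos(angle), math.sin(angle)
        x_even, x_odd = x[2 * k], x[2 * k + 1]
        out.append(x_even * c - x_odd * s)      # x'_{m,2k}
        out.append(x_even * s + x_odd * c)      # x'_{m,2k+1}
    return out

x = [1.0, 0.0, 1.0, 0.0]
print(apply_rope(x, m=0))   # position 0: no rotation
print(apply_rope(x, m=1))   # first pair turned by 1 rad, second by 0.01 rad
```

In a tensor version, row `m` of the cosine and sine matrices would play the role of `c` and `s` for all pairs at once. Since each pair is only rotated, the length of the vector is preserved.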

  {
   "cell_type": "code",
   "execution_count": 5,
   "id": "ac072b9b",
   "metadata": {},
   "outputs": [
    {
     "data": {
"image/png": "iVBORw0KGgoAAAANSUhEUgAABKUAAAGGCAYAAACqvTJ0AAAAOnRFWHRTb2Z0d2FyZQBNYXRwbG90bGliIHZlcnNpb24zLjEwLjYsIGh0dHBzOi8vbWF0cGxvdGxpYi5vcmcvq6yFwwAAAAlwSFlzAAAPYQAAD2EBqD+naQAAfxFJREFUeJzt3QeYk1X2x/GbZJLpM/ShN+liFxWxi2DHurqyu6jYVsVed+2rYlkFEcWytnXtvXcQG1bsIIgivcP0nuT/3Nf/jAygQn7XJJN8P88zypR735PMMDnc9557fNFoNGoAAAAAAACAOPLH82IAAAAAAACAxaIUAAAAAAAA4o5FKQAAAAAAAMQdi1IAAAAAAACIOxalAAAAAAAAEHcsSgEAAAAAACDuWJQCAAAAAABA3LEoBQAAAAAAgLhjUQoAAAAAAABxx6IUgKTn8/nMFVdckegwkoZ9LuxzAgAA0k/37t3Nsccem+gwksb999/v5UU//fRTokMBEAMWpQBskh9++MGcfPLJpmfPniYrK8sUFBSYIUOGmFtuucVUVVWZVExy7Nt777233uej0ajp0qWL9/kDDzwwpmtce+215tlnn3UQLQAAaM6+/vprc8QRR5hu3bp5OVanTp3MPvvsY2699VaTDN5+++3GvOh///vfBr/G5oT28wMHDozpGrfffruXfwFIH76o/VcVAGyEl156yRx55JEmMzPT/O1vf/MSjtraWm/B5qmnnvLu2t11113Or1tdXW0yMjK8t3iySdFxxx3nJYb2/zZRWjc523PPPb3nY+jQoebFF1/c5Gvk5eV5CeimJGD19fXem40LAAA0fx988IGXU3Tt2tWMGjXKtG/f3ixYsMB8+OGH3g3BOXPmNH5tTU2N8fv9JhgMxjXGhrzH5h/2/y+//HKTz9udSj169PA+v9lmm5lvvvlmk69hc8s2bdp419pY4XDY1NXVefkYO8mB5ie+/8ID0GzNnTvXHH300d7du8mTJ5sOHTo0fu60007zkiW7aPVHSPTiy/7772+eeOIJM2HChCYLYw8//LDZbrvtzMqVK+MSR0VFhcnNzU3IAh0AAPjjXHPNNaawsNB88sknpkWLFk0+t3z58ibv28WXROdFzz//vJf/2AWktfOioqIi07t3b7NmzZq45UWBQMB7A9A8Ub4HYKPccMMNpry83Nxzzz1NFqQa9OrVy5x55pmN79udPP/617+8O2U2ebLnH/zjH//w7u6t7dNPPzXDhw/3kprs7GzvDtvxxx//m2dKNZypZBfC7O4sm7zZRM7uZqqsrFwvNrvF3C4e2flbtWrlLa7Zu48b689//rNZtWqVeeONNxo/ZneIPfnkk+aYY47Z4Jh///vfZueddzatW7f2rmuvb79+3cdlE6oHHnigcTt8wxkRDY9xxowZ3jVatmxpdtlllyafa3Dfffd57997773rlQbaj697JxMAACQXuxtq8803X29BymrXrt1vninVcNzA+++/b8455xzTtm1bb7Hm0EMPNStWrFhvvldeecXsuuuu3tfk5+ebAw44wHz77bcbHeuIESO83M7esFubXZT605/+tMEFIpur7LXXXt5jsWMHDBhgJk2atN7jsnFMnTq1MS/aY489mjxG+7lTTz3Vm6dz584bPFPK3jy1O8kuu+yy9eKzX7fudQEkFotSADbKCy+84J0jZRdaNsYJJ5zgJQPbbrutGTdunNl9993N2LFjvQWhte/8DRs2zEsiLrroIu/MhJEjR3pb1TeGTXzKysq8ee2fbVJy5ZVXrnfn0ZYa2rt2N998sznrrLPMW2+9ZXbbbTdTXFy8UdexSdLgwYPNI4880iShKykpafJ41mbP2Npmm23MVVdd5S0O2Z1NtvRx7d1kDz74oJeY2cTQ/tm+2fO61mbH2IU2O8eJJ564wWvZxTh7ppVNRBsW2+y5FPa5GD16tHdHEwAAJC+7E/2zzz6LqeStwZgxY8yXX3
5pLr/8cvP3v//dy91OP/30Jl9jcw27CGWPD7j++uvNpZde6t0Asze+Nvag8JycHG9hau28yF7XLij92s06uxBkH6O9QXnTTTd5Z3LaxaXbbrut8WvGjx/vLTT169evMS/65z//2WQeO8bGa3NMmztuiF38sl9n88Pp06d7H1uyZIn3/NjjFk455ZSNepwA4sSeKQUAv6WkpMSePRcdMWLERn39F1984X39CSec0OTj5513nvfxyZMne+8/88wz3vuffPLJb85nv+byyy9vfN/+2X7s+OOPb/J1hx56aLR169aN7//000/RQCAQveaaa5p83ddffx3NyMhY7+Pruu+++xrjmzhxYjQ/Pz9aWVnpfe7II4+M7rnnnt6fu3XrFj3ggAOajG34uga1tbXRgQMHRvfaa68mH8/NzY2OGjVqvWs3PMY///nPv/q5tS1ZsiTaqlWr6D777BOtqamJbrPNNtGuXbt63zsAAJDcXn/9dS9nsW+DBw+OXnDBBdHXXnvNyx/WZfOOtXOHhnxl6NCh0Ugk0vjxs88+25uvuLjYe7+srCzaokWL6IknnthkvqVLl0YLCwvX+/i6pkyZ4l3niSeeiL744otRn88XnT9/vve5888/P9qzZ0/vz7vvvnt08803/828yBo+fHjjmAZ2nB2/robHuMsuu0Tr6+s3+Lm5c+c2fqyioiLaq1cvb77q6movTysoKIjOmzfvNx8jgPhjpxSA31VaWur9327x3hgN5WJ2587azj33XO//DbuFGrao2wPC7QGVm2rdO112x5Ets2uI9+mnnzaRSMTbRWXPPWh4s4eH2p1TU6ZM2ehr2Tlsd0Ebq92dZf//a3cDLVuy18Ceq2B3Vdn4Gu7YxfoYf419TPZuoy0xtNf54osvvHI+2x0RAAAkN9tlb9q0aebggw/2dh3ZYxPs8Qa2A589v2ljnHTSSU3K+20+YA8Bnzdvnve+zRHsLnF7LMHaeZEtt9txxx03KS+yO93tkQiPPvqo143Y/t/OuzF5kc2J7HXtLvoff/zRe39j2V3jG3N+lN3NZXfQz5w509sdb3NPu3PfHiQPILlwUi6A39WwsGEXYzaGTX5sLb89Z2rdhRO7ENWQHNlk5PDDD/fKzGyiYM8NOOSQQ7zFno05xHPdxMKeu9SwCGRj/v77771EyS5AbcimdK2x5zPYLd/2PAJbTmeTPNs179fYRaurr77aWxxa+xytTe0KY8/Y2li2lNCen2UTL5uY7r333pt0LQAAkDiDBg3ybqjZcyvtwtQzzzzj5Uc237D5hD2HKda8yLJ5UUN524Zsyo0sm0PZIwZsXrTDDjt4xwf81s06e96VLSu0C2/rnv9pF6Xs2aCu86IhQ4Z4ZYz2pp1d4Fv3zFIAyYFFKQC/yyYpHTt23ORzDn5vAcZ+3h7+bc+QsucevPbaa17CYM8asB+z5x38ll+7U/ZzxZ/xdknZa9jznzb0tb83/7pssmXv0C1dutTst99+GzyM1Hr33Xe9O532ztztt9/uHQxvkzd7yKdN3jbF2ncWf4/dJWYPjrfseQv28dvFQQAA0HyEQiFvgcq+9enTxzs70h4qbhd11LzIsmc12RuF69rUzr42L7rjjju8BixbbbXVry6a2UPc7Y0ye1aUPd/TnidlH6PdWW8X3Rricp0X2ZuCb7/9dmMMdjHM7qACkFxYlAKwUexB2nfddZd3h8se+v1b7EGWNsGwd+T69+/f+PFly5Z528bt59e20047eW/2UHK7aGMPO7fbwO1h6Qrb+c8mYvaumk3qVLaLjT2I3C6YPfbYY7/6dU899ZTJysryFtnW3vFlF6XWtak7p37Laaed1njw+8UXX+wdGLpuCSUAAGg+tt9++8aDulU2L7Js5zq7+1tlD0e3u7Pswo89NP3X2BuPdoHIliGuvZtrQ+WCLvMiu4hny/dsR+QLL7zQOxh9woQJzuYH4Aa30AFslAsuuMBrHWwXiuzi0rrsHSjbcc5q6P
ZmF0XWZu+OWbbrS8N28oa7dw223npr7/9rl7zF6rDDDvPuGtrywHWvY9+3O4s2hd1ZZbvH2DuCBx100K9+nb2mTapsiV8D29Hm2WefXe9r7XO6sV0Af4vdcWYXyq677jov6bKlfJdccomZPXu2PDcAAPhj2QWadXOVtc/p7Nu3r3wNW8Jmd7/bjr4bOstzxYoVmzSfzXXsIo9d/PnrX//6uzu41n58tmRvQzfrXOVFH330kbcYZbsu2zNNzz//fDNx4kQzdepUeW4AbrFTCsBG312zu5iOOuoob/fT3/72NzNw4EDv3IMPPvjA21Z+7LHHel9rt3CPGjXK21llEwt7dtTHH39sHnjgAe/MqD333NP7Ovu+LW+zO5Ds/HaXz9133+0lTA0LW2rM9lwnu2vILgrZa9vD2ufOneud02DPXTrvvPM2aU77uH6PXXSzC3D77ruvt7V9+fLl3nkG9oytr776qsnXbrfddubNN9/0vt6WSNpdXfaw0U1h57dnJtjntaH1s028bIJrvyfvvfceZXwAACSxMWPGeOVlNieyZW4N+ZW94dS9e3evhE9l8yt7c80uIG277bbeDSx7Zub8+fO98yjtGUw2f9gUI0aM8N5+71B0W65nb+jZHefl5eVevmd3bK27A8zmRTZGm7/ZvMl+za+dgfVrqqurvXzNnilqd+Fb9gal3bFln8evv/7aW/wCkBxYlAKw0ew5SXZR5cYbbzTPPfeclzTY8rQtt9zSOwfKnrfU4D//+Y/p2bOn1/nELgDZswvs4tDa5yE0LFbZUj27+8oecmkPy3zooYc26SDL32J3DdnSPXtmgU1ILHuWgU2Q7OP5I9jk6Z577vF2Ldk7dPax2G3tdmFs3UUpuxhlF8fsribb3c8mUZu6KGUXpOzOMnvHsWHbe+vWrb1FQZso2juFdqcbAABITva12t7gszuj7Ou3XZSypW6nnnqqlyP82jmWm8reLLM3wWyOYvM5mz/YDn+2U5+Lha8Nsbu87I5u+zjszUCbE9rcxS6IrXv4+GWXXeY1xLHdB+3NSpsrbuqi1D/+8Q8zZ84cb1HPHqdg2UUxezPUHhdhd03Zm6IAkoMvuqF9ogAAAAAAAMAfiHoOAAAAAAAAxB2LUgAAAAAAAIg7FqUAAAAAAAAQdyxKAQAAAAAAIO6axaKUbaVuW6Ha7gm2K5Xt1gUAAIDfRg4FAACSWdIvSj322GPmnHPO8drIT58+3Wy11VZm+PDhZvny5YkODQAAIGmRQwEAgGTni0ajUZPE7F29QYMGmYkTJ3rvRyIR06VLFzNmzBhz0UUX/e54+/WLFy82+fn5xufzxSFiAACQSmyqVFZWZjp27Gj8/qS/n+ckhyJ/AgAA8cifMkwSq62tNZ999pm5+OKLGz9mH8zQoUPNtGnTNmoOm1DZBAwAAECxYMEC07lzZ9McqDkU+RMAAIhH/pTUi1IrV6404XDYFBUVNfm4ff+7777b4JiamhrvrUHDRrDAgD8ZXyD4B0cMAABSTTRcZ8IzHvd2DTUXm5pD/Vr+NOf775vV4wYAAMnB7pLq1bv37+YRSb0oFYuxY8eaK6+8cr2P2wUpXyCUkJgAAEDzl8plbL+WP9lEsqCgICExAQCA1M+fknpRqk2bNiYQCJhly5Y1+bh9v3379hscY7ep20M9G5SWlnrbz0ecOtoEs/NijuWbb5vGsKlWLVhkVNVrtBhqK0rkGMK1VfIc0Ug4oeN/niMizwEAQKrkUL+WP2015nHjD2bHHEfH3l2Nou9mrYxq2+4tpfE9WubIMbTL1W+MtszW0vbMgH4eWlaGtjCb4dcXdgPiFAEHMfiTYJHbwcMwybDM7uJxAEhOG3t4eVKf1hkKhcx2221n3nrrrSYHb9r3Bw8evMExmZmZ3h29td8AAADSyabmUORPAAAgEZJ6p5Rl79qNGjXKbL/99maHHXYw48ePNxUVFea4445LdGgAAABJixwKAAAku6RflDrqqKPMih
UrzGWXXWaWLl1qtt56a/Pqq6+ud3Dn7xkfecUURDJjjqPqn7/dOvn3/OdTvXzvhWnzpPFL566SY6hYsUCeo6ZstTS+vrpcjiFSXyuNp4QQAJAOOVT16iXGl5EVcwxfzvjAKH7ssJlRTe+3hTS+y2at5Ri276mXIfYriv0YCqtTfuzfxwZtxDJEtfzPm0MsQwwF5BCMg0pIvQzRlxolhJGNre9JcpQhAim8KGWdfvrp3hsAAAA2HjkUAABIZkl9phQAAAAAAABSU7PYKeXCFac9akLCGtzJL38iXf+CWyca1cD2A6Xxd74zV45h7qzYO/A0WLNIKwGsLlkhx1AndiJUy/9czaGihBAA8Fsm33mqyc+P/dDz/a785aD1WMz74AWjUvOGijWD5BjKSqvlOVaIJYBbd9W6EFpd67TjC9rl6V0I80PaP1/CUb3OKuSgdi7Dn/h6Md9G98b69RlSoezNRQhqGWIyPA9AorBTCgAAAAAAAHHHohQAAAAAAADiLm3K9/46vKfJC8b+cO988jvp+icb/ZDR/dUSwN16yDHcaRJvTaIDcFD+54KL8j+fX1+XpgQQAFLXT/seaHIDsbcre+WdKdL197vSyNQSwJWztSMcfqaXACYFByWAMq0JYTr98+d3BcWasbBc/pccJYDJ8ChcdCGkBBDNFTulAAAAAAAAEHcsSgEAAAAAACDu0mb/6oqr/2Mq8vJjHr/bh3tK13/0udlGdWr/66Txwy+6RY5haXlHeY6HKrSys7oarfOLFamrTeh4F6KRcFLMAQBIXW/PLTaZvtjvYZ5w+fHS9a85Rc9dzly5RBq/avancgzFC2bKc4RycqTxszP1tD8vS5sjS245Z0xQ7HwXdHB0gV/4O9HAJ5ZauajUUsu9Ag6iiDoonouIcbgoe0t8H0NKANF8sVMKAAAAAAAAcceiFAAAAAAAAOIubcr3/nbaTcaXkRnz+NJ37pCu/+HAUdJ4b447PpDGH7TLI3IMe2/9J3mOWUvLpPFvl1TLMdRWtpHGh2urEl4656L0zkUHPxXd+wAgeV36ylWmIDf2srFzdzlPuv64U7Xux9aDe20jjZ+y+Ac5huo1S+U5ShbNlcbnFsSeBzf4MV+bo3WeHkNOMPZukFZmhjbeyvAH5TnEKkQTUOv/7O4EMQXz+fV6MZ+LxxGNJrT8L5XK3tQSwFR5HhBf7JQCAAAAAABA3LEoBQAAAAAAgLhLm/K9ztvuZgKZsW8///tXsXfus/42uJNRPfbBQmn8wEeel2PYbJth8hw792wtjf9qQYkcQ2VJjTS+trJUjqFeLAF0UXrn8+tb2OngBwCpa793Ck1GVm7M40cXZknXf3/0JUZ17bOvSuMP/nQnOYZFn74iz1G5apE0vmRlFzmGlS207+dCcbzVMickjc8L6blPTlC/r5/h1/4ZliGWrFkBcYqoi+57DjrGReQugonn4Glw0sEPSAR2SgEAAAAAACDuWJQCAAAAAABA3KVN+d6HY3qagvy8mMcXHn23dP1J/7nWqJ4UO/h989IcOYYef35dnmNrsYPfll0K5RhWLyuXxleWFMgx1FVoZYiRutqkKL1zUQKoooMfAPwxvnzhKeMLxF4uNfWlq6Xrq937rHGrPpXGD9y+sxzDiu9aynPUVWp5Q8WKBXIM5W1iL+W0lhTrHZQ7t9Lyn1bZeue8vDo978jO0Aq26h2cnqB28FO797nq4Kc+jqiDGkK1g1+qdK1Tu/el0nOBjcdOKQAAAAAAAMQdi1IAAAAAAACIu7Qp35uw/TEmyxf7Glz2nqOl6z9a3dOo9i7Stky/u6JSjmHQm+/Ic3Te+Uhp/MCOevne9JarpfFlq7VujFZNWezlpC6697nq4AcASF1XXHeOycqN/TXv6rI66fqFQb1EfNaN46TxJ1/0HzmGz97uI8+x8ruPpPHVJSvkGMqLu0vji8u07sfW8lKtBLBdfqYcQ8t6/Z9QtWHtZzsU0P9uhMWyNbV7X9J08E
uRcjE6+KG5YqcUAAAAAAAA4o5FKQAAAAAAAMRd2pTvdcgKmGxf7Ntcx101Urr+pXdoW66taefsLY1/6fzn5Bjmvf29PEeX0d9I4/u26SfH0KF1jjR+1dIyOYaKzCxpfCAj9m5IDcIOOuclQ/c9Fd37AGDDDnxxrMnPjL1b2ZafdpSuP+0I/TV/ynNa7nLCJO34BKt9r27yHKvnTE9o9z6rqlQ7/qCiVDu6wFpVrh09UFZTL8dQ6aD7XnW9Nkd2UN9bEI6IXev8ydGtzScWrvnE7n2WX6whVLv3eTFQe4dmip1SAAAAAAAAiDsWpQAAAAAAABB3LEoBAAAAAAAg7tLmTKlDZr5rCgoKYh4feX68dP3RH+tnMbW89mppfJt/vijHMOv7NfIc23/1njS+695byTH0bKedafDDPP15CGZpMdRmrEmK86DUOaKRsBwDAOCPMf62aSYk3MP07/0X6fp9xt1mVBMf3kOb4J2H5Ri2H7iDPMcP09pK46tWLZZjqClZqcVQXiTHsFo8U6q4sk6OoTJPz11qxDOlwg6Ow4z6owk9k8oKuDgHibOUUop6zhhnazU/7JQCAAAAAABA3LEoBQAAAAAAgLhLm/K9rU57xPiD2TGPP+P5e6XrF+w52qiemK/t092xdeyPv8G7KyrlOVZ88q00vv1++pbpzdpo7Z3fz8uUYwjl5Ejjq0P699MfDMlzROq1bfQAgOR19hk7m/zM2F8r+hx0nHT9qz+vNqo2Ia3M/IdHXpBjOPiiEfIcL7btkvDyvdqKEml8dYWD0rkqbY6SSj1vqazTc9G6iJbX1zqo3wsFtL8bERNNeKmWFRXr96IOYkiVEkL1qUiRpwFxxk4pAAAAAAAAxB2LUgAAAAAAAIi7tCnfqylebnwZWTGPLxG36R48cphR3fT0N9L4xw4dIMfw0u0fy3MsnT5fGt951U9yDF0KO0jjWxfG/rPUYHmmtmXaHwymRPc9FzGoHfx8fn19PipuwweAZPTs8AtNVm5+zOPH1LwjXb/HvVVG9ehW7aTxX7/yoxzD0ImxP4cNWnXqKI1fNVt/rauvLpfG11Zq463qSu25LKuuT4ryvWqx+15dWK85C4t1a9GoXqzlonJOLQH0OYjC59OeCz0bBpovdkoBAAAAAAAg7liUAgAAAAAAQNylTfnelLtOM/n5BTGPz713hXT9Fgf3Nqqie56Wxne74gg5huAkvXxvxYyV0vjwvBlyDJ379JDGd2ihl+/9mK2V32U46L4XyNC77+mb4HVqCaBa/gcAqepf/xhnfIHYXyuy57wrXX9VzyFGNeiSw6XxVxx9uxzDkau+k+do17WFNH5uVl7Cy/dqytbIMdRUtZXGl1TqHQCravW8oUYt33NwbEAkKnbfc1B756T7Xgq0jIs6aAEYcfBA/EnwXCTDz1QqPA/NCTulAAAAAAAAEHcsSgEAAAAAACDu0qZ878d99je5QolP5quvS9fvesnJRlW1SisZq97mn3IM7bP+Ic/xY3mtNL7mB718r9VWB0vjO7TQS+cys7W/fgEH5Xt+B+V76hyReu3nwaL8DgD+GFuPOMJkZOXGPH7upW9J1y8auKtR1Qw/ShpfWj9RjqHq/RflOQb3/pM0/ov8VnIMdZWlCS3/s2qqtPK7cgfd91x08KsJJ777nlrmpHbvc9XBT47BxRwpUEIIJAo7pQAAAAAAABB3LEoBAAAAAAAg7tKmfG/qvBKT6Yt9De6hy56Vrj/m2U+MqmDP0dL4F2avkmPY0kHXuXdXVErjV8+cJ8fQPqBtu+5QoD8PWTla2VswKzMpyvd8gUBCO+cBAP44L++82hTkVsc8fvE5Wvld+wP08r1x78+XxrcJ6a9T817X88DBF2lHQdzXskiOoXzZT9L4uiq9fK+2Ssvhamr00rvyar2DX3USdN+rE0sIM8Uc0IqYxJchBqidS6pSSL4b6YedUgAAAAAAAIg7FqUAAAAAAAAQd2lTvnfpK1eZgtycmM
dPOPMN6frzK/VtvgP22Eka/5+pP8oxjB/SWZ6j9MnvpPGrZy+RY+hUos3RLreFHEN+rlY6lxHU15T9waA8RzKU36kxuOje5/Pr34+og634AODSFftfLh1/cNyCL6Trnx1ebFT9x2p5x/h2sXcfbDDvnQXyHAPHaXHktdHL91aJr3Xh2io5hrrqqoR277OqavW8oUYs31PL/1x0z1PL5iwHDfzkkjEXjyMg1py5yAATn5EDsWGnFAAAAAAAAOKORSkAAAAAAADEXdqU7+33TqHJyIp92/Muo/4qXf+gVR8Z1REjNpfGj7rqRTmGbsO3k+cwYvnemh+L5RB8qxdJ49sVtpNjaJUndt/LzEiK7nt+sXQuFcr/XJUAAkCy2btnS5MrdNg66LLXpes/teIuo1q0rJ80vu+hA+QYnrn3M3mOvf1a9+IWbWM/xqLBAjFviNTXyjHUVWsd/Opq2sgxVDoo36sVO9/VOag5qwtrc0SddM5LjV5rEbEOMeDTn4eog1rIiNj7zp8a307EGTulAAAAAAAAEHcsSgEAAAAAACC9yvfGjh1rnn76afPdd9+Z7Oxss/POO5vrr7/e9O3bt/FrqqurzbnnnmseffRRU1NTY4YPH25uv/12U1S0aR1EvnzhKeMLxL7luPyli4wiMuAcowqEtA40q+ZMl2PIv+RoeY4s/8PS+BVLKuQY6pfMlca3Khokx9BaLN/LCOklZwEH5Xtq6ZuL0rlk6L4HAKmYP3V79UWTl18Qc6yLhp9lFE98+4NRhbfoJo3vesxRcgzzJ34ozxGY/6U0vqgoX45hRihbGl9XWSLHUF+l5YH1dfprfnl1fcI7+NWLpXcuSgDFCkRP1J/4EkAXHQDFqjckGRcdGSllbCY7paZOnWpOO+008+GHH5o33njD1NXVmWHDhpmKil9ebM4++2zzwgsvmCeeeML7+sWLF5vDDjsskWEDAAAkDPkTAABIFQndKfXqq682ef/+++837dq1M5999pnZbbfdTElJibnnnnvMww8/bPbaay/va+677z7Tv39/LxHbaaedEhQ5AABAYpA/AQCAVJFU3fdsEmW1atXK+79Nruzdv6FDhzZ+Tb9+/UzXrl3NtGnTNimpuuK6c0xWbuzblifveqRR5LymdZ+xOo0/QxpfX50lx1DReVt5jraZWqnVshp9y3TtonnS+Pwd9ZKz1nmZ0viQ+Dw6674nzpEM3fcAoDn7I/OnvU++3fgyYs8ftv/TSKOovXSKUbXppy3CVQwcLsdQ56AUpPrradL4Lbv+SY7h/dxCaXxt+Ro5hnBtlRZDTeJL71x08KuL6LVzEbFGyUXVm4MKQDfld2oM6nhKCJHGkmZRKhKJmLPOOssMGTLEDBw40PvY0qVLTSgUMi1atGjytfY8BPu5DbHnJti3BqWlpX9w5AAAAIlB/gQAAJqzpOm+Z89G+Oabb7wDOdXDPwsLCxvfunTp4ixGAACAZEL+BAAAmrOk2Cl1+umnmxdffNG88847pnPnzo0fb9++vamtrTXFxcVN7vYtW7bM+9yGXHzxxeacc85pcqfPJlYHPH+Nyc8MxhzjDXNWG8X7d+hdV87/7+fS+KwtD5Fj+HRxuTxH95zYvw/WzLJaOYay+cuk8a1D+npuG7H7XjBT/+ubEUp89z0AQPLmT1mtOhh/MPaOa1NO3doopr/e1aiW7avF8OLsVXIMuQG9rmbpxzOl8VscH3sXxQah/J9LRGO27Cc5hnqxfK+uRi+9q3XQwa+qVisjrHPSfU8rngs7aFEWFTvnWRGxeM5Fp7UAtXPOqN8OvhPNT0J3SkWjUS+heuaZZ8zkyZNNjx49mnx+u+22M8Fg0Lz11luNH5s1a5aZP3++GTx48AbnzMzMNAUFBU3eAAAAUgX5EwAASBUZid5ybjvDPPfccyY/P7/xnAO7bTw7O9v7/+jRo707d/bwTpsgjRkzxkuo6BwDAADSEfkTAABIFQldlJo0aZL3/z322KPJx2
3b4mOPPdb787hx44zf7zeHH364dwDn8OHDze23377J17pl0kcmJGwMG7lTJ6O4f8oLRvXham27crt+g+QYXpyx4QNSN8WInk0PXt1UH366RI6hbP5yaXzbCn0rf8ssrYwxM1sbb/kz9M2S/qDYfS8QSHgJoYsSxGhE38oPAMmWP3054Qhp19THew8zip3/d7NRtc3vI40/4X/a8QnWEbl6ufzS6Qul8X0vyJVjyCpoYxItUqcd41Avls1ZdS46QddHEt59Ty0BjDhoGeeidA7uOhlyKAfSblHKbj//PVlZWea2227z3gAAANId+RMAAEgVSdN9DwAAAAAAAOkjKbrvxcPZZ+xs8jNj3zrd/rJbpeuHDrrOqLLFzi3b7vBLZ55YvfelXjp38tYdpPF1n+gxlC4slcb7K9fIMRRmtZPG57novhfU16V9fm0Ouve5ey6jDrbyA8Danuy/m8n2xf57+qM11dL1f1ij5QzWMSt+OfA9Ft9/ppfs9++mHxy/ZLbWCXq3fL3sP6cwJ6Gvc1ZY7L6njrfqHXTfq6wNJ7z7Xlgsv3MRg9F/LI1aReiiglAtQ3TQoBNottgpBQAAAAAAgLhjUQoAAAAAAABxlzble0/tc6HJys2Lefxzt3wiXX+Hww8wqqHzXpHGZ+7SQ45h1FUvynO026WvNsHd0+UYypaUaROUaN37rJaFWkfHwhx9v3NGUC+d82doHYX8Dsr3kqEEkA5+AFLRqtqIyRLKSg7r21q6/sl3fmRUW664Sxq/Zlk/OYZue2wmzzH93s+k8QdHyuUY8lpkJTRncPFaWe+ifK82kvDue7VhB933xJoztfzPijoonotEm3/tm4tOhgGfLy6NNH5LxOgx+Jv/txObiJ1SAAAAAAAAiDsWpQAAAAAAABB3aVO+d+0l44wvEEpYV6v5U7TufVYoMFwan9tWDsEUz58pz5G37S7S+KDvETmG8uWV0vjwKr0DYH7bQdr4LP2vbyBDX5dWy+98KVK+BwCp6NTPHjMF+bEff2DEcq1F+15tVG99/5M0vr53FzmGTvtouY+19HatlDFjtfY8WAWtchJevldfrZUhhmu0jpDeHA5K56rE7nvqeCsilu+5KDlTu9a54CIG+amgZC3lqD9X/jT6mWCnFAAAAAAAAOKORSkAAAAAAADEXdqU72176JEmIys35vGL5qySrh+49xKjyj3xMml8+M375RhqSlfKc0S7by2Nz3NQcraiRtvyHF61VI4hL6Q9jsKcUEp030uG0js65wHAhu00/nsTCMVestWxVyvp+oVd+hvVD19MlsZntSiSY8jYbpg8R0X439L4urkz5Bh6ttVyuE+yhVLQ/1dXWSqNj9TXyjHU1+mv+Wr5XY3Yvc9N970kKHtz0DEumgLd+4DmjJ1SAAAAAAAAiDsWpQAAAAAAABB3aVO+98IOy01Bbuzbz38Ytb90/Wf6X2pU0SFnSuN3evg1OQZjOsozrMzQtvK3zdR/bNeI266rl6+QY8gNamvCLXKCcgwZYgzJUr6XDCWAAJCKFn3+jvFlZMY8fu77Wqe04y863ag6vHGnNL5owA5yDIsyO5hEq/z+O3mOnv20LoIZoWyTaOHaKnmOeged72rFDn61Lsr3xBjU8ZaL5nt6FInn4nlwUQpJF0AkAjulAAAAAAAAEHcsSgEAAAAAACDu0qZ878oDrzKZvtjX4E4/8yXp+tOLq43qg6e/lcZnT54nx5C15TbyHN+t1LZNF2XqpVozy7TOK1XL18gxtM7Q9scWZOl/fTNC+nMZyNDmoPQOAJLXw5POM7l5+TGPP/bK56Xrj9+3m1F9toNWOjdzh85yDO/OK5bnyA1oecPqmXoe2H1w7EdhWMHcQpNoYSfd9/SCsbBYfldbr5cQis33TMRBvVhYDcJB97yIg+I59WEEqJtzhirG5oedUgAAAAAAAIg7FqUAAAAAAAAQd2lTvrdP71YmNxB7mdAt49+Xrr9HG227s/XQh1Ol8V
+W6CWEhZ37yHNMm6+Vvu1YlCvH8Mka7bmoXK5vw29TXSqNzwvpf32DDjoZ+jP8ie++J/zddhaDgzmiEX0rPgC4VHjh8SYvGPtrxeO3PS5df/5FJxvVthf9RRp/cp8ecgy3vv2DPMdOYtn+mjl65+AuhVnS+FBOgUm0SF1twkvvrHqxE7ST7nsRbY6wgzopFyWAAJo3dkoBAAAAAAAg7liUAgAAAAAAQNylTflel5deMHn5sW8Z7ttnkHT9gyYcY1Q1N/woja9wsMe2Q+9O8hzvz14pjd+3Zws5htoZWgyVy7XSO8tfUyaNzwvpZYxZQQfd98RuQGrpnTcHHfw8Pr9+nyEqbuUHkFoeenOuCQn3MC995DLp+vfeN92oRl9znzR+cLRcjuH0mcvlOUbmhqTxa37Ujx7omRuUxmcKnRxdiTjovheurU6C7nsOOgCKU0QcdM4LOyjfU8NwUUGoTuHgqTRiSv5zHGoMeghIQ+yUAgAAAAAAQNyxKAUAAAAAAIC4Y1EKAAAAAAAAcZc2Z0rteeJE48vIjHn8sqf/KV1/3sBDjKrlE9qZCB2/e0eOIWerDvIckz9cII1v1ae9HEPEzJHGV66skmPwV2tnSuWHCuUYskMOzpTK8Cf8HCQVZ1IBwIZddddIU5CdFfP4a45/QLp+SZ1+bs7YKT9o4yOvyzEsn6O/1rXr3VIa/+PMVXIMg3K0fzpkiWdSucgbopFwUpxLFREPdKpxcKZUnXiOZJ2Dg5BcHGUZlU90cnAYUxKIODgcK+BLjecCzUvi/zUIAAAAAACAtMOiFAAAAAAAAOIubcr38tp2Nf5QdszjJwR2lq7/9N0fG9UWe20njR/0RZ4cQ+YAvXTuiSc/lca32KWLSbTKVZXyHNGy1dL4vMJucgx5WfqvAH9AW9v2Z2gtrr05UqT8Ti0jdFGSAABrO61uHxPMiD1/2Db4P+n6e/bUStask1+eJY0/dtUbcgzly/rIc7Qf1FkaP+2LZXIMOXXa0QPZeQ5e88W8wcVrZdhB+V59rVa3FnZQOqfOEXZQLuZiDpWDpxL/L+rg+xkRyyn9VCA2O+yUAgAAAAAAQNyxKAUAAAAAAIC4S5vyvc9vOdQUFBTEPL7o4LHS9euryo1qyiOXSeOLFm0tx9Cyo14CWLbsJ2l8Tu/+CV+NrV5TI8cQKVsjjc9rq5es5SRB9z0XpXd0zwOAP8aLd9xrfIHYy6Xuu/8E6frBDj2Maum5U6TxX3w/T46hvlsneY72O2j5z+o79KMkAqVLpfE5ebF3wnZVvheu1TsoR+rr5DnCYve9Wgfd99Q5Ii6677ko90qC8js1BidVjJStpZSIg5+J5lLKyE4pAAAAAAAAxB2LUgAAAAAAAIi7tCnfe7L/bibbF3uJT3SrQxPe6WP72tnS+MjRx8gx+BZOl+eoKVkhjc/ocbAcQ3ZA28tYXKVv246UrJLGZ4pd71x13wuIcSRD6Z2LGFzMQfc8AMnmiDEnmlBO7KX7T/RoK12/U0GWUUUjb0njvyzRS/b9GUF5jqwtBkvjy+vvk2OIrlwojW9d2Cbh5Xv11fqRGpE6vfteuD7x5Xs1avmeg/KisIM51NI3Fx3jqJ0DYsdOKQAAAAAAAMQdi1IAAAAAAACIu7Qp31tVGzFZwq7Kc/9xrHT9l6bpnVu+v+EGaXyLGx+UYwg9erU8R7i2Whpf36q7HEOe2DGu1MGW6XDpaml8Voa+TTg7pP8K8ItxqNvwk6UEEABS0fXVL5gCX+wd03pdkS9dv32/zY2qaOBu0vjqT1+TY8ht21WeI9x1K2l8nYMKpfrFc6XxHVp0lmPwB7W8IRrRc7hIvV6+F0mC7nthsf4u7KJznoMawCRovuemex6QptgpBQAAAAAAgLhjUQoAAAAAAABxlzble6d+9pgpyI+9e0x07pfS9Y88abhRTe1/hjQ+Olrremft9Obn8hzGdJRGr4rEXkbQoDColXuVu+h4srpYGp8tliBa+SnSfU
+dg/I/ANiwq8543ISEe5hVOx8iXf/7KXOM6viLTpfGd3hUf61s1WOAPMeSSI5JtKqFC6TxnfvpjyEjlG0SzUX5ntx9Tyz/8+YQY6hzEIOLEkCV/iiSg4tnUv520IQQMWCnFAAAAAAAAOKORSkAAAAAAADEXdqU7+00/nsTCMW+ZficBy+Trj/yLq1biTWhWOta983bP8oxdHx/oTxHsEc/afzCMn3LdMugth77U2WdHEPNmnJpfEFA3x+b66D7XkZILZ3T18YpvwOAP8bf9t/M5AVjf62IjtS6Fz9855NG9Y+9e0njZ3QukGN4u28beY5vllVI47P8et5QNn+ZNL79IP0Ihoys2I/jcCUSCctzhMNi5zsn3fe0ORw0zjMRB+V7ahfBZKg5c/E8BJLgcQCxYKcUAAAAAAAA4o5FKQAAAAAAAMRd2pTvLfr8HePLiH3L8IzSGun6L5/2gEl0ydkP07+TY/hKLCG0clp3ksbPWqltX7faiF3nZjooIawpLpPGZ4T170WWgw5+ave9gIMY5O57gcR3AASAZLT08rtMTl5+zONvjc6Urj/v8P2Nqt0XT0vjo0dtLccwYjst97GmzVsjjc9z8HpbNl/r5NwuTy/fC2RmmUSL1Ol5YEQsv3NRvqd230uGznkuSt9cPIyI3PuO0rtk4uInm+/oxmOnFAAAAAAAAOKORSkAAAAAAACkb/neddddZy6++GJz5plnmvHjx3sfq66uNueee6559NFHTU1NjRk+fLi5/fbbTVFR0SbP//Ck80yusP28+y2LjeKm2z82qiO3aCuNH//TN3IMi6rq5TnyO/SUxn+1qESO4YAOWueWWrEDjlVTrHXf89VWyTHkBPWSM79YDuBz0A0oVaglgFEH3YAAND9/ZA416rR/G19GKObY7p3zllH894dPjOrzw/4hjd9+3KVyDPu0bS3PcfrjX0njR2Tqr/mlC0ul8UW5sf8sNQgmQfc9F6+34XptjmgSdK1z0jkvOSoAAaT7TqlPPvnE3HnnnWbLLbds8vGzzz7bvPDCC+aJJ54wU6dONYsXLzaHHXZYwuIEAABIJuRQAACgOUv4olR5ebkZOXKkufvuu03Lli0bP15SUmLuuecec/PNN5u99trLbLfddua+++4zH3zwgfnwww8TGjMAAECikUMBAIDmLuHle6eddpo54IADzNChQ83VV1/d+PHPPvvM1NXVeR9v0K9fP9O1a1czbdo0s9NOO23SdQrOP87kZcT+cKMPPmsUA/83yKh2vOBAaXz4pnlyDHqfD2PadIq9jNL6Vtw6bh3dUSzfm75UjqF6TaU03lenjbeyg7nyHFliCWAgoJfvqd3z6Jz3C59fu1cRjbj4LQEgWXKorjvsaQKZOTHH+M6HLxjFHpMuMqq3Plgoje/eZdNyzg3ZLKqV7FvL5xdL4zuKnYet8mVa7tHOQQlhKCf2n0dXIvW1CZ8jKbrvOXjJj4glhF4cYhmhgxDkDn4uqhhdPA41LXeRBZKVp5+ELkrZcw6mT5/ubT1f19KlS00oFDItWrRo8nF7FoL93K+x5ybYtwalpfoCBgAAQDJxnUORPwEAgLQq31uwYIF3IOdDDz1ksrKynM07duxYU1hY2PjWpUsXZ3MDAAAk2h+RQ5E/AQCAtNopZbeWL1++3Gy77baNHwuHw+add94xEydONK+99pqpra01xcXFTe70LVu2zLRv3/5X57XdZ84555wmd/psYvXw5J9MSFiDe/3yN43i3Qt/2UIfK99hF0jjc/57nRxDgdhpzerTo5U0ftYPq+UY8js3vXuciK2pNaXatm1fjd4BMCdYIM8RSoLue2rJmQuUAAJozjnUr+VP0/7exRTkx17yPmPxAKN4ZuzrRrVA7Bz89MwVcgyj66bJc6xeVJrQ4xOslYvKpPGFWfprZWZ2RsJzBhfd99Q5Ig5q5+rFeq86ByX7aumdpYYRdVI8RzdpoNktSu29997m66+/bvKx4447zjvz4M
ILL/QSoWAwaN566y1z+OGHe5+fNWuWmT9/vhk8ePCvzpuZmem9AQAApKI/IocifwIAAGm1KJWfn28GDhzY5GO5ubmmdevWjR8fPXq0d9euVatWpqCgwIwZM8ZLpjb1kHMAAIBUQQ4FAABSRcK77/2WcePGGb/f793ls4dvDh8+3Nx+++0xzXXVXSNNQXbs5y7856YXjSJ4/a1Gdfsni6TxHQb8ss0/Vr0+eUyeY7M+baTxH324QI4hv0uRSbSqNVXSeH+dNt7KETvnWdkhsfueg5JQtXTOnyKldy5KCF2UJABInRxq3KC/mixf7L+nRy/+0ii+bLm5UXXPCUrj//f2D3IMB1W9Jc9RseLXj6/YGK16tZRj+G6u1gEw38G/PELZwZR4rQyL3fdcdK3Tu+85KL1zUTkHZyJiOWXAp5cxRtVuig5KKR2cLoLmuij19ttvN3nfHt552223eW8AAADYMHIoAACQNotSFRUV5rrrrvPOKrAHbUbWOV3uxx9/dBUfAABAyiCHAgAAEBelTjjhBDN16lTz17/+1XTo0MH4HGzT+6OdUrOPCfpzYx6/9aEl0vX/9pC2fd2aO0vr/rLr4K5yDH0/0Ld+F3TSOt+Vr1wix5A/uJ1JtLqKOml8pELrwmPltA4kvvueg98fdL4D0Fw0txyqa3aGyRF+xw69erJ0/cvb5hhV9+07SONv+WKWHMO8Rd/Jc9TUaK91LXfqKMew+qU50nh/5Ro5hsysjJQo34uo5Xv1et2bWn7nonzPRfc9dQ4XJYTqHJQxIp3F9Fv9lVdeMS+99JIZMmSI+4gAAABSFDkUAADAL2La4tCyZUuvmwsAAAA2HjkUAACAuFPqX//6l7nsssvMAw88YHJy9G3V8fDKXfcaXyAU8/jS97XueUUHjzWq+qpyafwJ51wmx9Buj97yHK1aZkrjq9Ysk2MIdtxMGq/3izOmprQ24eV7mUV62UhOEnTfU7vnUf4HIF6aWw51wIx3TUFBQczjjxtymnT9/W8bZVQZRdrxBcXnvyvH8P33K+U5It20sv9W/brJMZSL3dr8FavkGDLF7nv+jNj/PdAgXKt3QI6uc57cJscQ1sYnS/meiy6Case4VOHkaUjuinJsIvWvV7y6EMa0KHXTTTeZH374wRQVFZnu3bubYLDpi8P06dNdxQcAAJAyyKEAAADERalDDjkklmEAAABpjRwKAABAXJS6/PLLTXNzxJgTTSgnL+bx0/fbV7p+dXlfk2hbZ2vlf1Zk2J7yHBmrtXbXdRUlegydtPK9kIO9jJU19dL4SHmxHIOLx5Etlu/5HMSQDOV3LmJQ53DRDQjAH6u55VDbnv6I8QezYx7fb9jh0vXn77qrUWVlaK8z4do35Bhml2sl+5bPr5W7h3punvDyPVOqlzHm5+akRve9Ou1nIuqg7K1W/H46Kd9zUHIWjia+7C0qT0LdHNKX1FP1s88+MzNnzvT+vPnmm5ttttnGVVwAAAApixwKAAAgxkWp5cuXm6OPPtq8/fbbpkWLFt7HiouLzZ577mkeffRR07ZtW9dxAgAANHvkUAAAAOKi1JgxY0xZWZn59ttvTf/+/b2PzZgxw4waNcqcccYZ5pFHHjHJ5rqK50xBJPaubxe/s0C6fu9zLzCqVQu0GOre/K8cQ3Do3+Q56j9/XRtf7aAMsbBIGp8d8CV8C3ykokyOIRTQO9+FMsTuew5iULfiJ0P5H4D00NxyqNqSVcYXzIp5/OR//kW6/oETPjCqAZu1lsbntO0ix7CmTu+UFswplMb7O+tHSdSJFUrhVUvkGFrl/fz3JpHd99TOeS5KAJOh+169g9q7MJ3zgLQX06LUq6++at58883GZMoaMGCAue2228ywYcNcxgcAAJAyyKEAAAB+EdMWhUgksl4LY8t+zH4OAAAA6yOHAgAAEHdK7bXXXubMM8/0tph37NjR+9iiRYvM2Wefbfbee2+TjK48+0kTim0NzjOix8/nPsTq8JN2NK
rbpmrnTMx48J9yDIV7nyHPUfD5lwnfMh0u6CCNz3ZQclYhtgqJVJTKMYQCie++FxC7IlmU3wFoLppbDjX17lNMfn5BzOMXHHuYdP2vlvQxqsV9BknjOw7cTo7B/8YD8hw5rTtJ4+tbdzeJFl61VJ6jdd5W0nh/UC/fc0Et30ud7nsO5hDjSIYCwmSpYowmw+OgEWHaielf1hMnTjSlpaWme/fuZrPNNvPeevTo4X3s1ltvdR8lAABACiCHAgAAEHdKdenSxUyfPt07E+G7777zPmbPRhg6dGgs0wEAAKQFcigAAABxUcry+Xxmn3328d6ag+MO7G3ygjE/XLPFhPHS9SM/vW1UnQ4aLo1/74JFcgzRuavlOXb69Htxhp/LHRSra7S9pXkZevleldg1pbasMinK93LE8j37uyQVuu9RQgggFXOo2Xvsa3KE328vzC2Wru/bWeu0Zi2f8b40/oAL/i7H0H5S7Dlog8JOPaTxS6tNwlUvXyHP0a5f7N0grYCD7nsuROprpfFhsfTOm0Ostap1EUOKdPDjREAgdhv9CjlhwgRz0kknmaysLO/Pv8W2NAYAAAA5FAAAgLwoNW7cODNy5EgvobJ//q27fyRUAAAAPyOHAgAAEBel5s6du8E/NxcLL5lkcvLyYx7/zxe0x3zWLdcb1V6PaR0AJ5Xo+7Z/mL5YnqPH19rW7YyWveUYVleHE16+t7S6XhpfV6F/P3NddN8Lqt339OfS59fmoPQOwB+pOedQ7ywsM5m+2H/H7tk2R7r+5/sfaFRfPP+0NP6EnbrJMcwryJTnaNs59i6I1oKSGjmGoJg2VCxZJcfQctugND4QyjbJICJ333MRg1b2FnbQETsZuCghTIaWcS46GQaS4HEg/cSUZVx11VWmsnL982yqqqq8zwEAAGB95FAAAADiotSVV15pysvL1/u4TbLs5wAAALA+cigAAIBfxNQKJBqNbrBj1pdffmlatWplktHxp99kfEK3DbVDRvdZ+nbljjfcIo2vc7Az9ceZeteUn5ZVSOODnQvlGOaLpYwFDkrO5qrd90q159EqdNArJCiWAPoD+nPp96fGVmPKCIHU19xyqMveuM4U5MVeghdo2U66/pb5A4zqgIWlWgyhNXIMZocO8hQDe2o/HzNWrL8YuqmyxdfsqhVaN0arZZZYvpepde9zJSqW74XFPNKKiN3zXJS9uaicc1G2plJDiJjUKCHEL9TvqM+kj01alGrZsqWXSNm3Pn36NEmqwuGwd+fvlFNO+SPiBAAAaLbIoQAAAMRFqfHjx3t3+I4//nhvi3lh4S87VkKhkOnevbsZPHjwpkwJAACQ8sihAAAAxEWpUaNGef/v0aOH2XnnnU0wqG2fBQAASAfkUAAAAMKiVGlpqSko+LkV7TbbbON1ibFvG9Lwdcmk+057mUBm7GcirFqwQLp+p2/fMqq3XvheGt89R0+AV/00W55jUVWdND6roK0cw4KSDf/sbqwuufpzWbtGi6GubP3uTZvKV6edrWVliudLBBycz+UTz5RycZaTL8B5UABSL4ca/laWycjKjnn8gM1iP8/TutVMMKojDjxMGl/96gNyDN2GbiXPsWvvNtL4j+aulmNoJ75mV63Uz7Vqma3lYH7hjFmXomHtTKmoi/OcxDlcnCkVdnAeVDja/M+kAtJZxqachbBkyRLTrl0706JFiw0e0tlweKc9GwEAAADkUAAAAPKi1OTJkxu7wkyZMmVjhwEAAKQ1cigAAABxUWr33Xff4J+bi/dP7mwK8vNiHj+lZkfp+t3bvG1UN93+sTT+yC30srfyZXPlOVbXaneBc9t2lmOYu1IrfevbOvZShgbhBVqL6roKvfTOV18jz5GVEUho6Z2rOVKBizJEtUU1gNTKob568WnjC8Re7vRVSHu9HDZHP/7g3B/OlsbPPPJdOYZt//1PeY7t2mqlnfe995McQ++gVr5XuVI7usBqJZbvZYg/k66or7fhev312u7QlG
JwUUJI6RyQ9mJ6ZXn11VfNe++91/j+bbfdZrbeemtzzDHHmDVr1riMDwAAIGWQQwEAAIiLUueff753aKf19ddfm3POOcfsv//+Zu7cud6fAQAAsD5yKAAAgBjK99ZmE6cBAwZ4f37qqafMQQcdZK699lozffp0L7FKRuMG/dVk+WLfcnzy2BHS9fOvnWRURffsII3v/6ft5RjCT+klY3XiLt3CNrF3UWzw43Kt+0tWyyw5hlpxy3NdhV5656t30H0vQ9sGHxS791n+DRwavCnonOeOz69/P6ORiJNYgGTU3HKoa248z2Tn5sc8/srrnpSu//aHeqfZEVPvk8Z/9tEiOYYteu0sz9FTHL9mmd75rk1Ie72sXKV/P1uJMQSFbpLJVL7notw+XB9JfPc9By/5chdBByWE6lPhoorRRSGk+jgCDk7UUH8kyOqbn5j+9RAKhUxl5c8vKm+++aYZNmyY92d7iGfD3T8AAAA0RQ4FAAAg7pTaZZddvC3mQ4YMMR9//LF57LHHvI/Pnj3bdO6sH0INAACQisihAAAAxEWpiRMnmlNPPdU8+eSTZtKkSaZTp07ex1955RWz7777mmTUNTvD5AidqcZf8Kx0/eLOfzOqo7ZpL41vf/QoOYbAC/+V5wiK2zpbFsXeRbHBkpUV0vi8otyEb02tKXVQvlenz5GZoT0XoQy93EuozP3/8S5iYLMwgNTLoYY+daXJD8Xe7azllf+Wrl/x7j1G9em1j0jjZzh4vZ2+VC9b28lo3fNKxM7DVquCTGl89Rr9ucwROwBmBANJUqqe+PK9aCQ1uu+5KL8D0MwWpbp27WpefPHF9T4+btw4FzEBAACkJHIoAAAAcVHKCofD5tlnnzUzZ8703t98883NwQcfbAIcGAwAAPCryKEAAACERak5c+Z4HWIWLVpk+vbt631s7NixpkuXLuall14ym222mUk2B8x41xQUFMQ8/qc2W0jXv/OB143qhguOkMYvbDlQjiGvqEfCu6YM6BT797HB+9MXS+Nz2hWaRKstr0uK7ntZYvc8N+V7Dlp9JAFKAIHU19xyqFvv/MSEYuuL47lp8P3S9Ssv3c+oJv7j+YR2DbZe/m65PMe29dOk8RUr9HIv9fiC+d+vlmPIFcv3glnJ8Vqrlt9F6mv1GMSyt/pIapTeuWj6G5V736VGLuuiHDMgdtVG8xPTb/UzzjjDS5oWLFjgtTC2b/Pnzzc9evTwPgcAAID1kUMBAACIO6WmTp1qPvzwQ699cYPWrVub6667zusmAwAAgPWRQwEAAIiLUpmZmaasrGy9j5eXl5tQKGSS0Tan/s/4g9kxj//+rr9K17/ypp/PjVBUD50ojX9MLFmz2vTsI8/RKTv2Lj5Wx8566dxrk3+Uxmd3amkSrb66Xp7DV++i+17iy/cC4hwuyub8KVJ6pz4XLroBAamsueVQ5563m8nPjD2u8aO18r0TlnxpVMvOfUYa3z1Hy1us57/Qc7BTqr6QxleXal2crYLO+dL40pkr5RhyMnxJ0H0vkPjyPQevt5Ek6L7npoOfPAVSiFqWakUclFOmyOkicRHTv+QOPPBAc9JJJ5mPPvrI+6bbN3vX75RTTvEO6gQAAMD6yKEAAADERakJEyaYXr16mZ133tlkZWV5b3bLuf3YLbfcEsuUAAAAKY8cCgAAIMbyvUgkYm688Ubz/PPPm9raWnPIIYeYUaNGGZ/PZ/r37+8lVMmqrqzY+IKxdxp7eYsTpOt32v4Do7rpvXnS+Dc+WSDH0KNvW3mO7mLnllCbPDmG6tJiaXz2Ni1MotVW6F1XIlUV8hxZLRJfvmd/B0njU6T0DkDyaq451KO7nmOycmN/3a26cap0/ZEPfm5UB+VrZZFbbqaX7E+avVSeY/nKn6TxdRWxH2PRIH+LX85Ci0V5/Q9yDP6qEml8MDM5yvdULsrlI/VamVNtvd62zkkJoFiulRQdABMfAtA8FqWuueYac8UVV5ihQ4ea7Oxs8/
LLL5vCwkJz7733/nERAgAANHPkUAAAAOvbpC0K//3vf83tt99uXnvtNfPss8+aF154wTz00EPe3T8AAABsGDkUAACAuFNq/vz5Zv/99298397ts9vOFy9ebDp37myS2dS7TzH5+QUxjx903B3S9a+/9CijGnv/Z9L40iXz5RhOvOgQeY6iLbQSwMIWmXIM1SVa95dQ23Ym0WrL6+Q5otV6+V4o4E9o9z7LT3sLAEmuueZQ111ys/EFYi9/W3DNgdL1Jzz1klHdvL9WGtl2yx5yDMWT9S7My+askMbXt+wqx5DfpUgaX+6g3MtXXSqND2VmJEX5XqS+NvHle2rZW5LUnKldBJ3EkPgQkiIGIBab9K/B+vp670DOtQWDQVNXp//jGAAAIFWRQwEAAKxvk24V2LbFxx57rMnM/GWnSnV1tdfGODf3l8Orn3766U2ZFgAAIKWRQwEAAIiLUrZLzLr+8pe/GMWiRYvMhRdeaF555RVTWVnpdZ+57777zPbbb9+YxF1++eXm7rvvNsXFxV7b5EmTJpnevXtv0nVm77GvyRG22lYU7WoUo1otMarTp09O6DZha8/ux8lz5G2/ad+7dbXI1su9aiu1zi0ZrbeUY1AfRX11fVJ038sQS+ecdN8TY/D7U6MTD4Dk5TqHilf+tP2RR5uMrNi75n47YqBR1D52iVH1v3mMNN5foHWcs6qfulue48c1sXeR9uhNBE1ut07S+KqwXl/krymTxoeyg0nxmq+W30UdnEcXFeu9nHTOS4KaM7WMMZXITwUnaqSUSDQ+4zdpUcomOy6tWbPGS5L23HNPL6lq27at+f77703Llr+8at5www1mwoQJ5oEHHjA9evQwl156qRk+fLiZMWPGetvgAQAAkpHLHIr8CQAApAr9pD/B9ddfb7p06dIkUbOJUwN7l2/8+PHmkksuMSNGjGjsXlNUVOR1rjn66KMTEjcAAECikD8BAIBUkdBFqeeff967a3fkkUeaqVOnmk6dOplTTz3VnHjiid7n586da5YuXep1qGlQWFhodtxxRzNt2rQNJlU1NTXeW4PS0p87dLy/qMxk+mIvEzryX8caxccnnGNU4dreCd/m29VXLM8R2W5baXzGqp/kGOqryqXxgdbt5RhCYslZtYMONi667wXF6rtkKN9LltI7NY5kKCcAkFr50/NbLzIFudkxx9rjstlG0XvPg4xqToch0vjsoF6P4iIHW1SlHYrv8+uvtxkdukvjq8IOcpdyLRfNzcyRY0iGvCFSpx/LkSrle+oUDqpK5bI3ezNBR+0cmif91Unw448/Np5v8Nprr5m///3v5owzzvC2mls2obLsnb212fcbPreusWPHeolXw5u9kwgAAJAqyJ8AAECqSOiiVCQSMdtuu6259tprzTbbbGNOOukk7y7fHXfcEfOcF198sSkpKWl8W7BggdOYAQAAEon8CQAApIqElu916NDBDBgwoMnH+vfvb5566invz+3b/1witWzZMu9rG9j3t9566w3OaVstr91uucE/X7/OFOTFvl3X30rrdHbBqPlG1e7PWglh5arFcgyRz1+X58joP1iLYcFMOYZwbZU0PprfNuHley462ERrqvXvZ0DtvqdvgQ8E/Anfhp8MW/kBpId45k9XHnKtdPzB6m2GGcXDVx9uVJe8pOUNg3rq3fdCeXrru5I6rfQtIytPjiHQvlvCy6QiJauk8YU5hXIM/oxQwks6XZTbh8VySheld/UuSgDpngc0awndKWU7x8yaNavJx2bPnm26devWeGinTazeeuutJmccfPTRR2bwYG1hAwAAoDkifwIAAKkioTulzj77bLPzzjt728//9Kc/mY8//tjcdddd3pvl8/nMWWedZa6++mrv3ISGlsYdO3Y0hxxySCJDBwAASAjyJwAAkCoSuig1aNAg88wzz3jnGFx11VVe0mRbGI8cObLxay644AJTUVHhnZdQXFxsdtllF/Pqq6+arKysTbrWsDcyTW
ATx6ztotuON4ks1bL22ndLafz0L/WSs6VvPi7P0WrIL9/fWPinPinHEKnXOthEclomvvuei24lTrrv+ZKg+548BQA0G3HNn/q1NrmB2MuTvzn2b0YxeMGrRnXMm9pr3fy+PeQYCjr3kedQ+9YFc/WytXDBL+WgsdB77xkTFsv38rP074VP+DvhiovyvVTpvqeKJEEMLlDFiOYqoYtS1oEHHui9/Rp7t88mXPYNAAAA5E8AACA1sL8AAAAAAAAA6bdTKl6+fvkZ4wvE3inj9eXa1u+TRvQ2qsJh2nbj2/L0TiE/XTVbnmPNGTXS+NbfzTOJVpelb4HPFrvWVYkdU6xIZaU8R4ZYvpfpoHzPL8ZA9z0A2LAOzz5v8vILYh7/YmSpdP3Ju48xqlUF20rj66v0UveuW24uzxEUT4LIblkkx1Cb09okWqS8WBpf6CAfDjjovpcM5XuRFCnfiyRB3ZoagYvS1mTg4jshfzv1U3MQZ+yUAgAAAAAAQNyxKAUAAAAAAIC4S5vyvWtuPM9k5+bHPH7nG7SSsS0nTjCqyPevSeOP32EfOYYPftS2TFvRRdocO81aLMdgTEdpdEmNvmU6O6CtCdc62O5cV1mdGt33fIkv33MhWeIAgAZDT55ofBmZMY9/bO4L0vWfm7PaqDJ2zpPGly6aJccwaORe8hxtM7W0Pa+N1jnPWl2l5z+qmtVaHtmiXTAlXq+Tovueg7K5ZCgBdPE4AMSOnVIAAAAAAACIOxalAAAAAAAAEHdpU7439KkrTX4o9u26rR58Trr+hC+WGNXuN9whjd9qkt755b/ltfIci2evlMb3mbNGjsGf0U0aX1YbSXj3vdUOYghX6d/PzCQo3wuIc/j8rM8DwIbkF/Uw/lB2zOOfeUMrv+ueo5daddluT2n83Pe1EkTrgM3by3OsyNbS9hZtc/UYKuuk8S5ebWvWlEvjC7L0nyl/EnTfizgp31NjoOzNXRlicrSMUzsZBpLkcaB54V9iAAAAAAAAiDsWpQAAAAAAABB3aVO+d+udn5iQsAb3Ye7b0vVXLVxmVOEpWgfAni/9V46htF4vGZs9V9vKv2axtm3byuijdeJZKW5ft3LF7ntLI/VJ0X0vT1zaDjoonfOJJYQuJEMnHgBwbfpN+5uCgoKYx3/6w13S9TsO6mpkBw+Qht845xs5hJ06x94BusGX4hxti7Tcx5pfouUNIQev19WrSqTx+ZmBlCjfc9F9Ty3VUrv3WeGI/m8LNQz1eQD+CFEHcyT+X0gbh51SAAAAAAAAiDsWpQAAAAAAABB3aVO+d+55u5n8zNi32vZ66UmTaD9UaCVjMx9+T44h6GAP4MpFZdL4xdV62VpGtraFfXmF3rUuT+wYV+tgy3R9dY08h08sIwyKXQi9GHzaHP4kKP9zgRJCAK49NmAvk+2L/XfLXxZ+YhLtdJ+W7j68xdZyDC1XfSfP0X7rImn85p1jL8NsML+kKqGdh62aYu0Yh7yQ/s8ff1Dv4JcM1PI7N+V7DuZIgfI7Fw8hklYFX0gl7JQCAAAAAABA3LEoBQAAAAAAgLhLm/K9/w0522Tlxl6y1atylnT9NYsWGlWXWe9I4z/5XO8A2D5L/5EpW/KjNH5FjV6+l5nXShq/vEIve+uSrT2XtasclO9V6N33fPVaKWPQQelcQCyFdNG9z0XpnC9A+R2A5FJWHzF1Qon0HuM+lK6/Ze82RjXOvCqN33+3EXIMVe8/Ic9RtF1fafyWnQrlGD4SOyi3EzsPWzWlWu6SF0qR7nthvfueWn4XSZLyPTkGF6VzKVBCCCQKO6UAAAAAAAAQdyxKAQAAAAAAIO7Spnzv35eNM75A7Fttf5o8Qbr+HR/r5Xt7LX9MGv/g2/PkGPbroHWtsypXLZLGl9RF5BiyCrVygEXFetlbr4JQwrca11frXQR9Ya0rZNDBVn61/M5F+V6qUMsQoxG9nABA8jj58ydNQX5+zOMv2u9a6fpzOvUxqj8velYaP+
qV0+QY5l2gdyHsdcJIaXzfNrlyDI98tEAa31Mst7eq1mg5WL6D7nsZoWyTaC5eb9Xyu2g0Ocr3KJ0Dmjd2SgEAAAAAACDuWJQCAAAAAABA3KVN+d72Rx5tMrJi37a8+vSjpOtfMu42o4pc/Fdp/INvXy3H0HVIZ3mOujml0vhqB9t8cwq1MsSlxVVyDJkFmdL4sIst0w7K90xYmyMzQ/815BfL7/xCZ6kGdM4DkIp2uGGG8YdyYh7feYcDpOsv/Pglo3r92xXS+H/VLdBjeEefo89120njO2frHePWrK6Uxhc4KN+rKdU6ILdKle57Dsr3opFIQrv3uSrfC4snezjpIijm5UnQhDBl6Ae9GENWH1/slAIAAAAAAEDcsSgFAAAAAACAuGNRCgAAAAAAAHGXNmdKPb/1IlOQG3v71rMv+Fa6/qlGbyfccuJj0vheeTfIMXQbrp1n4JmzxCRaXgutle+SYq0dsZszpeQQTF1VvTyHTzxTKugPyjGEAqmxvu7zU8Fu+fz+hJ+TAeBnS7+ZZnwZsb9effbk5dL197vMyNZ8MVkaX/rif+UYvl2pncVk7ZDXSRrfOqrnLuUl2hyFQRdnSml5R3aGfo5kRig1/gmlnkvl4iwmuOPguFmjTuHiRyKg/xVFM5Ma/5IDAAAAAABAs8KiFAAAAAAAAOIuNfaeboQrD7nWZPpiX4Mb0lor93r0ye+MqviwH6TxR/ZvLceQv+v+8hyBux9K+Epqfivt+1lcqm+Bz26ZJY13UZxUV6Ftgbd89XXS+KCD0ruAX9vnK/xqWGsO1vgBpJ7HJ51rcvPzYx4fvO4U6fpXnny9UZU/ppXLz3jofTmG+ZXaa6U1a5WWe+xkfpJjqCipkcbn5YXkGOoqtOcyM8NB3uEgd1HzBrX0zsUcUQe1WmEHc0TEurWwi7o3ADHjX1EAAAAAAACIOxalAAAAAAAAEHdpU743rF9rkxuIvbPV7g9dJ13/7K1GG9WLL8+Uxl8xcmc5hvJO28hzZBa+nvDOLV1a50jjv521Uo4hVKCVELrgovuekbvv6S02QuJWfJ+DGJIB3fsAuJZ11l9NVkbs6eKEKfOk6990ynyj+v7w/tL4156YIcdQ56A66NPFJdL47Wu0TtJWdWlVQo8usJbOL5XGZznpvpf4+/pJUb7noOyt3kUJYBKU36lNf6Ny3zsrNfJZtRwz4EuN5yGdJP43KgAAAAAAANIOi1IAAAAAAACIu7Qp3+vw7PMmL78g5vHnTtM6lhyxfQejuvurKdL4FpedKscwZXG5PEd+UXdpfKuQXqKU1S5PGv/JZ4vkGDJbaDG4UF+tl+/51PK9JOi+53dQvkfpHIBU9MjU+SYk3MPcolDrfDdt9IVGNfgerYPfhHtHyjHkBvTXmXe+WyGNHxWdJcdQU6Idf5DTRhtvlf9YLI3PdtB9LyMYSIm8QS3fiyRJ9z1VEoQApDV2SgEAAAAAACDuWJQCAAAAAABA3KVN+d7QkycaX0bsW8jrKrSOJzfdc7WRHf+4NPzH7B5yCC9O18oYrdadi6TxRZn6j61P7L5XXVEnx5DVsdAkWrhW79xi6sTyvZAv4eV7ydJ9z58EW/mToRzBRUchAG78697jTEFO7B3TMoq6SNe/cO9LjSo3d3OTaL3yQvIcU35aI41fU6l1QrRqKzpK43M6652Hq8Vaq4yofnSB30EJYDK8VkbU7ntixzlX5XvJUAKYFB0AEx9CynDRWTIidkP0J8c/T+Ii8b9RAQAAAAAAkHZYlAIAAAAAAEDcpU35Xn5RD+MPxb5luGLFAun6T0f6GVX7LfeUxj/goGPctK+XynN07NpCGl/USt/6nVmozVFXWarHkCLd96K11dL4rBx9bTwkbqP3+ei+BwAbckLZbiZYnxvz+J3atZaun+2ga91Fz30rjR/uoPSuT9fYO0A3WLVwlTR+9Uo9hwvXtpTG57TTjy6oCms1Y/6aCjmGjKA/JfIGtQQwmiSld+ocyV
B6B6QzdkoBAAAAAAAg7liUAgAAAAAAQNylTfne9Jv2NwUFsW+dfnBGsXT9f971sVEduH9/afzL7+md81bO17d+jxgyRBrfsqdW/me1yNe24tdW6OV7oZbaFngX6qvCCS/fCwb8Ce++50+n9hYAsAle/899xheI/TVzSmutW9s7I/oY1b1vfSqNv2RgWzmGDoO0LoRW+Rwtj1szT8tlrXBWlTQ+u62e+1SFtVIrX12lHENGMJDw8r1Ivdb92EX5XoSyt5TrnJcscSC9sFMKAAAAAAAAcceiFAAAAAAAAOIubcr3Hhuwl8n2xb5NduS9J0vXP+2Dr43q4qsnSOO3uu8VOYaastXyHIO7HiiNb9W3nRxDq2ztR7+uskSOwZ/fMeEryuE6B+V7NdpWfheVc5lq9z0HQfgddNFJhk48ALC2kWefbEI5sXeLffSOx6XrD7hFy32sNQdcp8Uwemc5hpxu3eQ5qj6ZJY1ftkzvOhftonW+y+3QKuHd93y1Wt5ihTJT459Q0Yj2XKZK972IgxgoZQRix04pAAAAAAAAxB2LUgAAAAAAAIi7hO49DYfD5oorrjD/+9//zNKlS03Hjh3Nscceay655BLj8/1cThONRs3ll19u7r77blNcXGyGDBliJk2aZHr37r1J1yqrj5i6/58zFrf99XajyN/tWKNq/cmj0viyJXMSvs3X6tcmWxqf1V/fAp8b0bZu14sla5a/oLU0PuCg7K2uuj7h3fcCwt/Lxjnonueh/A9ID/HMn65Y85QpqM6MOdZul4w2iptm6b/fQ3lax7fCg/8mxxANabmPVV89XRq/rEYv2VcF2xTJc9SKpVa+2nI5hoxQ4kv21c553hzhxP9MJEP5HtxxUsVIWp92ErpT6vrrr/cSpIkTJ5qZM2d6799www3m1ltvbfwa+/6ECRPMHXfcYT766COTm5trhg8fbqqrtX8IAwAANEfkTwAAIFUkdKfUBx98YEaMGGEOOOAA7/3u3bubRx55xHz88ceNd/nGjx/v3fmzX2f997//NUVFRebZZ581Rx99dCLDBwAAiDvyJwAAkCoSuii18847m7vuusvMnj3b9OnTx3z55ZfmvffeMzfffLP3+blz53rb0ocOHdo4prCw0Oy4445m2rRpG0yqampqvLcGpaWl3v9P/vxJU5CfH3Os/+wyzCj2+/Nwo/p87LniDJu2Zf+P0qZe6+AX2Ux/HIHSpdL4sIPOLYFCtXzPl/At8C7K94IO9muGkqD7XqqUziVDOQGA5Mmfrjr3aRMSNtbfMnVro+gx6ec4pDkG7ymN/z6kHxuQHUx8PcqKGr1k3+fXXm8DrdsnPHeJVurle9nBnJTIG9TX7GTpvqdyEUI4mviyN3tDQpf431VIPwldlLrooou8pKdfv34mEAh4ZyRcc801ZuTIkd7nbUJl2Tt7a7PvN3xuXWPHjjVXXnllHKIHAACIP/InAACQKhJ6ptTjjz9uHnroIfPwww+b6dOnmwceeMD8+9//9v4fq4svvtiUlJQ0vi1YsMBpzAAAAIlE/gQAAFJFQndKnX/++d7dvoZt5FtssYWZN2+ed7du1KhRpn37n7f4Llu2zHTo0KFxnH1/6603vB08MzPTe1vXDjfMMP5Q7Fttp16uld+1PGJzo7r55EXS+IKhB8ox1JRppXeW76cvpPHBzbaQY4isnJ/w8r1oTmHCu+9VqXuN7eOoq0t45zx1Dr+DUkgXkmErP4DkF8/8afSIPiYvGHu6eO/BVxjFyh5DjOqSMy6Txj/w2UI5hs07xH6ERIOMrFxpfHm93kHZnxGSxgda//LzGCs1dYlU6CWh2SH9+5kKr/mRJCnfq0+CEkAAzXSnVGVlpfGvU5tut6FHIj+/aPbo0cNLrN56663Gz9vt6raLzODBg+MeLwAAQKKRPwEAgFSR0J1SBx10kHcGQteuXc3mm29uPv/8c++QzuOPP977vM/nM2eddZa5+uqrTe/evb0k69JLLzUdO3
Y0hxxySCJDBwAASAjyJwAAkCoSuih16623eknSqaeeapYvX+4lSyeffLK57LJftllfcMEFpqKiwpx00kmmuLjY7LLLLubVV181WVlZm3Stpd9MM76M9belb6z5519jFHVX/d2oFlRpXVO6bKWXva2cv1yeo3z6NGl8zp/OkmOIfDc94R3GItla+V7Inxrd91x0EQxlaFvg6b4HoDmJZ/70w4W3mZy82EuVZj2tld+16bejUR2/pdbtdqerZ8gxfN9Li8HKbql1rqtzUOGUkZUnjY/mtpJjUIsQo5Vlcgx5WRlJ0O02kvjue066vSVeOEUeB9BcJXRRKj8/34wfP957+zX2bt9VV13lvQEAAKQ78icAAJAqEnqmFAAAAAAAANJTQndKxdNTd5xrcvNj335+5KXPSdc/45n3jWqz3KA0vsNuPeQYXpqmr2Mu/+xZaXyLo7Wt41Zo4fzEb5nOzE94+V5dNAnK9xwsjavd93wOYqB8D0AqOvmMm41P6Lj21Wla+V3bEXsaVfUj10njF36td1qrr9O7MOd32MwkWihXO3og7KB8T+Wi+15OSH/N9wUSnzfI5XtJ0n3PxRyJ7kSY+EfwMyoZkQjslAIAAAAAAEDcsSgFAAAAAACAuEub8r2M0/9iMjJif7jLS3tL1/+hos6oTjywlzS+xdYd5RhKKmvlOZa+uEQaX1mmP5etFywziVYfzEmJ7nuRaq18L8PB48jM0NbX/Q5icIESQADJpsfOe5tAZuyvV+Fzd5Ouf0FU7/o7+ZRXpPFlBdvKMfiFEsgGnbcYII0POnipC+W3lMbXZRaYRIu46L6Xpx2pYQUc/EwkunxPLVlLlvK9SIrUrOmHiyQH9bvh5NuZHP80SBvslAIAAAAAAEDcsSgFAAAAAACAuEub8r1H31tgQsIa3M7j/iVd/+BVnxjVFhefIo0PlM+WYzhwQFd5joU/FkvjAysr5Bi2mK+WA7STY6isj6RE+V59tVbSGfAlQfc9ny8lSu+SIQYAqeX90UWmID/2rrd9r3hTuv5TK+8yqrd+0vIOs6UcgilfNleeo8dBQ6TxhUH9NSKroK00vrRGKxdzoa6sXJ4ju5WD7nsp8JrtpPteipTOAYgdO6UAAAAAAAAQdyxKAQAAAAAAIO7SpnzvX/ceZwpysmIeH9yru3T9yIAxRrWg93BpfN4Dl8gxbP/36+Q5bq3UuudVLimVY+i+WNu67fO3l2OoqEt8+V5V2EH3vbr6hJbeWSGx+54vSbrvAUCyuXGH40yWL/bfsfP7ad33npjxvVGVi+XyhV20rndW8bxv5Dl266eVzplMvVwstzD2XNoqqUl8f7C60kp5jvysjJQo31O770UT/+100n3PSQdAyhCBmLFTCgAAAAAAAHHHohQAAAAAAADiLm3K904o280E63NjHn/6HodJ18976XWjmvS61j3vuEc+kmPY9aDp8hwrxM4rPy0skWPYc4lWvufPC8kxVIrle9kBfU25RCy9c9F9L9tB5zu1BNDvoHzP5/enxFZ+AFhbr9ygyRF+N217+J+l65d/NcWoBrXUSs4+3nJzOYbSRbPkObbvWCiNX5Cpp/35LbOl8aurtCMcrKD4kl1bpndxzg3pr9f+DD2XVEXE8j03MTgonYskSR1hgksIjUn8cRQRB2WMgSR4HIgvdkoBAAAAAAAg7liUAgAAAAAAQNylTfne6/+5z/gCsW+TbT9jpXT9D+/80KhWLVwmjR84a5Ucw1ZvvyjPUS1uT13soPte6coqaXygVbYeQ7W2ZTrLQcmZi04hYbF8L+hgaTwols7RfQ8ANmyfr6eagoKCmMcf4tNe6z59tZNRtd+2szR+8e495BjmftpVnqNva60MsbpD7MdYNGjZKkcav7xCyxmsgFj2X+ug+15OMJASJftq9z0XpVpRJ2VrGhchuHguANfUn8p4/QuJnVIAAAAAAACIOxalAAAAAAAAEHdpU7438uyTTSgnL+bxR98wQ7r+A5
P1srdIvbbleW6F3vFk/pufm0QrXqFvu15Zq21XDmTq5Xurq7XvR3ZA31BZ66LjSa32OHxRvWNKUHwufA46ALro4JcMkqGcAEDy2O7UB40/GPtr3tPLH5OuP/jua40sO18afkxeBzmE+3sMkOcoLF8kjW/Zs4UcQ7c2avlejRxDSHy9rauslmPIdNAB2R8MmubORemdizncdK5r/lxUEEaaTcEXUgk7pQAAAAAAABB3LEoBAAAAAAAg7tKmfO+KlU+Y/OzMmMfnvfWCdP2METcaVbhW7BjnYDfl/PcXynMUZGhroeUrtS6E1mqxfC+YFXspaIM1VXUJfR6tsINtvmr3PROpT3gnHheldy46+Kmlc74ApXcA3KqvqjD++thfM//7zgLp+nOrexpVp5DWtW7X0u/kGLr0bSPPEZnzmTS+VR+9DLF3e60UclFxdeLL9yr0GFKm+1443Ow757ko33PRjVpF9z6kM3ZKAQAAAAAAIO5YlAIAAAAAAEDcsSgFAAAAAACAuEubM6WuuuBZExLW4L6p2E+6/vaHHmhUC79fJY3vO+N1OYavHJwF0Clb+7GrXqOfKVVSJ54plVuQ8DOlWmXpf31d1NBH6rQzoXz14plU9vshHpjm4jwoAEhF7915kskviP0175vtH5WuP+auj42q42atpPGPh5+SYxixy9nyHGWfPiuNb9W/mxxD1xbZ0vjvFpfKMXQTX/NrSmuS4kypQEbINHcRB2dKuZgjGbg4pxVIV+yUAgAAAAAAQNyxKAUAAAAAAIC4S5vyvdEj+pi8YOwPd9Cj/5OuP3fyBKO6/UOtrfJ2M9rLMTz49jx5jv065Enja8pWyzFUiXtsQzmFcgyrKrSytf55waTYalxfrT0OX0QrpbT8vsSX71EC6K7FddTBzwQAN2bsNszkCH+v9/ngCen68w+4zqhKFvWXxs9coJcQ7jOqtTzHsjtnSeN7nTBSjqFrYZY0fomDYyD6BbR76rXl2vEJrsr3/ElQvpcMr7dRB0dJhMUSwIiDGADEjp1SAAAAAAAAiDsWpQAAAAAAABB3aVO+98OFt5mcvPyYx3cc/450/exH/2VU5/7lcmm8f9EucgwRB+V7bQe0kcbXrymXY6gWt/lm5uXKMawo1bawh3JDydF9r1brvmci9Xr3PbF0zp8kpXe+gF4OAAAufbCk3GT6Yr+HOfG5ldL1Czr1MaqSBTOl8R/N1o8NOCdUJc/x7hda9+G+3QbIMbTL1Y4OKBZzHytb7L5XV6GX7xWGAklR7p7o8r1oJOIghtQonVO7CDrJyVPjqUwK+k+2MYn/G958sFMKAAAAAAAAcceiFAAAAAAAAOIubcr3Thpzo/EFYi93+viZsdL1n978CqM6pr3WPS+4/yg5hoKMR+Q52m/bVRoffcPFhkpNVo7e+W5Vuda1LiMrI0m679UlvnwvkCmND4jd+1x0AIRbPr8/4SUJQCr45+SbTEFeTszj8096QLr+MeecbFRP3lUijf+pUi/3in4zRZ5j/sIyaXy4lZZ/Wa38Wu5RVablPlau2H2vvjrxxwZY/gw9l0y0ZOje56L7XpiX/EZqFaGLCkK1DFGs8EUCsFMKAAAAAAAAcceiFAAAAAAAAOIubcr3eg4ZZgKZsW8/L7j1TOn6nxbr3UZ6j31cGt/q2ePlGPrk6R3f2g7aXJvgjVkm0XLy9edhtVi+F8pLjm3f4Vpt67avXt/K7/eJ5XsOtuELzamcSYZOPgBSyz4v+00gM/bfLa16bStd//YRfY3qixm7S+ODU/WjC5a/+ZY8x4IqrexseZ2eNxRF10jjK8tr5BjyMrQX3NpyvRwz5KA+KCA+juTovhdOeNc6F+V7ABIr8b8NAQAAAAAAkHZYlAIAAAAAAEDcpU353vvHtzUF+Xkxj7+gz1Tp+ju3yjaqyV8tl8YXfzRPjuGw3i3lObK23EUaHwjNS/hqbE5BlhxDZaVWtpZZoJWsWS6ajahdbHwOtn4HxU48Ls
r3kqFjHAC49u1rz0vdi19++Grp+ksu1bvvnT1S66Bcfqv+ejtv8nfyHCtqtNfbRaV6uXzH6BJpfHWFXjqXlZ2R8O57Tsr3xNwlVXKGaBKU3kXUlnNeR+vEPw6guUqN32YAAAAAAABoVliUAgAAAAAAQNylTfnejTuONllCe6yuOVrHkkP+fYRRXXD8g9L4997Xy97O2VvvglPXaaA0PphTKMeQLW67bpOvb+X/qbhKGh/M1WNwIVwrFgFG9G306i56N933kqMEEABcuv7f55js3PyYx2/xwrXS9e+54yOjOuefWg43ffsOcgwzpy+V56gTq4NmrCiXY9g2PF8aX1Oude+zMgu0Dsirl1YkRfmePyPxeUMydN+LOih7q0+CEkBVxMWZGkAzxU4pAAAAAAAAxB2LUgAAAAAAAIi7tCnf65sXNDn+QMzjD3rgTOn6SwYdY1T98x+Txj/+zedyDEXn7S7PsbBS266cVdhWjiEvQ1uP7eygm+Ls2Sul8cFcvQOgC+Fabeu2z0H5XlDsQOOifM+fIuV7PuH3JIDUs9tDl5i8UOzlb7fcq+Ueq8XXGKvk/uul8Zv/Vc99nntHO4LBxZ3kbxaXyDHUR36SxtdV6GVWavfhqkXlydF9T8xFk4GL8j0XwmL5novOeclQQRg1LoJo/vmsi26KAV/zfx6ak+b/2xAAAAAAAADNDotSAAAAAAAAiLu0Kd/b46uppqCgIObxd36pdU158r5PjWrSQb2l8efP0LZcW/6tj5fnmL6oTBqf07qjHEOrkFailNdCL9+rqaqTxgcLckwyCNeJW7fDtXIMQXEbvYvyPRconQOQbCbe+7kJCfcwu2RrqeZBPVoY1cfj3pLGD/v0WTmGNXUPyHO0DGr3kqcvLJVjqKxfKI2vry5MePlerYM6q5CDvCEQ8Cc8Z1DL7yIOyvciyVD3BvwB1M6SEQellEnyT5zfxU4pAAAAAAAAxF1GuqxQlpVpu3OqK7Tx9dUVRlVep+2siTrYkVIqPo9WZbm2ZBuprZRjqI5qd3ZqxZ8HK1yjPY6yGv37WWsi8hwV9dpB5aVl+t+NCp/2/airKk/499OK1FVJ46P1NXIM6hwufs+4mEOOIaL/3UDqiIbrnNz1bE4aHqv6OlEd1cZXOtmJEUl47uPi9bZG/PGrr9Jfb8vC4mtEfbUcQ7lPyzuqxBzQKi0tTXje4OS1MhpIaN5ihR38G6m2Uts9V12h/5O4MqD9XFZE9RzOX6fvnqsXD+B3sYswQ5zDQR8CJw2M1N0/PgeHrasPQ42gYQ3m9/InXzTFM6yFCxeaLl26JDoMAADQzC1YsMB07tzZpAPyJwAAEI/8KeUXpezdscWLF5v8/PwNrjbaOx026bJPlHLmFHguXeK5dIfn0h2eS3d4LpvXc2lTJXu3r2PHjsbvT4+TD34vf7L4OXaH59Idnks3eB7d4bl0h+cyNfOnlC/fsw9+Y+5q2m8EP9hu8Fy6w3PpDs+lOzyX7vBcNp/nsrBQP6A5FfMni59jd3gu3eG5dIPn0R2eS3d4LlMrf0qP230AAAAAAABIKixKAQAAAAAAIO7SflEqMzPTXH755d7/oeG5dIfn0h2eS3d4Lt3huXSH5zJxeO7d4bl0h+fSDZ5Hd3gu3eG5TM3nMuUPOgcAAAAAAEDySfudUgAAAAAAAIg/FqUAAAAAAAAQdyxKAQAAAAAAIO7SflHqtttuM927dzdZWVlmxx13NB9//HGiQ2p2rrjiCuPz+Zq89evXL9FhNQvvvPOOOeigg0zHjh295+3ZZ59t8nl75Ntll11mOnToYLKzs83QoUPN999/n7B4m/Nzeeyxx673c7rvvvsmLN5kNXbsWDNo0CCTn59v2rVrZw455BAza9asJl9TXV1tTjvtNNO6dWuTl5dnDj/8cLNs2bKExdycn8s99thjvZ/LU045JWExJ6tJkyaZLbfc0hQUFHhvgwcPNq+88krj5/mZjD/yJx35U+zIn9whf3
KHHMoN8qf0y5/SelHqscceM+ecc4536vz06dPNVlttZYYPH26WL1+e6NCanc0339wsWbKk8e29995LdEjNQkVFhfdzZ5P7DbnhhhvMhAkTzB133GE++ugjk5ub6/2M2l8g2LTn0rJJ1No/p4888khcY2wOpk6d6r04ffjhh+aNN94wdXV1ZtiwYd7z2+Dss882L7zwgnniiSe8r1+8eLE57LDDEhp3c30urRNPPLHJz6X9e4+mOnfubK677jrz2WefmU8//dTstddeZsSIEebbb7/1Ps/PZHyRP7lD/hQb8id3yJ/cIYdyg/wpDfOnaBrbYYcdoqeddlrj++FwONqxY8fo2LFjExpXc3P55ZdHt9pqq0SH0ezZv47PPPNM4/uRSCTavn376I033tj4seLi4mhmZmb0kUceSVCUzfO5tEaNGhUdMWJEwmJqrpYvX+49n1OnTm38GQwGg9Ennnii8Wtmzpzpfc20adMSGGnzey6t3XffPXrmmWcmNK7mqmXLltH//Oc//EwmAPmTG+RPbpA/uUP+5BY5lBvkT6mfP6XtTqna2lpvxdBu523g9/u996dNm5bQ2JojuyXabvvt2bOnGTlypJk/f36iQ2r25s6da5YuXdrkZ7SwsNArk+BnNDZvv/22tw24b9++5u9//7tZtWpVokNKeiUlJd7/W7Vq5f3f/t60d6zW/rm05SZdu3bl53ITn8sGDz30kGnTpo0ZOHCgufjii01lZWWCImwewuGwefTRR707pnYbOj+T8UX+5Bb5k3vkT+6RP8WGHMoN8qfUz58yTJpauXKl940pKipq8nH7/nfffZewuJoj+yJ///33ey9UduvklVdeaXbddVfzzTffeLXAiI1NqKwN/Yw2fA4bz249t9tRe/ToYX744Qfzj3/8w+y3337eL91AIJDo8JJSJBIxZ511lhkyZIj3gm/Zn71QKGRatGjR5Gv5udz059I65phjTLdu3bx/lH711Vfmwgsv9M5NePrppxMabzL6+uuvvSTKlt/Ycw+eeeYZM2DAAPPFF1/wMxlH5E/ukD/9Mcif3CJ/ig05lBvkT+mRP6XtohTcsS9MDexBajbJsr8kHn/8cTN69OiExgY0OProoxv/vMUWW3g/q5tttpl392/vvfdOaGzJytbz238cccbJH/dcnnTSSU1+Lu2hvPbn0Sb+9ucTv7D/cLcJlL1j+uSTT5pRo0Z55x8AzRX5E5oD8qfYkEO5Qf6UHvlT2pbv2a1+dnV/3dPl7fvt27dPWFypwK629unTx8yZMyfRoTRrDT+H/Iz+MWyphP09wM/php1++unmxRdfNFOmTPEOSWxgf/Zs+U5xcXGTr+fnctOfyw2x/yi1+Llcn72b16tXL7Pddtt5nXnswby33HILP5NxRv70xyF/coP86Y9F/vT7yKHcIH9Kn/zJn87fHPuNeeutt5psD7Tv2+1tiF15ebm3Sm1XrBE7u03a/kJY+2e0tLTU6yLDz6hu4cKF3pkI/Jw2Zc85tUmA3do7efJk7+dwbfb3ZjAYbPJzabdL23NQ+LnctOdyQ+ydLIufy99nX7Nramr4mYwz8qc/DvmTG+RPfyzyp19HDuUG+VP65U9pXb5n2xnb7Wvbb7+92WGHHcz48eO9g7+OO+64RIfWrJx33nnmoIMO8rac2zaStkW0vYv65z//OdGhNYsEdO0VfXs4p/2lag/ys4fM2Rrqq6++2vTu3dv7hXzppZd6tdOHHHJIQuNubs+lfbNndRx++OFeomqT/gsuuMC7a2BbRKPpNumHH37YPPfcc96ZJg015faQ2OzsbO//tqzE/v60z2tBQYEZM2aM9+K10047JTr8ZvVc2p9D+/n999/ftG7d2jsTwbbm3W233bzyCPzCHmBqS53s78WysjLvebOlI6+99ho/kwlA/uQG+VPsyJ/cIX9yhxzKDfKnNMyfomnu1ltvjXbt2jUaCoW8FscffvhhokNqdo466qhohw4dvOewU6
dO3vtz5sxJdFjNwpQpU7y2m+u+2fa7DW2NL7300mhRUZHXynjvvfeOzpo1K9FhN7vnsrKyMjps2LBo27Ztvdan3bp1i5544onRpUuXJjrspLOh59C+3XfffY1fU1VVFT311FO9lrI5OTnRQw89NLpkyZKExt0cn8v58+dHd9ttt2irVq28v9+9evWKnn/++dGSkpJEh550jj/+eO/vrX2dsX+P7e/C119/vfHz/EzGH/mTjvwpduRP7pA/uUMO5Qb5U/rlTz77n/gugwEAAAAAACDdpe2ZUgAAAAAAAEgcFqUAAAAAAAAQdyxKAQAAAAAAIO5YlAIAAAAAAEDcsSgFAAAAAACAuGNRCgAAAAAAAHHHohQAAAAAAADijkUpAAAAAAAAxB2LUgDwO4499lhzyCGHNL6/xx57mLPOOsskO5/PZ5599tlEhwEAANIUORSA35Pxu18BoNknAw888MB6H//+++9Nr169EhJTc/f000+bYDBokt2SJUtMy5YtEx0GAADNEjmUe+RQANbFohSQBvbdd19z3333NflY27Zt1/u62tpaEwqF4hhZ89SqVSvTHLRv3z7RIQAA0KyRQ7lFDgVgXZTvAWkgMzPTe3Fd+y0QCHhbqE8//XRvG3WbNm3M8OHDva//5ptvzH777Wfy8vJMUVGR+etf/2pWrlzZOF9FRYX529/+5n2+Q4cO5qabblpvO/aGtj23aNHC3H///Y3vL1iwwPzpT3/yPm6TlBEjRpiffvppvS3f//73v73rtG7d2px22mmmrq6u8WtqamrMhRdeaLp06eI9Tnvn8p577jHRaNT7sx27ti+++MKLbc6cORt8rsLhsDnnnHO8mOz1LrjgAm+uta37WLt3726uvvrqxuekW7du5vnnnzcrVqzwHpP92JZbbmk+/fTTJvO89957ZtdddzXZ2dle/GeccYb33K4977XXXmuOP/54k5+fb7p27WruuuuuJgmw/f7Z5yYrK8u77tixY3/1e/D111+bvfbay7uefWwnnXSSKS8v36TnGwCAdEIO9QtyKHIo4I/AohSQ5uy2dHtn7/333zd33HGHKS4u9l50t9lmGy8BePXVV82yZcu8xKfB+eefb6ZOnWqee+458/rrr5u3337bTJ8+fZOua1+kbQJnE4V3333Xu75NPOwdSZsoNJgyZYr54YcfvP/bWG1CtnZSZpOYRx55xEyYMMHMnDnT3Hnnnd48Npmwici6dzft+7vtttuvbru3yaGd/9577/USntWrV5tnnnnmdx/PuHHjzJAhQ8znn39uDjjgAC8JtbH95S9/8Z6bzTbbzHu/ITmzj8k+1sMPP9x89dVX5rHHHvOuZxOkdePZfvvtvXlPPfVU8/e//93MmjXL+5x9zDZxe/zxx72PPfTQQ14StiE2UbPPt92K/sknn5gnnnjCvPnmm+td7/eebwAA8DNyqKbIocihgJhEAaS0UaNGRQOBQDQ3N7fx7YgjjvA+t/vuu0e32WabJl//r3/9Kzps2LAmH1uwYIHNAqKzZs2KlpWVRUOhUPTxxx9v/PyqVaui2dnZ0TPPPLPxY/brn3nmmSbzFBYWRu+77z7vzw8++GC0b9++0Ugk0vj5mpoab57XXnutMfZu3bpF6+vrG7/myCOPjB511FHen2089jpvvPHGBh/7okWLvMf+0Ucfee/X1tZG27RpE73//vt/9fnq0KFD9IYbbmh8v66uLtq5c+foiBEjGj9mn7e1H6uN8S9/+Uvj+0uWLPHiuvTSSxs/Nm3aNO9j9nPW6NGjoyeddFKTa7/77rtRv98fraqq2uC89rlq165ddNKkSd77Y8aMie61115NnsO1rf09uOuuu6ItW7aMlpeXN37+pZde8q63dOnSjXq+AQBIJ+RQ5FAWORTwx+JMKSAN7LnnnmbSpEmN7+fm5jb+ebvttmvytV9++aV3h8feKVuXvftTVVXl3YXbcccdGz9ut4337dt3k2Ky17Hbv+1dvrVVV1
d712mw+eabe9vkG9gt0XYLdcM2cvu53XfffYPX6Nixo3fHzd6x22GHHcwLL7zgbVU/8sgjN/j1JSUl3sGWaz+2jIwM7y7butvP12W3ljew2/WtLbbYYr2PLV++3Nv6bx+/vbtn78w1sNeIRCJm7ty5pn///uvNa+9c2rF2joat4vvss4/33Ns7hgceeKAZNmzYBuOzd0C32mqrJt97e1fSXs/eIWyI77eebwAA0g05FDkUORTwx2JRCkgD9kX017Zar/0Ca9n6+IMOOshcf/31632tfXH9tXME1mVf/NdNQtauq7fXscnc2gnFhg4QXbdDi53XJgGWrev/PSeccIK3DdxuDbfbzo866iiTk5NjXFs7Thvjr32sIXb7+E8++WTvDIR12XMPNjRvwzwNc2y77bZe8vXKK69428htecDQoUPNk08+6eRxrHs9AADSDTkUOVQsj2Pd6wH4dSxKAWjCvkg/9dRTXl29vcO1LlvXb190P/roo8YX/jVr1pjZs2c3udtmkyJ7x2zt9smVlZVNrmPPAGjXrp0pKCiIKVZ7F82+2NuzGWwisSH777+/lzTau5z2bId33nnnV+crLCz0kkb72OyZCVZ9fb357LPPvHhdsvPNmDFDbiltnzubJNq3I444wrvbZ89wWLe7jb1raM81sOciNCTR9gwKv9+/yXdoAQDA+sihyKEAbDoOOgfQhO0UYl+Q//znP3uHOdpt4K+99po57rjjvK4qdkv66NGjvYM6J0+e7HWZsVug7Qvz2uxBnxMnTvQOl7SHfZ5yyilN7iCNHDnS61ZjO6vYQzrt3Sp72Ke967Vw4cKNitUmfaNGjfIO47QdUhrmsIdWNrDbqG18F198sendu7cZPHjwb8555plnmuuuu86b77vvvvMOxrQHl7pmu9188MEH3iGZdgu9TTjtoafrHpr5W26++WbvgFIbp01o7cGbdmu67XqzLvt82+4y9vmy3zNbXjBmzBjvDmjDtnMAABA7cihyKACbjkUpAOudIWDv/tjkydbW2ztptnWvfZFuSJpuvPFGrw2v3aJu767tsssu652rYDue2Ba99uuOOeYYc9555zXZ8m3/bO+42TuFhx12mHcXyiZq9jyETbnrZ+/e2btbNvHp16+fOfHEE5u0BLbsvPYMB5sU/p5zzz3XSzJs4mGTL3tew6GHHmpcs+cc2LuTNhGyz5Ht1HPZZZd5z//GsrHdcMMN3nkNgwYN8lpBv/zyy+sltw3Pt02MbbJsv9Y+Z3vvvbeX9AIAAB05FDkUgE3ns6edxzAOAJrYY489zNZbb23Gjx9vko29i2iThwULFnBHCwAAJBVyKADpjDOlAKQs2yVmxYoV5oorrvC6xZBMAQAA/D5yKADxQvkegJRlzwro1q2bd56B3aINAACA30cOBSBeKN8DAAAAAABA3LFTCgAAAAAAAHHHohQAAAAAAADijkUpAAAAAAAAxB2LUgAAAAAAAIg7FqUAAAAAAAAQdyxKAQAAAAAAIO5YlAIAAAAAAEDcsSgFAAAAAACAuGNRCgAAAAAAACbe/g8SdR8UqjbvMwAAAABJRU5ErkJggg==",

"text/plain": [
"<Figure size 1200x400 with 2 Axes>"
]
},
"metadata": {},
"output_type": "display_data"
}
],
"source": [
"import matplotlib.pyplot as plt\n",
"\n",
"cos_m, sin_m = create_rotary_embeddings(64, 100)\n",
"\n",
"# Heatmaps of the two tables: rows are positions, columns are frequency indices\n",
"fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(12, 4))\n",
"ax1.imshow(cos_m.numpy(), aspect='auto', cmap='RdBu')\n",
"ax1.set_title('Cosine Matrix')\n",
"ax1.set_xlabel('Frequency dimension')\n",
"ax1.set_ylabel('Position')\n",
"\n",
"ax2.imshow(sin_m.numpy(), aspect='auto', cmap='RdBu')\n",
"ax2.set_title('Sine Matrix')\n",
"ax2.set_xlabel('Frequency dimension')\n",
"ax2.set_ylabel('Position')\n",
"plt.tight_layout()"
]
},
{

|

||

"cell_type": "code",

|

||

"execution_count": 6,

|

||

"id": "4fe79d02",

|

||

"metadata": {},

|

||

"outputs": [],

|

||

"source": [

|

||

"import torch\n",
"from torch import nn\n",
"\n",
"class RoPE(nn.Module):\n",
"    def __init__(self, head_size: int, max_seq_len: int, base: int = 10_000):\n",
"        super().__init__()\n",
"        assert head_size % 2 == 0, \"head_size must be even\"\n",
"\n",
"        # Inverse frequencies: base^(-2i / head_size) for i in [0, head_size // 2)\n",
"        freqs = 1.0 / (base ** (2 * torch.arange(head_size // 2).float() / head_size))\n",
"\n",
"        # Positions 0 .. max_seq_len - 1\n",
"        positions = torch.arange(max_seq_len).float()\n",
"\n",
"        # Angle matrix (outer product); same result as torch.outer(positions, freqs)\n",
"        freq_matrix = positions.unsqueeze(1) * freqs.unsqueeze(0)  # [max_seq_len, head_size//2]\n",
"\n",
"        # Cosine and sine matrices\n",
"        self.register_buffer('cos_matrix', torch.cos(freq_matrix))\n",
"        self.register_buffer('sin_matrix', torch.sin(freq_matrix))\n",
"\n",
"    def forward(self, x: torch.Tensor) -> torch.Tensor:\n",
"        # x: float tensor of shape [batch_size, seq_len, head_size]\n",
"        seq_len = x.size(1)\n",
"        # Take the rows we need and cast them to the dtype of x\n",
"        cos = self.cos_matrix[:seq_len].to(x.dtype)  # [seq_len, head_size//2]\n",
"        sin = self.sin_matrix[:seq_len].to(x.dtype)  # [seq_len, head_size//2]\n",
"\n",
"        # Split into even and odd columns\n",
"        x_even = x[:, :, 0::2]  # [batch_size, seq_len, head_size//2]\n",
"        x_odd = x[:, :, 1::2]   # [batch_size, seq_len, head_size//2]\n",
"\n",
"        # Rotate each (even, odd) pair by its position-dependent angle\n",
"        x_rotated_even = x_even * cos - x_odd * sin\n",
"        x_rotated_odd = x_even * sin + x_odd * cos\n",
"\n",
"        # Interleave the two halves back together\n",
"        x_rotated = torch.stack([x_rotated_even, x_rotated_odd], dim=-1)\n",
"        x_rotated = x_rotated.flatten(-2)  # [batch_size, seq_len, head_size]\n",
"\n",
"        return x_rotated"

]
},
{
"cell_type": "markdown",
"id": "c9fe652d",
"metadata": {},
"source": []
},
{
"cell_type": "markdown",
"id": "1133b962",
"metadata": {},
"source": []
},
{
"cell_type": "code",
"execution_count": 7,
"id": "fe1274b1",
"metadata": {},
"outputs": [],
"source": [
"import torch\n",
"from torch import nn\n",
"import torch.nn.functional as F\n",
"from math import sqrt\n",
"from torch import Tensor\n",
"\n",
"class TokenEmbeddings(nn.Module):\n",
"    def __init__(self, vocab_size: int, emb_size: int):\n",
"        super().__init__()\n",
"        self._embedding = nn.Embedding(\n",
"            num_embeddings=vocab_size,\n",
"            embedding_dim=emb_size\n",
"        )\n",
"\n",
"    def forward(self, x: Tensor) -> Tensor:\n",
"        return self._embedding(x)\n",
"\n",
"    @property\n",
"    def num_embeddings(self) -> int:\n",
"        return self._embedding.num_embeddings\n",
"\n",
"    @property\n",
"    def embedding_dim(self) -> int:\n",
"        return self._embedding.embedding_dim\n",
"\n",
"\n",
"class HeadAttention(nn.Module):\n",
"\n",
"    def __init__(self, emb_size: int, head_size: int, max_seq_len: int, rope: RoPE):\n",
"        super().__init__()\n",
"        self._emb_size = emb_size\n",
"        self._head_size = head_size\n",
"        self._max_seq_len = max_seq_len\n",
"        self._rope = rope\n",
"\n",
"        self._k = nn.Linear(emb_size, head_size)\n",
"        self._q = nn.Linear(emb_size, head_size)\n",
"        self._v = nn.Linear(emb_size, head_size)\n",
"\n",
"        # Lower-triangular causal mask, stored as a boolean buffer\n",
"        mask = torch.tril(torch.ones(max_seq_len, max_seq_len))\n",
"        self.register_buffer('_tril_mask', mask.bool())\n",
"\n",
"    def forward(self, x: torch.Tensor, use_cache: bool = True, cache: tuple = None) -> tuple:\n",
"        seq_len = x.shape[1]\n",
"        if seq_len > self._max_seq_len:\n",
"            raise ValueError(f\"Sequence length {seq_len} exceeds the maximum {self._max_seq_len}\")\n",
"\n",
"        k = self._k(x)  # [B, T, hs]\n",
"        q = self._q(x)  # [B, T, hs]\n",
"        v = self._v(x)  # [B, T, hs]\n",
"\n",
"        # ✅ Apply RoPE to Q and K (NOT to V!)\n",
"        # Note: RoPE.forward counts positions from 0 within x, so decoding with a\n",
"        # cache that passes only the new tokens would likely need a position offset.\n",
"        q = self._rope(q)  # [B, T, hs]\n",
"        k = self._rope(k)  # [B, T, hs]\n",
"\n",
"        if cache is not None:\n",

|